· Strategy · 2 min read

Why AI Pilots Fail: The 80% Stat

Most enterprise AI fails not because of the model, but because of the “Last Mile” integration costs. We break down the hidden latency budget of RAG.

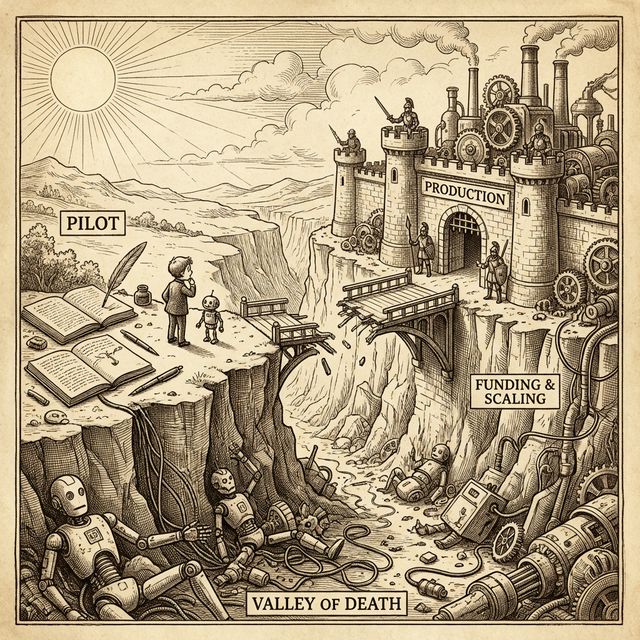

The Valley of Death

If you talk to enough CIOs, you start to hear the same number whispered in boardrooms: 80%. That is the estimated percentage of Generative AI pilots that will never see a production environment in 2026. Gartner puts the number of abandoned projects at 30% minimum, while MIT research suggests a failure rate as high as 95% for early entrants.

Why? It’s not the models. GPT-4 and Gemini 1.5 Pro are demonstrably capable of reasoning. The failure happens in the “Last Mile”—the excruciating gap between a Python notebook that works 70% of the time and a production service that works 99.9% of the time with sub-second latency.

The Latency Tax of RAG

The most common pilot today is “Chat with your Data” (RAG). In a notebook POC, you use a local in-memory vector store and skip re-ranking. It feels instant (~1.5s). In production, you hit the Latency Wall.

Here is the Latency Budget of a robust enterprise RAG pipeline, based on 2025 benchmarks (Cohere, Pinecone):

| Component | Time (Optimized) | Time (Enterprise Legacy) | Description |
|---|---|---|---|
| Embedding | 45ms | 200ms | OpenAI text-embedding-3-small vs legacy BERT models on CPU. |
| Vector Search (HNSW) | 20ms | 500ms | Pinecone/Milvus (p99) vs unoptimized pgvector (IVFFlat). |
| Document Retrieval | 50ms | 800ms | Fetching 50KB payloads from S3/Blob Store. |
| Re-ranking | 392ms | 1,200ms | The Bottleneck. Cohere Rerank 3.5 Mean Latency (Agentset.ai benchmarks). |
| Guardrails (Input) | 100ms | 400ms | Presidio PII scanning + Lakera Jailbreak detection. |
| LLM Inference | 800ms | 4,000ms | Time to First Token (TTFT) vs full generation. |
| Total | ~1.5s | ~7.0s | The difference between “Flow” and “Churn”. |

A notebook skips the 392ms Re-ranking and the 100ms Guardrails. But without Re-ranking, accuracy drops by ~20%. Without Guardrails, you can’t ship compliant apps. You are stuck in a trap: Ship fast and hallucinate, or ship slow and lose users.
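
To make the arithmetic concrete, here is a minimal sketch that sums the stage timings from the table and then removes the two stages a notebook skips. The numbers are copied from the table; the dictionary layout and the `total_ms` helper are illustrative names, not part of any benchmark tooling.

```python
from typing import Iterable

# Per-stage latency in milliseconds, copied from the table:
# (optimized, enterprise legacy).
BUDGET_MS = {
    "embedding": (45, 200),
    "vector_search": (20, 500),
    "document_retrieval": (50, 800),
    "re_ranking": (392, 1200),
    "guardrails_input": (100, 400),
    "llm_inference": (800, 4000),
}

# Stages the text says a notebook demo skips.
NOTEBOOK_SKIPS = ("re_ranking", "guardrails_input")


def total_ms(column: int, skip: Iterable[str] = ()) -> int:
    """Sum one column (0 = optimized, 1 = legacy), ignoring skipped stages."""
    skipped = set(skip)
    return sum(ms[column] for stage, ms in BUDGET_MS.items() if stage not in skipped)


optimized = total_ms(0)                # 1407 ms, the table's ~1.5s
legacy = total_ms(1)                   # 7100 ms, the table's ~7.0s
notebook = total_ms(0, NOTEBOOK_SKIPS) # 915 ms with re-ranking and guardrails removed

print(f"optimized: {optimized} ms, legacy: {legacy} ms, notebook path: {notebook} ms")
```

On these figures, re-ranking and guardrails together account for 492ms of the 1,407ms optimized path, which is why dropping them in a demo is so tempting.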

The “Integration Tax”: Why 80% Fail

Beyond latency, Gartner reports that 63% of organizations lack confidence in their data management. This manifests as “Data Gravity” failure modes that you don’t see in a clean POC:

- Stale Indices: Your vector index is updated nightly. The customer moved this morning. The AI confidently mails the old address.

- Permission Silos: The AI answers “What is the CEO’s salary?” because the RAG pipeline bypassed SharePoint’s ACLs.

- Dirty Data: Ingesting 10,000 PDFs without OCR correction. The model sees `C0mP4ny P0l1cy` and hallucinates.
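
The first two failure modes can be guarded against with a filter between retrieval and generation. The sketch below is one possible shape, not a reference implementation: the `Chunk` type, its fields, and `usable_chunks` are hypothetical, and it assumes the pipeline mirrors ACL groups at ingest time and can cheaply look up each source record's last-modified time at query time.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class Chunk:
    """Hypothetical retrieved chunk with metadata copied from the source system."""
    text: str
    allowed_groups: frozenset[str]  # ACL groups mirrored at ingest time (assumption)
    indexed_at: datetime            # when the chunk was embedded
    source_modified_at: datetime    # last-modified time of the source record


def usable_chunks(chunks: list[Chunk], user_groups: set[str]) -> list[Chunk]:
    """Keep chunks the user may read and whose source is unchanged since indexing."""
    kept = []
    for chunk in chunks:
        if not (chunk.allowed_groups & user_groups):
            continue  # permission silo: user shares no group with the document
        if chunk.source_modified_at > chunk.indexed_at:
            continue  # stale index: source changed after it was embedded
        kept.append(chunk)
    return kept


# Example: an employee in "staff" asks a question that matches three chunks.
nightly = datetime(2026, 1, 10, 2, 0)
chunks = [
    Chunk("Travel policy v3", frozenset({"staff"}), nightly, datetime(2026, 1, 5)),
    Chunk("CEO compensation", frozenset({"board"}), nightly, datetime(2026, 1, 5)),
    Chunk("Customer address", frozenset({"staff"}), nightly, datetime(2026, 1, 10, 9, 0)),
]
safe = usable_chunks(chunks, {"staff"})
print([c.text for c in safe])
```

Only the travel policy survives: the compensation chunk fails the ACL check, and the address chunk is dropped because the record changed after the nightly index run. Dropping stale chunks is the conservative choice; re-fetching the live record is the alternative if the latency budget allows it.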

Escaping the Pilot Trap

To survive the 80% purge predicted for 2026, stop building open-ended “Chatbots.” Start building Deterministic Flows.

- Don’t let the user ask just anything.

- Do offer specific “Slash Commands” that map to optimized, cached queries.

- Pre-compute embeddings for static content.

- Async the heavy reasoning steps. Don’t make the user stare at a spinner while you chain-of-thought.
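
As a rough illustration of the slash-command idea, the sketch below maps each command to one fixed query template and caches the result per prompt. Everything here is hypothetical: the `COMMANDS` table, the `run_query` stand-in for the real retrieval and generation call, and the `handle` dispatcher. It covers only the command and caching bullets, not the async step.

```python
from functools import lru_cache

# Hypothetical command table: each slash command maps to one fixed, pre-tuned prompt.
COMMANDS = {
    "/policy": "Summarize the current policy on {arg}.",
    "/owner": "Who owns the system named {arg}?",
}


@lru_cache(maxsize=1024)
def run_query(prompt: str) -> str:
    """Stand-in for the expensive retrieval + generation call; cached per prompt."""
    return f"[answer for: {prompt}]"


def handle(message: str) -> str:
    """Dispatch a slash command; reject free-form input instead of guessing."""
    command, _, arg = message.strip().partition(" ")
    template = COMMANDS.get(command)
    if template is None:
        return "Unknown command. Try: " + ", ".join(sorted(COMMANDS))
    # Normalizing the argument raises the cache hit rate for equivalent requests.
    return run_query(template.format(arg=arg.strip().lower()))


print(handle("/policy Travel"))
print(handle("/policy travel"))  # same normalized prompt, served from the cache
print(handle("What is the CEO's salary?"))
```

Because the set of prompts is closed, each one can be tuned, tested, and cached ahead of time, which is much harder to do for arbitrary free-text questions.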

Narrow the scope. Pre-compute the context. Survive the valley.