Performance over Portability? Running Local LLMs on the Asus ProArt 13

Can a thin-and-light PC handle production-level LLMs? We benchmark the Asus ProArt 13, looking at its RTX 4060, the Ryzen AI 9 NPU, and the 8GB VRAM bottleneck.

Autonomous agents are prone to infinite reasoning loops and 'democratic' indecision. We explore the Supervisor pattern in LangGraph, MCP, and why orchestration beats choreography.

If your training loop isn't fault-tolerant, you're paying a 40% 'insurance tax' to your cloud provider. We look at the architectural cost of 30-second preemption notices.

When your model doesn't fit on one GPU, you're no longer just learning to code; you're learning physics. We dive deep into the primitives of NCCL, distributed collectives, and why the interconnect is the computer.

Inference price isn't a fixed cost; it's an engineering variable. We break down the three distinct levers of efficiency: Model Compression, Runtime Optimization, and Deployment Strategy.

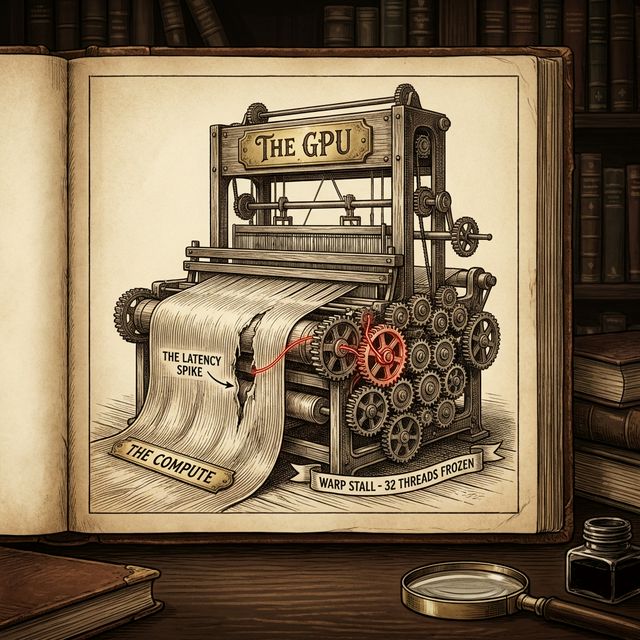

A war story of chasing a 5 ms latency spike to a single loose thread. How to read Nsight Systems and spot warp divergence.