
The Reliability Tax: Why Cheap GPUs Cost More

In the Llama 3 training run, Meta experienced 419 failures in 54 days. This post breaks down the unit economics of 'Badput' - the compute time lost to crashes - and why reliability is the only deflationary force in AI.

The Sticker Shock Mirage

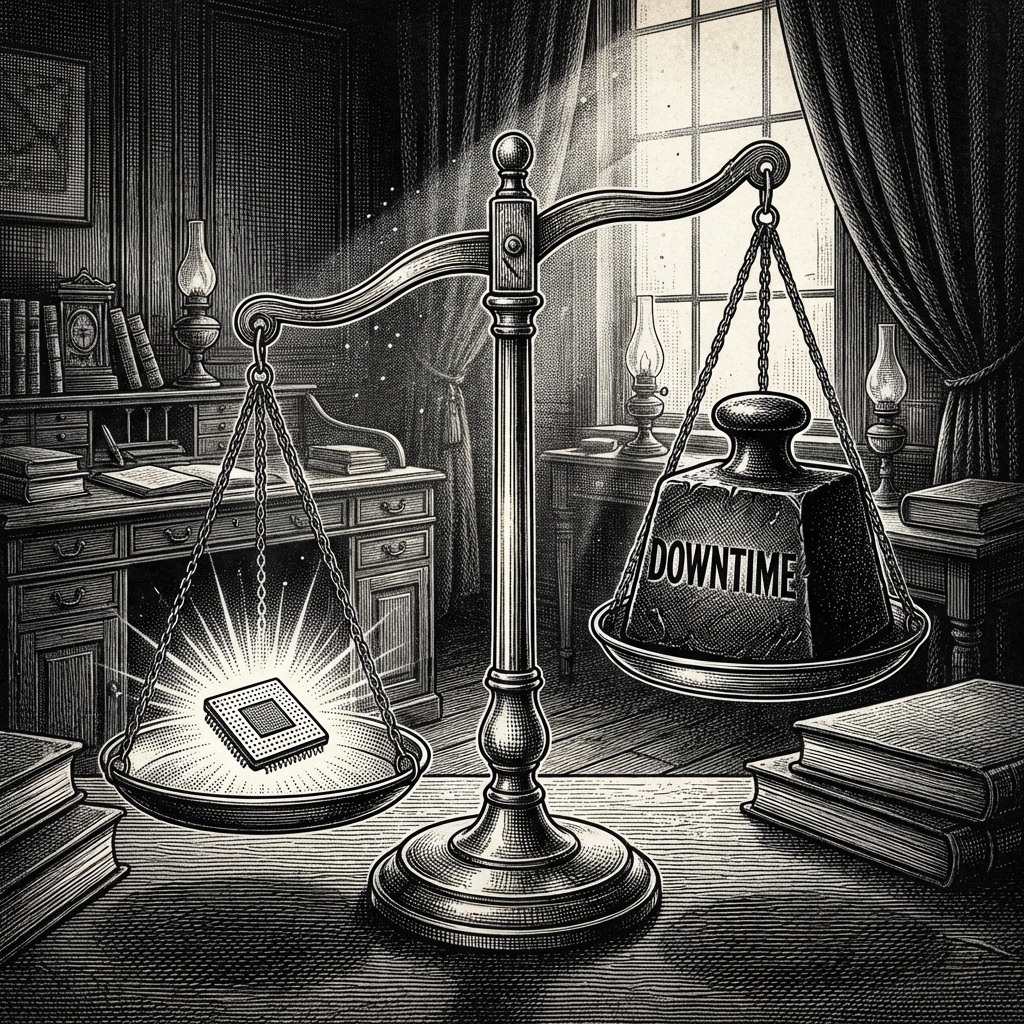

If you put an H100 SXM5 node from a budget cloud provider next to one from a hyperscaler, the specs look identical on paper. 8x GPUs, 900GB/s NVLink, 3.2Tbps InfiniBand. But the price tag might differ by 40%. The CFO looks at the spreadsheet, sees the 40% delta, and asks the obvious question: “Why are we paying a premium for the same silicon?”

It’s a fair question. It’s also the wrong one.

In the world of distributed AI training, you are not just buying chips. You are buying Model FLOPs Utilization (MFU) over time. And the biggest enemy of MFU isn’t slow silicon; it’s a short Mean Time Between Failures (MTBF).

The Physics of Failure: 419 Crashes in 54 Days

Let’s look at the data from Meta’s Llama 3 405B training run. They used 16,384 H100 GPUs. Over a 54-day period, they experienced 419 unexpected component failures. That is roughly one failure every 3 hours.

What caused them?

- GPU HW Issues (58.7%): HBM3 bit flips, SRAM corruption, and thermal throttles.

- Host HW Issues (17.2%): CPU failures, PCIe bus errors.

- Network Issues (8.4%): Optical transceiver degradation and switch port flaps.

This is the reality of scale. At 16k GPUs, failure is not an anomaly; it is the heartbeat of the system.
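The "one failure every 3 hours" figure follows directly from the numbers above. The sketch below reproduces it and then rescales it to a 256-GPU cluster. The rescaling is an assumption, not something the Llama 3 data states: it treats failures as independent, so the failure rate grows linearly with GPU count. The function names are illustrative.

```python
# Figures quoted in the text above.
FAILURES = 419
DAYS = 54
GPUS = 16_384


def cluster_mtbf_hours(failures: int, days: float) -> float:
    """Mean hours between failures for the whole cluster."""
    return days * 24 / failures


def scale_mtbf_hours(mtbf_hours: float, from_gpus: int, to_gpus: int) -> float:
    """Rescale MTBF to a different cluster size.

    Assumption: failures are independent, so the failure rate grows
    linearly with GPU count and MTBF shrinks in proportion.
    """
    return mtbf_hours * from_gpus / to_gpus


mtbf_16k = cluster_mtbf_hours(FAILURES, DAYS)
mtbf_256 = scale_mtbf_hours(mtbf_16k, GPUS, 256)

print(f"16,384 GPUs: one failure every {mtbf_16k:.2f} h")
print(f"256 GPUs (same per-GPU rate): one failure every {mtbf_256:.0f} h")
```

Under that linear-scaling assumption, a 256-GPU cluster built from comparable hardware would see a failure about every 198 hours. Real clusters can do better or worse depending on cooling, interconnect, and maintenance practices.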

Calculating the “Reliability Tax”

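When a job crashes, you don’t just “restart” it. You pay a tax: the time spent recovering, plus the progress lost since the last checkpoint. Let’s define the Badput (wasted compute) formula:

Daily Cost of Failure = failures per day × (recovery time + lost progress) × number of GPUs × price per GPU-hour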

Now let’s apply this to a smaller, more common cluster of 256 GPUs on a “Budget Cloud” vs. a “Premium Cloud.”

Scenario A: Budget Cloud ($2.00/GPU/hr)

- MTBF: 24 hours (Frequent failures due to older interconnects/cooling).

- Checkpoint Frequency: Every 2 hours.

- Recovery Time: 30 minutes (slower storage/networking).

- Lost Progress: Avg 1 hour (half the checkpoint interval).

Daily Cost of Failure: 1 failure/day × (0.5h reboot + 1h lost) × 256 GPUs × $2.00 = **$768/day wasted.**

Scenario B: Premium Cloud ($2.80/GPU/hr)

- MTBF: 120 hours (5 days).

- Checkpoint Frequency: Every 4 hours.

- Recovery Time: 15 minutes (optimized storage/networking).

- Lost Progress: Avg 2 hours.

Daily Cost of Failure: 0.2 failures/day × (0.25h reboot + 2h lost) × 256 GPUs × $2.80 ≈ **$322/day wasted.**
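The two scenarios can be checked with a short script. This is a minimal sketch using only the figures listed above; the `Cluster` class and its field names are illustrative, and lost progress is taken as half the checkpoint interval, as in the scenario descriptions.

```python
from dataclasses import dataclass


@dataclass
class Cluster:
    name: str
    price_per_gpu_hour: float         # USD
    mtbf_hours: float                 # mean time between failures
    checkpoint_interval_hours: float
    recovery_hours: float             # time to reboot and reload
    gpus: int = 256

    def failures_per_day(self) -> float:
        return 24 / self.mtbf_hours

    def lost_progress_hours(self) -> float:
        # On average a crash lands halfway between two checkpoints.
        return self.checkpoint_interval_hours / 2

    def daily_badput_cost(self) -> float:
        wasted_hours = self.recovery_hours + self.lost_progress_hours()
        return (self.failures_per_day() * wasted_hours
                * self.gpus * self.price_per_gpu_hour)


budget = Cluster("Budget Cloud", 2.00, mtbf_hours=24,
                 checkpoint_interval_hours=2, recovery_hours=0.5)
premium = Cluster("Premium Cloud", 2.80, mtbf_hours=120,
                  checkpoint_interval_hours=4, recovery_hours=0.25)

for c in (budget, premium):
    print(f"{c.name}: ${c.daily_badput_cost():,.2f}/day wasted")
```

Changing `checkpoint_interval_hours` or `mtbf_hours` shows how sensitive the daily waste is to each input, which is useful when a provider quotes only one of them.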

The TCO Verdict

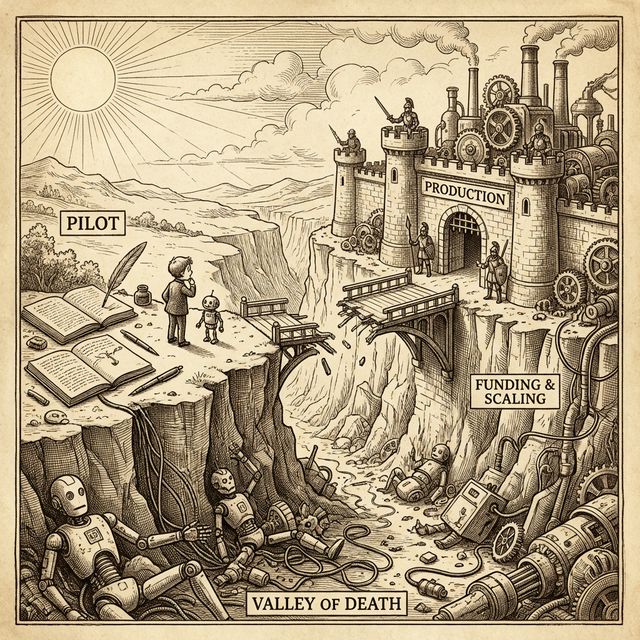

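Even though the Premium Cloud is 40% more expensive per GPU-hour, the gap in Effective Cost per Trained Token narrows once you factor in Badput. Crashes alone waste about 6% of the budget provider’s daily spend in Scenario A ($768 of $12,288) versus about 2% for the premium provider, and the budget provider’s total “Badput” tax can reach 15-20% once the hidden costs described below are included. And this doesn’t even account for the most expensive asset: Developer Morale.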
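One way to make the comparison concrete is to divide each sticker price by the fraction of time that produces useful work. The sketch below does this for the crash-only Badput implied by Scenarios A and B, and for the 15-20% range quoted above. The function names are illustrative, and treating Badput as a simple fraction of paid hours is a simplifying assumption.

```python
def crash_badput_fraction(mtbf_hours: float, recovery_hours: float,
                          checkpoint_interval_hours: float) -> float:
    """Fraction of paid time lost to crashes (recovery + half an interval)."""
    wasted_per_failure = recovery_hours + checkpoint_interval_hours / 2
    return wasted_per_failure / mtbf_hours


def effective_price(price_per_gpu_hour: float, badput_fraction: float) -> float:
    """Price per *useful* GPU-hour once wasted time is excluded."""
    if not 0 <= badput_fraction < 1:
        raise ValueError("badput_fraction must be in [0, 1)")
    return price_per_gpu_hour / (1 - badput_fraction)


budget_crash = crash_badput_fraction(24, 0.5, 2)      # Scenario A
premium_crash = crash_badput_fraction(120, 0.25, 4)   # Scenario B
premium_eff = effective_price(2.80, premium_crash)

# Crash-only Badput first, then the 15-20% range quoted in the text.
for label, frac in [("crash-only", budget_crash), ("15%", 0.15), ("20%", 0.20)]:
    budget_eff = effective_price(2.00, frac)
    premium_gap = premium_eff / budget_eff - 1
    print(f"budget badput {label}: ${budget_eff:.2f} vs ${premium_eff:.2f} "
          f"per useful GPU-hour, premium gap {premium_gap:.0%}")
```

With crash-only Badput the premium gap shrinks only modestly; it closes further as the budget provider's Badput fraction grows, which is why measuring that fraction matters more than the sticker price.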

The Hidden Costs of “Cheap”

1. The Interconnect Tax (Jitter)

Cheap clouds often advertise “400Gbps networking,” but they don’t mention Tail Latency. In All-Gather operations, every GPU waits for the last piece of data to arrive, so the speed of the cluster is determined by the slowest packet. If your cloud provider oversubscribes their spine switches, your expensive H100s can spend as much as 30% of their time waiting for data.

Insight: You are paying for 100% of the GPU but using 70% of it. Per useful GPU-hour, that is equivalent to a 43% markup on the unit price (1 / 0.7 ≈ 1.43).

2. The Checkpoint IO Bottleneck

The Llama 3 paper noted that checkpointing was a major overhead. If your cloud provider uses standard NFS instead of high-throughput parallel storage (like Lustre or distinct GCS paths), saving a 4TB checkpoint can take 40 minutes instead of 4 minutes. If checkpoints are written synchronously, your GPUs sit idle for those 40 minutes. That is pure waste.
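The 40-minute and 4-minute figures imply a sustained write throughput, which is the number to ask a storage vendor about. The sketch below backs it out and prices the idle time. It assumes decimal units (1 TB = 1000 GB), a synchronous checkpoint that stalls every GPU, and the 256-GPU, $2.00/GPU/hr cluster from Scenario A; the function names are illustrative.

```python
def required_throughput_gb_per_s(size_tb: float, minutes: float) -> float:
    """Sustained write throughput needed to save a checkpoint in `minutes`."""
    return size_tb * 1000 / (minutes * 60)   # assumes 1 TB = 1000 GB


def idle_cost_per_checkpoint(gpus: int, price_per_gpu_hour: float,
                             minutes: float) -> float:
    """Cost of GPUs waiting on one checkpoint write.

    Assumption: the write is synchronous, so all GPUs are idle throughout.
    """
    return gpus * price_per_gpu_hour * minutes / 60


for minutes in (40, 4):
    throughput = required_throughput_gb_per_s(4, minutes)
    cost = idle_cost_per_checkpoint(256, 2.00, minutes)
    print(f"{minutes:>2} min write: {throughput:.1f} GB/s sustained, "
          f"${cost:.2f} of idle GPU time per checkpoint")
```

Asynchronous checkpointing can hide part of this cost, so treat the output as an upper bound for a given storage speed.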

Buying Strategically

When the CTO presents a hardware purchase plan, the Board should ask three questions that move beyond “Unit Price”:

- “What is the guaranteed / historical recovery time (RTO) for a node failure?”

- “What is the effective MFU of this architecture?” (Run a benchmark on the actual systems to validate this.)

- “Is the storage co-located with the compute and optimized for AI workloads?” (Saving checkpoints across regions is the silent killer of training speed.)
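For the MFU question, a benchmark only needs measured training throughput. The sketch below uses the common approximation of 6 FLOPs per parameter per token for a dense transformer, which ignores attention FLOPs. The peak figure of 989 TFLOP/s is an assumption (the commonly quoted dense BF16 peak for an H100 SXM; check the vendor datasheet), and the model size and tokens-per-second values are hypothetical placeholders.

```python
# Assumption: dense BF16 peak per H100 SXM; verify against the datasheet.
H100_PEAK_BF16_FLOPS = 989e12


def mfu(params: float, tokens_per_second: float, num_gpus: int,
        peak_flops_per_gpu: float = H100_PEAK_BF16_FLOPS) -> float:
    """Model FLOPs Utilization: achieved training FLOP/s over peak FLOP/s.

    Uses the ~6 * params FLOPs-per-token approximation for dense
    transformers (forward + backward), ignoring attention FLOPs.
    """
    achieved_flops = 6 * params * tokens_per_second
    return achieved_flops / (num_gpus * peak_flops_per_gpu)


# Hypothetical benchmark: 70B-parameter model, 240k tokens/s on 256 GPUs.
result = mfu(params=70e9, tokens_per_second=240_000, num_gpus=256)
print(f"MFU: {result:.1%}")
```

Running the same benchmark on each candidate provider, with the same model and batch configuration, gives a like-for-like number to set against the price per GPU-hour.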

Conclusion

In 2026, the competitive moat isn’t who has the most GPUs; it’s who can keep them fed and running. Reliability is deflationary. Investing in better storage, better networking, and better orchestration reduces the total cost of producing intelligence.