Spot Market Arbitrage for AI: The Economics of Fault Tolerance

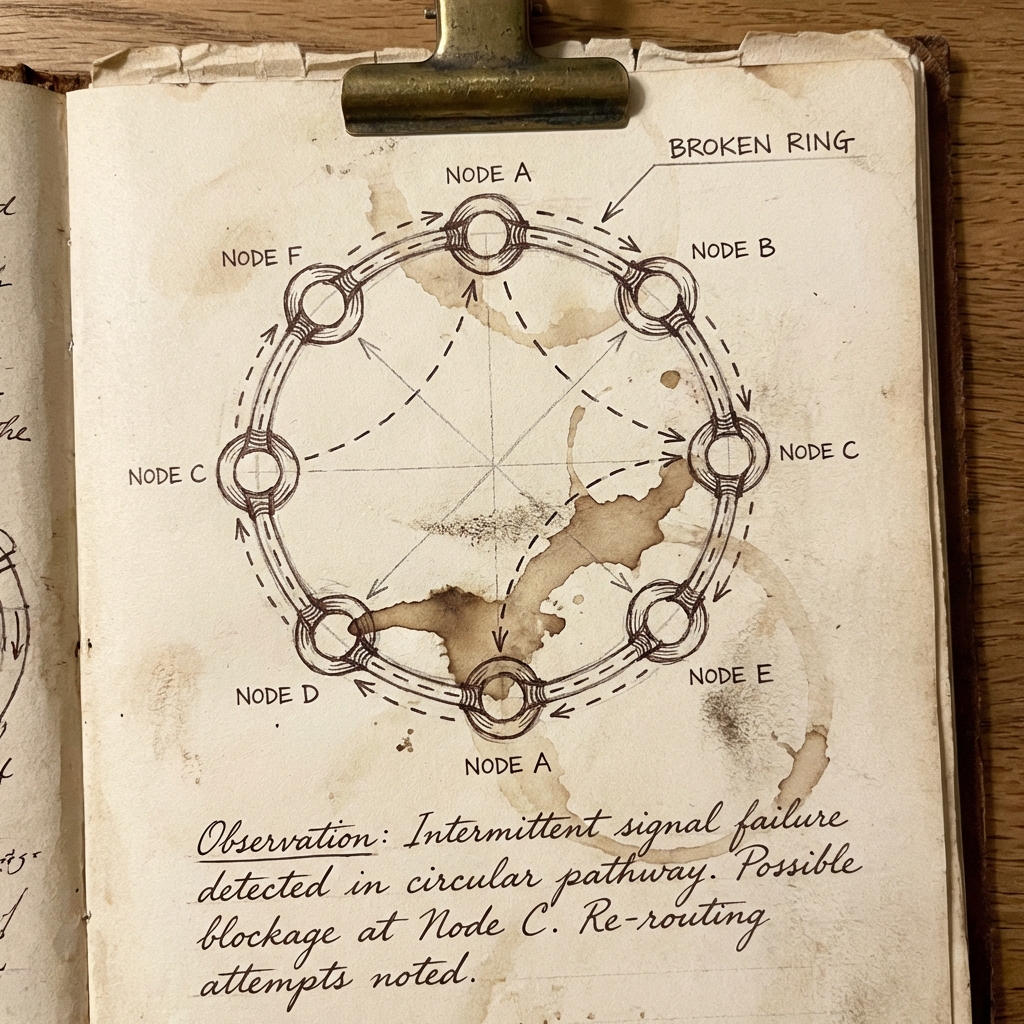

If your training loop isn't fault-tolerant, you're paying a 40% 'insurance tax' to your cloud provider. We look at the architectural cost of 30-second preemption notices.

When your model doesn't fit on one GPU, you're no longer just learning to code; you're learning physics. We dive deep into the primitives of NCCL, distributed collectives, and why the interconnect is the computer.

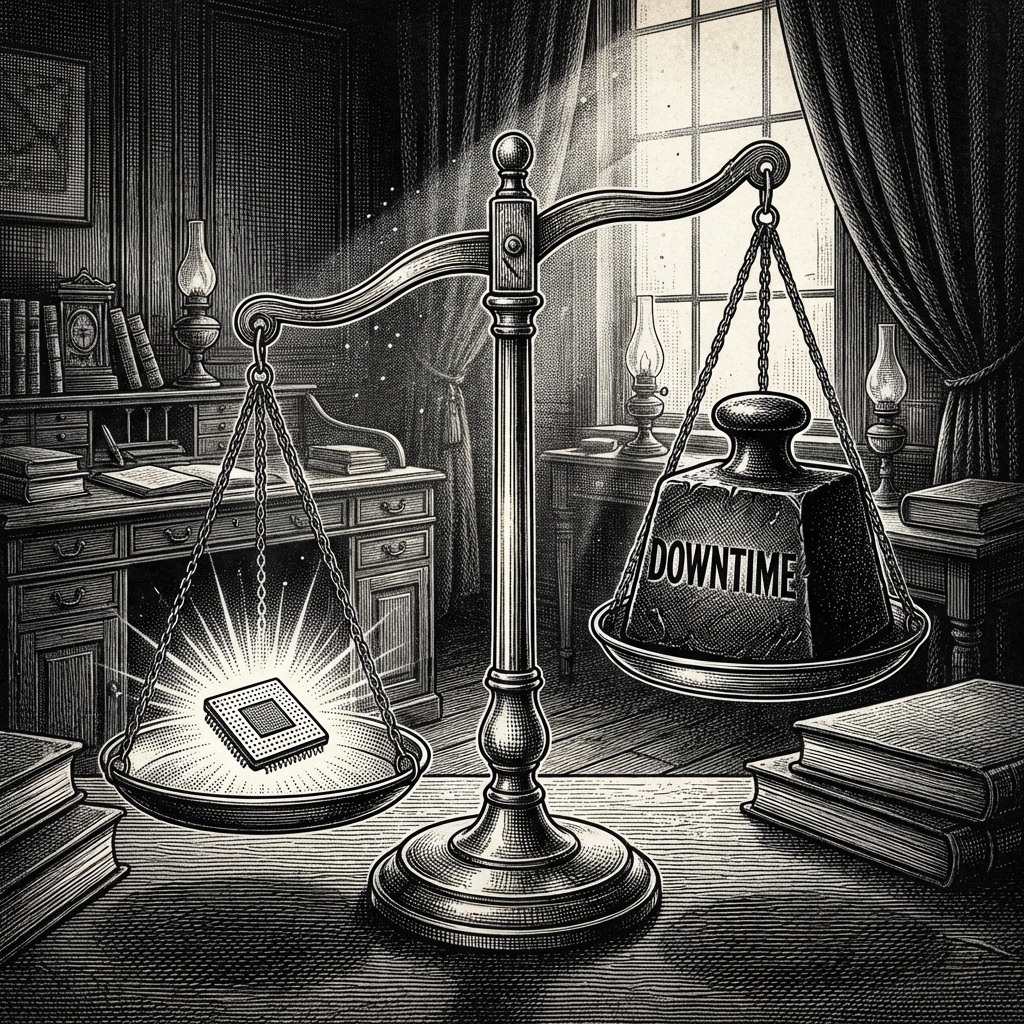

The AI industry is shifting from celebrating large compute budgets to hunting for efficiency. Your competitive advantage is no longer your GPU count, but your cost-per-inference.

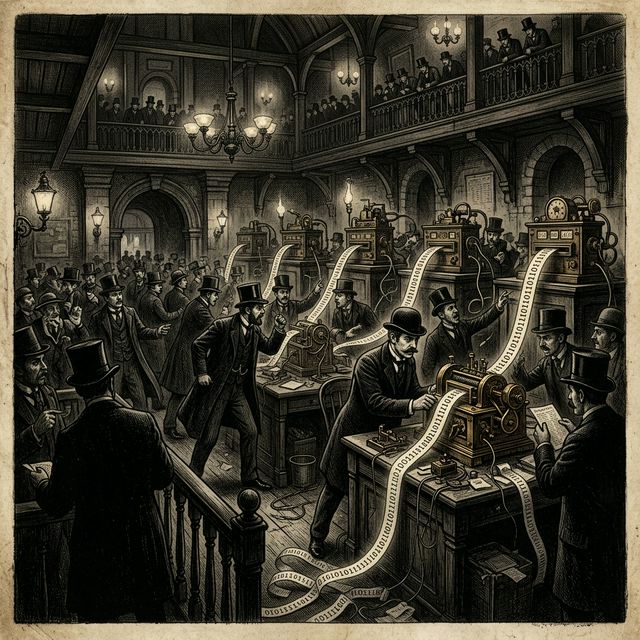

When standard tools report a healthy cluster but your training is stalled, the culprit is often a broken ring topology. We decode specific NCCL algorithms and debugging flags.

In the Llama 3 training run, Meta experienced 419 failures in 54 days. This post breaks down the unit economics of 'Badput' (the compute time lost to crashes) and why reliability is the only deflationary force in AI.

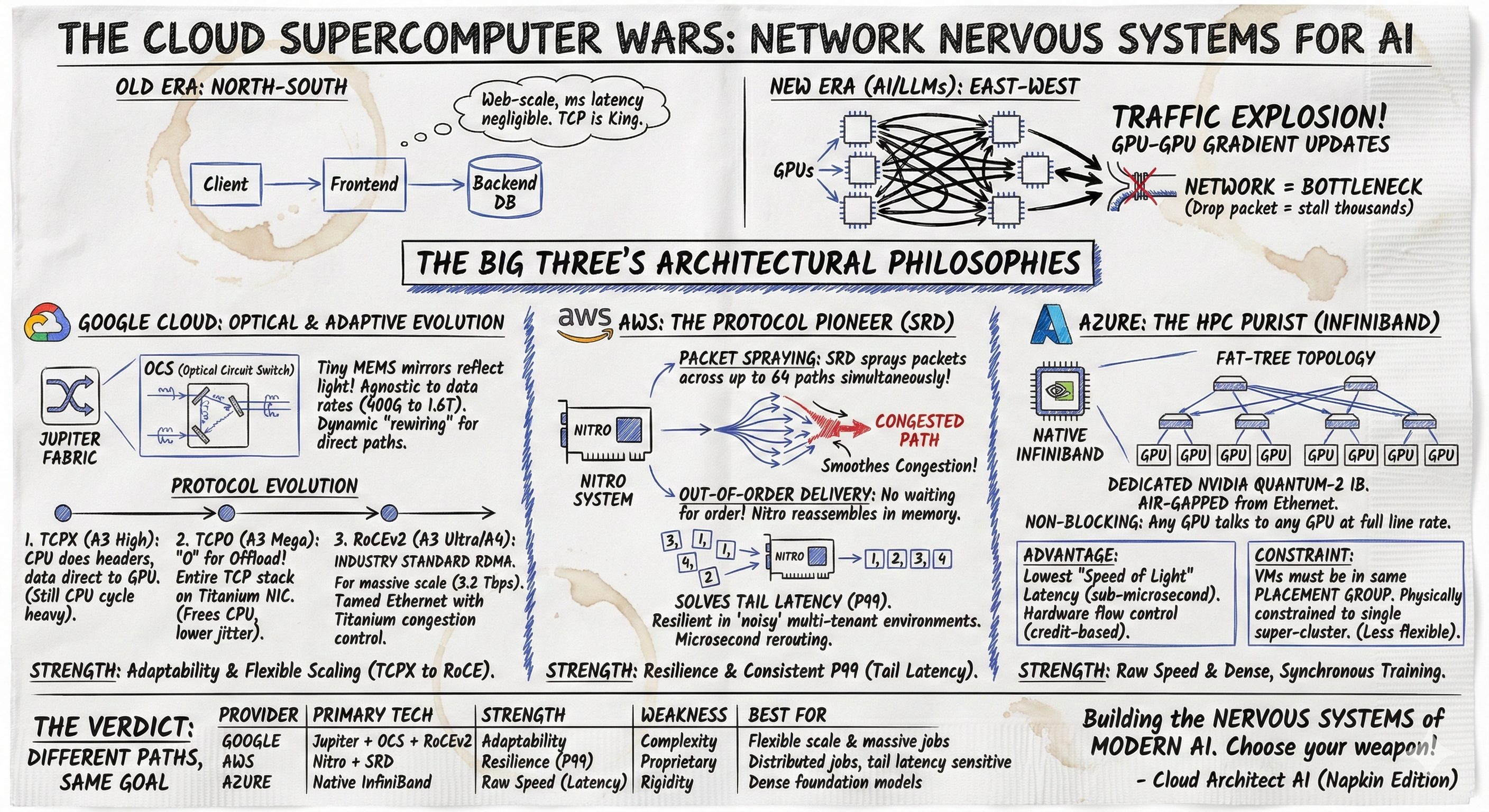

Generative AI has shifted data center traffic patterns, making network performance the new bottleneck for model training. This post contrasts how the "Big Three" cloud providers use distinct architectures to solve this challenge. We examine Google Cloud’s evolution from proprietary TCPX to standard RoCEv2 on its optical Jupiter fabric, AWS’s development of the Scalable Reliable Datagram (SRD) protocol to mitigate Ethernet congestion, and Azure’s adoption of native InfiniBand.