· Hands-on · 9 min read

Optimizing LangGraph Cycles: Stopping the Infinite Loop

Preventing infinite recursion loops in reasoning chains with robust circuit breakers.

The most dangerous feature in any modern Agentic AI framework is the while True loop.

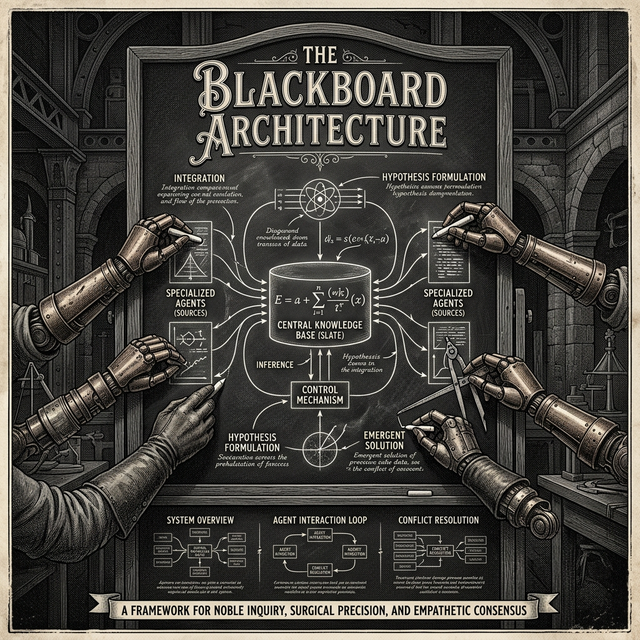

When you make the leap from a linear Execution Chain (Agent A -> Agent B -> Output) to a Cyclic Directed Graph (Agent A <-> Agent B <-> Tool), you unlock an entirely new class of emergent reasoning capabilities. You are no longer just piping data through models. You are creating a systemic, reflective entity capable of critique, self-correction, and autonomous iteration. It is incredibly powerful.

It is also an incredible way to obliterate your infrastructure budget in 45 minutes.

When you introduce cycles into an orchestrator like LangGraph, you suddenly have to grapple with the reality that Large Language Models are profoundly stubborn pieces of software. They are exceptionally good at finding a logical rut and digging it deeper.

If Agent A (the Planner) formulates a flawed assumption, and Agent B (the Executor) repeatedly tries and fails to execute that assumption using an external API tool, the two nodes will likely just keep throwing the same hallucinated arguments back and forth at each other.

- Agent A: “Call the GitHub API with this malformed query.”

- Agent B: “The API returned a 400 Bad Request error.”

- Agent A: “Understood. The API must clearly need this malformed query again to work properly.”

Suddenly, your underlying billing API registers a silent $500 charge for a single context-exploding query that ran iteratively for 200 cycles in the background.

In a production environment, you cannot afford to rely on the model itself to intuitively decide when it is mathematically time to stop trying. You cannot assume an LLM will possess graceful failure semantics. You have to engineer safety, timeouts, and state tracking directly into your cyclic graphs at the architectural level.

Here is exactly how we engineer safety into cyclic graphs in production to stop the unstoppable loop.

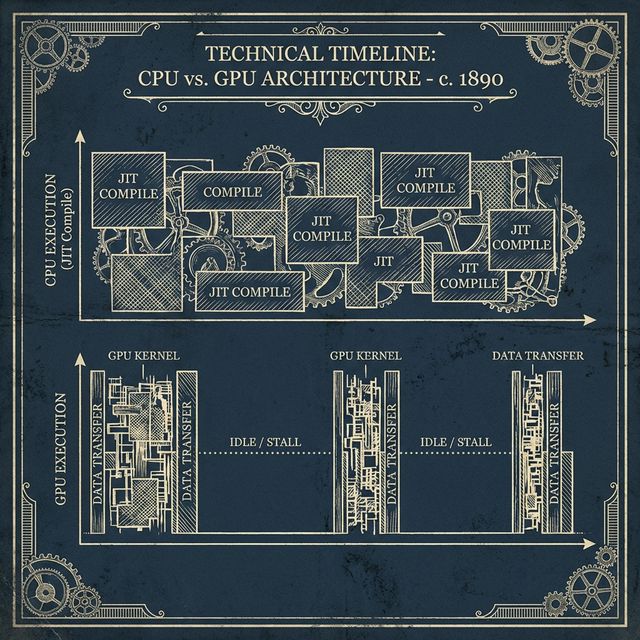

The Soft Landing: The “Time-To-Live” (TTL) Step Count

To its credit, LangGraph inherently understands the danger of infinite loops. By default, it provides a built-in recursion_limit in the invoke or astream graph methods. If the graph hits this limit (defaulting to 25 steps), the entire framework throws a hard Python exception.

The problem with recursion_limit is that a hard exception is not a user-experience strategy. It crashes the application loop, loses the state context, and returns an ugly 500 Server Error to whatever frontend is trying to talk to your orchestrator.

We do not want a hard crash. We want a soft landing. We want the agent to try a complex task for up to 5 discrete cycles. If it fundamentally fails to reach a solution within those 5 cycles, we want to gracefully degrade to a “Best Effort” fallback answer, or at minimum, issue a mathematically sound apology back to the client holding the WebSocket open.

To achieve this, we cannot rely on the framework to crash. We must inject a global, strictly monotonically increasing steps counter directly into the foundational Graph State itself.

from typing import TypedDict, Annotated

from langchain_core.messages import BaseMessage

from langgraph.graph.message import add_messages

# We define the core schema of our Graph's shared memory

class AgentState(TypedDict):

# Standard array of LLM conversation turns

messages: Annotated[list[BaseMessage], add_messages]

# Our critical, unhallucinable safety counter

steps: intEvery single node in your graph—whether it is a Tool Executor or the LLM Reasoner—must accept this state, increment the steps integer by 1, and return the updated state dict.

The magic happens in the Conditional Edge (the routing logic) that controls the cycle. Because the steps variable is firmly embedded in the runtime state, your router is entirely immune to LLM hallucinations or logical stubbornly.

def router(state: AgentState):

"""

The deterministic routing function.

It runs natively in Python, not inside the LLM prompt.

"""

# 1. First Priority: The Circuit Breaker

if state["steps"] > 5:

print(f"CRITICAL WARNING: Graph hit TTL limit {state['steps']}. Routing to fallback.")

return "final_answer_fallback_node"

# 2. Second Priority: Has the LLM successfully completed the goal?

last_message = state["messages"][-1]

if "FINAL ANSWER" in last_message.content:

return "end"

# 3. Default Path: Let the cycle continue to the next Node

return "continue_execution"This ensures that the graph always gracefully terminates into a predefined, safe terminal node rather than letting a Python unhandled exception tear down the pod.

The State Oscillation: The Semantic Cache Divergence Check

Sometimes the loop isn’t simply repeating the exact same characters indefinitely. Often, it is slightly worse: it is oscillating between states logically.

Imagine an Agent built to write comprehensive marketing copy about Apple computers.

- Cycle 1: The LLM output says, “I need to search the web for recent articles about ‘Apples’.”

- Cycle 2: The Search Tool returns Google results predominantly about the physical fruit, orchards, and recipes.

- Cycle 3: The LLM sees the search results, realizes the mistake, and reasons: “Wait, these findings are ambiguous and relate to agriculture. I need to search the web specifically for ‘Red Apples’.”

- Cycle 4: The Search Tool returns Google results about Honeycrisps and Fuji variants.

- Cycle 5: The LLM gets frustrated, decides it’s lost, and reverts its strategy: “I need to go back to the beginning. I need to search the web for ‘Apples’.”

The agent is visiting the exact same semantic and logical state twice. It is trapped in a non-productive oscillation.

To break this, we implement a Semantic Cache of recent tool invocations inside the AgentState.

Before your Graph is allowed to actually execute a pricey HTTP call to an external tool api (like a search engine or a database), you insert an intermediate Python check: Has this Graph explicitly called this exact tool, with these exact JSON arguments, within the last 3 turns?

If the answer is yes, you do not execute the HTTP call. Doing the exact same API call for the second time in 5 seconds and hoping for a novel JSON response is the definition of insanity.

Instead, you intercept the call, force a mock tool response that clearly says: "SYSTEM OVERRIDE: YOU HAVE ALREADY TRIED THIS ACTION AND IT FAILED. TRY A COMPLETELY DIFFERENT APPROACH INSTEAD." and feed it back into the LLM context. This artificial jolt of explicitly negative feedback is often the exact shock therapy an oscillating reasoning engine needs to break out of a rut and try a novel path.

The Adult In The Room: The “Critic” Node

The most profoundly robust, enterprise-grade method to break a nasty cycle is to alter the architecture of your graph to introduce a dedicated “Critic” or “Supervisor” node.

This specific node is not given permission to use tools. It does not do the heavy lifting of executing the business logic. It exists purely to observe the ongoing transaction.

Every 3 steps of the primary execution loop, you intentionally route the flow graph through the Critic Node. The Critic Node takes a smaller, significantly cheaper LLM (e.g., Gemini 1.5 Flash instead of Gemini 1.5 Pro) and uses a highly specific system prompt:

“Examine the last 3 steps of conversation and tool execution log between the Planner Agent and the Executor. Judge objectively: Is the agent actively making measurable progress toward the original user goal? Is it stuck in a loop? If there is no progress, dictate a completely new logical strategy. If it is hopelessly trapped, output TERMINATE.”

Worker agents inherently suffer from deep tunnel vision when trying to solve a complex coding or logical problem. They lose the forest for the trees.

The Critic node serves as the “Adult in the room.” By periodically stripping away the operational details and forcing a high-level, objective evaluation of the trajectory of the reasoning, the Critic maintains the meta-perspective that the worker nodes inevitably lose. If the Critic outputs TERMINATE, the Graph state is forced cleanly to the End node.

Observability: Debugging Complexity with LangSmith

A crucial realization when moving an Agentic Graph into production is that you absolutely cannot debug dynamic cyclic reasoning using traditional print() statements in a terminal.

The standard out of a Docker container rapidly becomes an indistinguishable, scrolling blur of repeated JSON payloads and raw LLM tokens. You will not be able to diagnose why a loop is occurring just by looking at the text dump.

You require a visual telemetry tracer designed specifically for arbitrary Directed Acyclic Graphs containing non-deterministic nodes.

Tools like LangSmith (or Google Cloud’s integrated Tracing suites for Vertex AI) are not optional niceties; they are mission-critical debugging infrastructure.

When you export your graph executions to LangSmith, you can visually inspect the actual “Run Tree”. If you log into your observability suite and you see a vertical execution stack that looks like a mesmerizing fractal pattern infinitely repeating: [Planner -> Tool Exec -> Critic -> Planner -> Tool Exec -> Critic...]

You immediately know you have a profound stability and loop problem in your prompt engineering.

The diagnostic secret here is to look closely at the inputs (the state payloads) to the repeated steps in the tracer UI. Ask yourself: Are the state payloads actively changing?

If the variable inputs feeding into the Planner node at Step 9 are semantically identical to the inputs that fed into the Planner node at Step 3, your global state isn’t actually mutating. Your system isn’t learning any new facts from its environment. It is just burning compute cycles iterating in a vacuum.

The Immutable Production Rule

If you take nothing else away, burn this rule into your team’s operational playbook:

Every cycle in a production graph must rely on a strictly monotonic condition to continue.

Something in your state object must measurably increase (a steps counter, a floating-point confidence score, the volume of extracted data) or strictly decrease (the available search space, the remaining token budget, the remaining retry attempts) with absolutely every single turn of the wheel.

If your graph executes a complete loop and the state remains entirely static, you must violently kill the execution thread.

Infinite loops used to just lock up a CPU core and force a restart. In the Agentic age, infinite loops actively drain your API budget and expose you to profound latency liabilities. We use the tools we have—TTL counters, Caching, and Critics—to tame the graph and handle them gracefully.