
The Storage Wall: Why Your GPUs are Waiting on GCS

Buying expensive GPUs to wait on cheap storage is an operational failure. We break down the math of 'Badput' and why high-performance I/O is actually a discount.

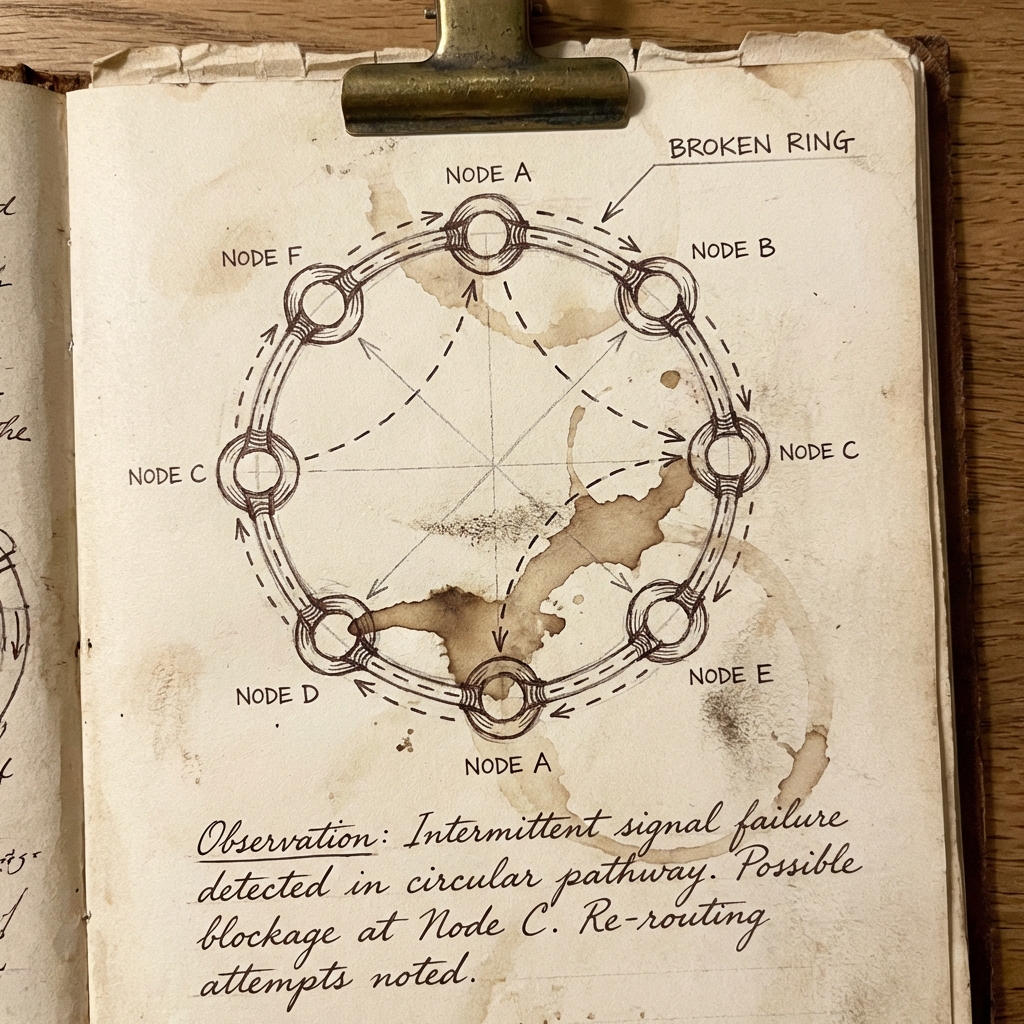

There is a specific kind of frustration reserved for anyone managing a GPU cluster. It’s that moment you check your dashboard and see a row of H100s—hardware that costs more than a mid-range Tesla—sitting at 15% utilization while the “I/O Wait” metric climbs.

It’s the silent killer of AI projects. We’ve obsessed over compute density and InfiniBand fabrics, but we’re still treating the storage layer like a standard web bucket. If you’re trying to feed a training loop from a basic object store, you’re basically trying to fill a swimming pool through a cocktail straw.

The Reality of “Badput”

In the systems world, we talk about Badput. It’s the delta between the compute you paid for and the compute that actually produced a gradient update.

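If your GPU shows as “active” 100% of the time, that doesn’t mean it’s doing work. The utilization figure in `nvidia-smi` only reports the fraction of time at least one kernel was running, so a chip can read 100% while spending most of its time waiting. To measure actual Badput, you need to look at three things: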

- GPU Utilization (the “Volatile GPU-Util” column): If `nvidia-smi` shows 100% but your power draw is idling at 150W (on a 700W chip), you’re not doing math. You’re waiting.

- The FUSE Bottleneck: If you’re using a basic mount, measure your kernel-level I/O wait. Every millisecond the CPU spends context-switching to handle a GCS request is a millisecond the GPU can sit starving.

- Samples per Second: This is your ground truth. If your throughput in a local dev container is 2x what it is in the cloud cluster, your storage is the thief.
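The power check and the samples-per-second comparison are easy to script. Below is a minimal Python sketch under stated assumptions: it parses the CSV printed by `nvidia-smi --query-gpu=index,utilization.gpu,power.draw,power.limit --format=csv,noheader,nounits`, flags GPUs that report high utilization at low power draw, and estimates Badput as the throughput shortfall against a local baseline. The function names and the 90% utilization / 30% power thresholds are hypothetical choices for illustration, not values from this article, and the baseline is assumed to be normalized to the same number of GPUs.

```python
import csv
import io

# Hypothetical thresholds (assumptions, tune for your hardware): "busy" means
# utilization >= 90%, "idling" means drawing < 30% of the power limit.
UTIL_BUSY_PCT = 90.0
POWER_IDLE_FRACTION = 0.30


def find_starved_gpus(smi_csv: str) -> list[int]:
    """Return indices of GPUs that report high utilization but low power draw.

    Expects rows of "index, utilization.gpu, power.draw, power.limit" as printed by
    nvidia-smi --query-gpu=index,utilization.gpu,power.draw,power.limit
               --format=csv,noheader,nounits
    """
    starved = []
    for row in csv.reader(io.StringIO(smi_csv)):
        if len(row) != 4:
            continue  # skip blank or malformed lines
        try:
            index, util, draw, limit = (float(field) for field in row)
        except ValueError:
            continue  # e.g. "[N/A]" on GPUs that do not report power
        if util >= UTIL_BUSY_PCT and draw < POWER_IDLE_FRACTION * limit:
            starved.append(int(index))
    return starved


def badput_fraction(cluster_samples_per_sec: float, baseline_samples_per_sec: float) -> float:
    """Share of throughput lost versus a baseline such as a local dev container."""
    return max(0.0, 1.0 - cluster_samples_per_sec / baseline_samples_per_sec)


# Example with made-up readings: GPU 0 matches the 150W-on-700W pattern.
readings = "0, 100, 150.00, 700.00\n1, 98, 640.00, 700.00\n"
print(find_starved_gpus(readings))     # [0]
print(badput_fraction(500.0, 1000.0))  # 0.5
```

A cluster running at half the local container’s samples per second, as in the 2x example above, comes out at 50% Badput.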

The Math of the “Cheap Storage” Tax

Most FinOps teams focus on the line item for “Storage Cost.” This is a mistake. The only metric that matters is the Cost per Productive Training Hour.
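Written as a formula (the symbols are introduced here for convenience), the metric divides everything you pay per hour by the fraction of that hour the GPUs spend on useful work:

```latex
C_{\text{productive}} = \frac{C_{\text{node}} + C_{\text{storage}}}{U}
```

Here $U$ is GPU utilization as a fraction (0.40 for 40%). Ideally it is the productive utilization discussed under Badput, since the raw `nvidia-smi` number can overstate it.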

Let’s look at the actual math for a standard 8-GPU node:

Scenario A: The “Budget” Stack

- Node Cost: $32.00 / hr

- Standard GCS Storage: $0.10 / hr

- GPU Utilization: 40% (Starved by I/O)

- Effective Cost per Hour: ($32.00 + $0.10) / 0.40 = $80.25

Scenario B: The “Optimized” Stack

- Node Cost: $32.00 / hr

- Hyperdisk ML + FUSE Cache: $1.50 / hr

- GPU Utilization: 95% (Saturated)

- Effective Cost per Hour: ($32.00 + $1.50) / 0.95 = $35.26

By spending 15x more on storage, you just cut your training bill by 56%. This isn’t just an optimization; it’s basic arithmetic. If you’re not saturating the chips, you’re just donating money to your cloud provider.
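The arithmetic above fits in a few lines of Python, which makes it easy to plug in your own node price and measured utilization. This is a sketch using the article’s illustrative figures; `break_even_storage_per_hr` is an added helper (not part of the original analysis) showing how far storage spend could rise before the faster stack stops paying off, assuming utilization holds at 95%.

```python
def cost_per_productive_hour(node_per_hr: float, storage_per_hr: float, gpu_utilization: float) -> float:
    """Total hourly spend divided by the fraction of the hour the GPUs do useful work."""
    if not 0.0 < gpu_utilization <= 1.0:
        raise ValueError("gpu_utilization must be in (0, 1]")
    return (node_per_hr + storage_per_hr) / gpu_utilization


def break_even_storage_per_hr(node_per_hr: float, target_cost: float, gpu_utilization: float) -> float:
    """Highest storage spend at which a stack at this utilization still costs target_cost."""
    return target_cost * gpu_utilization - node_per_hr


# Illustrative figures from the two scenarios above.
budget = cost_per_productive_hour(32.00, 0.10, 0.40)
optimized = cost_per_productive_hour(32.00, 1.50, 0.95)
savings = 1.0 - optimized / budget
ceiling = break_even_storage_per_hr(32.00, budget, 0.95)

print(f"Budget:    ${budget:.2f} per productive hour")     # $80.25
print(f"Optimized: ${optimized:.2f} per productive hour")  # $35.26
print(f"Savings:   {savings:.0%}")                         # 56%
print(f"Break-even storage spend at 95% utilization: ~${ceiling:.1f}/hr")  # ~$44.2/hr
```

Under these assumptions, the optimized stack would keep winning even if its storage cost roughly thirty times the $1.50/hr used here.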

Solving the Wall on GCP

We don’t live in a world where “Cloud Magic” just works. You have to build the bridge.

The most effective way to kill badput right now is a tiered approach: run Cloud Storage FUSE with its file cache enabled and point the cache directory at a Local NVMe SSD.

- The First Epoch: FUSE streams from GCS. It’s slow, and your GPUs will wait.

- The Rest of the Run: The local cache is now “warm.” As long as the dataset fits in the cache, your batch loader reads from local NVMe instead of going back over the network to GCS.
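The warm-cache behavior can be illustrated with a toy read-through cache. This Python sketch is not Cloud Storage FUSE’s implementation; it assumes LRU eviction, counts capacity in files rather than bytes, and uses a hypothetical `fetch_remote` function in place of a GCS read. It reports the cache hit rate for each epoch of a sequential scan.

```python
from collections import OrderedDict


class ReadThroughCache:
    """Toy model of a local-SSD file cache in front of object storage.

    Assumptions: capacity is counted in files rather than bytes, eviction is
    least-recently-used, and fetch_remote stands in for a slow GCS read.
    """

    def __init__(self, capacity, fetch_remote):
        self.capacity = capacity
        self.fetch_remote = fetch_remote
        self.entries = OrderedDict()  # file name -> cached bytes, least recently used first
        self.hits = 0
        self.misses = 0

    def read(self, name):
        if name in self.entries:
            self.entries.move_to_end(name)  # mark as most recently used
            self.hits += 1
            return self.entries[name]
        self.misses += 1
        data = self.fetch_remote(name)  # slow path: go over the network
        self.entries[name] = data
        if len(self.entries) > self.capacity:
            self.entries.popitem(last=False)  # evict the least recently used file
        return data


def hit_rate_per_epoch(num_files, capacity, epochs):
    """Run sequential epochs over a hypothetical dataset and report cache hit rates."""
    files = [f"shard-{i:05d}" for i in range(num_files)]
    cache = ReadThroughCache(capacity, fetch_remote=lambda name: name.encode())
    rates = []
    for _ in range(epochs):
        cache.hits = cache.misses = 0
        for name in files:
            cache.read(name)
        rates.append(cache.hits / num_files)
    return rates


print(hit_rate_per_epoch(num_files=100, capacity=200, epochs=3))  # [0.0, 1.0, 1.0]
print(hit_rate_per_epoch(num_files=100, capacity=50, epochs=3))   # [0.0, 0.0, 0.0]
```

The first case matches the two bullets: a cold first epoch, then every read served locally. The second shows the catch: a sequential scan over a dataset twice the cache size never hits under LRU. Shuffled access orders fare better than this worst case, but once the dataset outgrows the cache, a large share of reads go back to the network.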

For massive datasets where local SSDs aren’t enough, Hyperdisk ML is the answer. It lets you attach the same high-throughput volume to thousands of VMs simultaneously in read-only mode. In practice it behaves like a shared, read-only dataset volume: you load the data once, then attach it across the whole fleet.

Conclusion

Infrastructure engineering is about identifying the bottleneck and moving it until it becomes too expensive to move again. Right now, the bottleneck for most teams isn’t the model or the code; it’s the physical distance between their data and their silicon.

Stop chasing the “cheapest” storage. Calculate your productive ROI. If your GPUs are at 0% for more than a few seconds between batches, you have a storage problem, and the solution is to stop being cheap where it counts.
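To see those gaps from the training side, you can time how long each fetch of the next batch blocks the loop. This is a minimal, framework-agnostic Python sketch: `StallTimer` wraps any batch iterator, while `slow_loader` and the sleep-based training step are hypothetical stand-ins for a storage-bound loader and a GPU step.

```python
import time


class StallTimer:
    """Wrap a batch iterator and record how long each fetch blocks the loop."""

    def __init__(self, batches):
        self._it = iter(batches)
        self.waits = []  # seconds spent inside next() for each batch

    def __iter__(self):
        return self

    def __next__(self):
        start = time.perf_counter()
        batch = next(self._it)  # StopIteration here ends the caller's for-loop
        self.waits.append(time.perf_counter() - start)
        return batch


def slow_loader(num_batches, delay_s):
    """Hypothetical stand-in for a storage-bound data loader."""
    for i in range(num_batches):
        time.sleep(delay_s)  # simulated storage latency
        yield i


def measure_stalls(batches, step_s):
    """Return (fraction of wall time spent waiting for data, longest single wait)."""
    timer = StallTimer(batches)
    start = time.perf_counter()
    for _batch in timer:
        time.sleep(step_s)  # simulated training step
    elapsed = time.perf_counter() - start
    return sum(timer.waits) / elapsed, max(timer.waits, default=0.0)


stall_fraction, worst_wait = measure_stalls(slow_loader(5, 0.02), step_s=0.005)
print(f"waiting on data {stall_fraction:.0%} of the time, worst gap {worst_wait * 1000:.0f} ms")
```

With a real GPU framework, kernels launch asynchronously, so host-side wait time is an approximation: the GPU may still be draining queued work while the loop waits. Reading it alongside the `nvidia-smi` power numbers gives a more complete picture.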

#Infrastructure #GCP #GCS #GPU #ROI #Performance #Hyperdisk