
My Profiling Nightmare: The Warp Stall

A war story of chasing a 5ms latency spike to a single loose thread. How to read Nsight Systems and spot Warp Divergence.

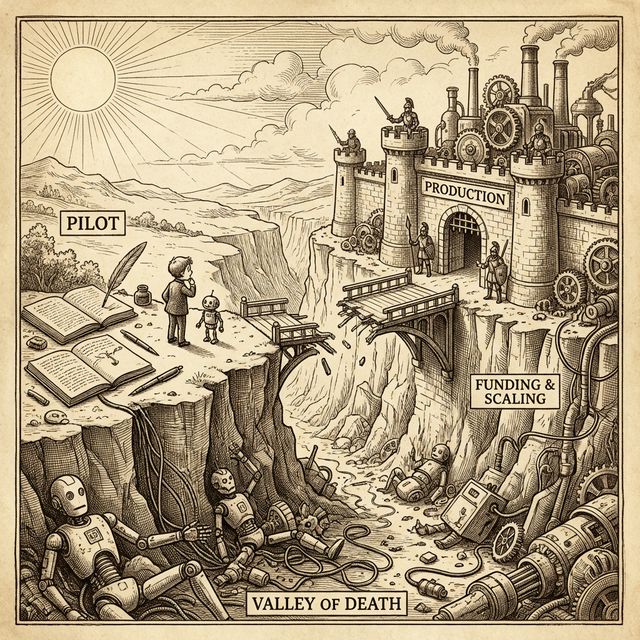

The 5ms Ghost

It started with a complaint: “Inference is jittery.” Our P99 latency was fine (20ms), but our P99.9 had random spikes to 25ms.

Concept Check: P99 vs P99.9

Imagine 1,000 requests.

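- P50 (Median): How fast the typical request is. Half of the requests are faster, half are slower.

- P99: How fast the 990th fastest request is. Only the slowest 1% (10 requests) can take longer.

- P99.9: How fast the 999th fastest request is. Only 1 request in 1,000 is slower. This is the “tail latency” that kills user experience.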

In high-frequency trading or real-time voice agents, a 5ms delay at P99.9 is the difference between a smooth conversation and a robot stutter.

We looked at the logs. Nothing. We looked at the network. Clean. So we did what must be done: We attached nsys (Nsight Systems).

Reading the Flame

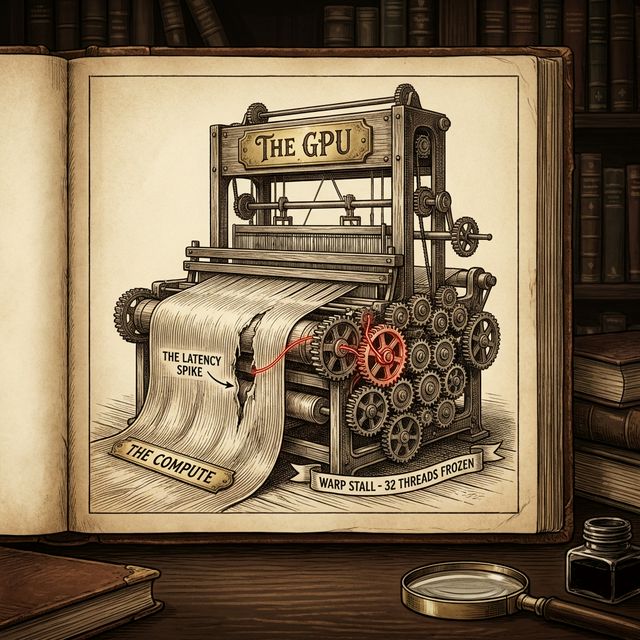

Nsight Systems (nsys) is an MRI machine for your code. It doesn’t just show how long a function took; it lays out a timeline of what the CPU threads, CUDA API calls, memory copies, and GPU kernels were doing, down to the microsecond.

If you haven’t opened an Nsight Systems report before, it looks like a digital city on fire: thousands of tiny colored blocks, many of them kernels (small functions that run on the GPU).

I zoomed in on the spike. There it was: a “blue” compute kernel that should take 10 µs was taking 5,000 µs, the full 5 ms. Its name: fused_attention_kernel_v2.

But why? The kernel code hadn’t changed. I hovered over the block. “Warp Stall Reason: Pipeline Busy”

The Warp and the Weft (SIMT Architecture)

To understand why this is bad, you need to understand how a GPU thinks. A GPU executes threads in bundles called Warps (32 threads on NVIDIA GPUs).

The Analogy: The Marching Band

Think of a CPU as a Ferrari: fast, agile, but can only carry 2 people. Think of a GPU as a Marching Band (a Warp).

- Lockstep: Everyone marches at the exact same tempo.

- Single Instruction: If the conductor says “Step Left,” all 32 musicians step left.

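This is SIMT (Single Instruction, Multiple Threads). Conceptually, there is only one “Brain” (Instruction Pointer) for 32 “Bodies” (Threads). Since the Volta generation each thread has its own program counter, but the warp still issues one instruction at a time to whichever threads are active.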

The Tragedy of Divergence

What happens if the code says: if (musician_is_wearing_hat) { step_right() } else { step_left() }?

In a CPU, each person decides independently. In a GPU “Marching Band,” this is a disaster called Warp Divergence.

The hardware cannot let half the band step left while the other half steps right at the same moment. Instead, it serializes the two paths, and each group stalls while the other plays:

- Freeze the 30 musicians without hats.

- Command the 2 hat-wearers to “Step Right.” (The other 30 just stand there, wasting time.)

- Freeze the 2 hat-wearers.

- Command the 30 others to “Step Left.”

- Regroup everyone to march forward again.

In our code, a single thread hitting a “Safety Check” forced the entire warp to stop and wait while it finished a complex logging operation. The warp’s effective lane utilization dropped to near zero, while the kernel’s duration skyrocketed.

The Ghost in Nsight Compute

If you profile the kernel in Nsight Compute and open the “Warp State Statistics” section, you will see a massive bar for the stall reason “Branch Resolving” (warps waiting for a branch target to be computed).

This is the smoking gun. The scheduler is stuck waiting for the one divergent thread (the musician with the hat) to finish his solo before the band can play again.

The Fix

We traced the divergence to a “safety check” added in the last PR:

```cuda
if (token_id == PAD_TOKEN) {
    // Complex logging logic
}
```

A single thread hit a PAD_TOKEN in the middle of a dense compute block. It triggered a complex logging path that deserialized the tensor. The other 31 threads in the warp just sat there, waiting.

We moved the check to the CPU host. The 5ms spike vanished.

Conclusion

- Never print from a CUDA kernel.

- Avoid divergent branches in hot loops. A branch that every thread in a warp takes the same way is cheap.

- Trust nsys. It sees what print debugging cannot.