
Speculative Decoding: Cheating Physics for Latency

Using a 'Draft' model costs about 10% more VRAM but can cut latency roughly in half. Here are the mechanics of the gamble.

Latency is the absolute enemy of fluency in generative AI.

When you sit down to chat with a Large Language Model, or when your application relies on a streaming response to feel interactive, two metrics rule your life. The first is “Time to First Token” (TTFT), which is basically your “ping” to the model. The second, and far more critical for the user experience, is “Inter-Token Latency” (ITL).

If the model stutters, generating text slower than a human can read, the illusion of intelligence breaks instantly. Humans read at roughly 3 to 5 words per second. To make an AI feel conversational, we need it to generate text consistently at 10 to 15 tokens per second. Anything slower feels like watching a loading bar over a dial-up modem.

But here is the brutal physics problem we are all dealing with in production: massive models are inherently, unavoidably slow.

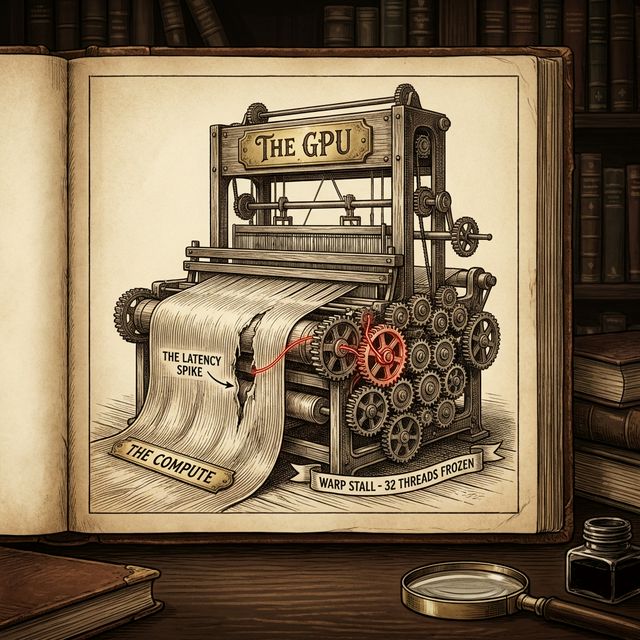

If you ask a 70-billion-parameter model to generate a single token, the GPU cannot just “think” about that token. At 16-bit precision (2 bytes per parameter), it has to physically move 140 gigabytes of model weights from its High Bandwidth Memory (HBM) into the compute cores (the Streaming Multiprocessors, or SMs) for every single token it generates. Let that sink in. To generate the word “Hello,” we moved 140GB of memory. To generate the word “World,” we moved another 140GB.
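To put numbers on this, here is a back-of-the-envelope sketch. It assumes 2 bytes per parameter and uses 3.35 TB/s as an illustrative HBM bandwidth (roughly an H100 SXM spec-sheet figure). In practice a 140GB model has to be sharded across at least two 80GB GPUs, so treat the result as an order-of-magnitude floor, not a benchmark. The function name is hypothetical.

```python
def min_ms_per_token(params: float, bytes_per_param: float, hbm_bytes_per_s: float) -> float:
    """Floor on decode latency if every token requires streaming all weights once.

    Ignores KV-cache reads, compute time and communication, so real latency is higher.
    """
    weight_bytes = params * bytes_per_param
    return weight_bytes / hbm_bytes_per_s * 1e3  # seconds -> milliseconds


PARAMS = 70e9          # 70B-parameter model
BYTES_PER_PARAM = 2    # FP16/BF16
HBM_BW = 3.35e12       # assumption: illustrative H100-class bandwidth in bytes/s

weights_gb = PARAMS * BYTES_PER_PARAM / 1e9
ms = min_ms_per_token(PARAMS, BYTES_PER_PARAM, HBM_BW)
print(f"{weights_gb:.0f} GB per token -> at least {ms:.1f} ms/token (<= {1e3 / ms:.0f} tokens/s)")
```

That floor comes purely from moving weights; KV-cache reads, kernel launches, and cross-GPU communication all sit on top of it.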

In this scenario, the model is not compute-bound. It is memory-bound. The insanely fast arithmetic logic units on your $30,000 NVIDIA H100s are spending the majority of their time idling, twiddling their silicon thumbs, waiting for data to arrive from the memory bus.

We can’t change the speed of light, and memory bandwidth on hardware is growing much slower than the size of our models. So, how do we fix it? We don’t build faster memory. We cheat.

We cheat using a technique called Speculative Decoding.

Speculative Decoding is basically a high-stakes poker game played between a tiny “Draft” model and a massive “Target” model. And the beauty of this architecture is that the house can’t lose on quality: the math guarantees the output follows exactly the distribution the Target model would produce on its own. Let’s break down how this actually works under the hood.

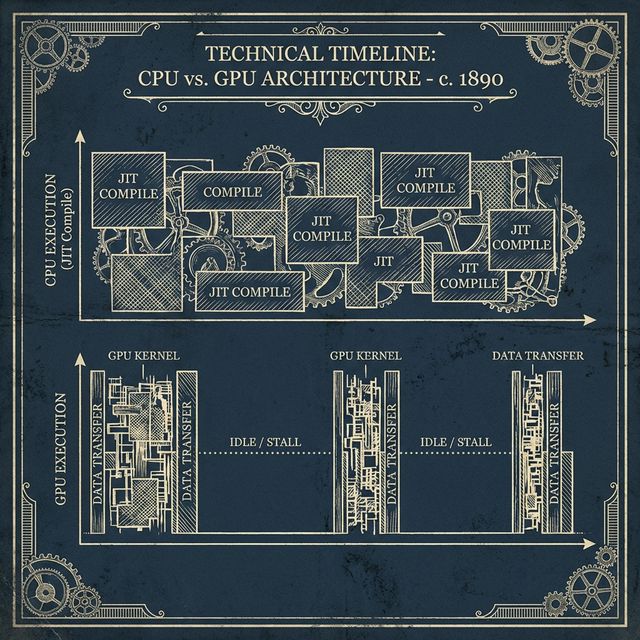

The Core Mechanic: The Serial vs. Parallel Trap

To understand why Speculative Decoding is a breakthrough, you first have to understand the trap of autoregressive generation.

Normally, generating text with an LLM is a strictly serial process. You generate Token 1. You feed Token 1 back in to generate Token 2. You feed Tokens 1 and 2 back in to generate Token 3.

You absolutely cannot compute Token 3 until you know exactly what Token 2 is. It is a fundamental dependency chain. This is why you are bound by memory bandwidth; you have to do a full pass of the model weights for every single link in that chain.

Speculative Decoding breaks this strict dependency chain by asking a beautifully simple question: What if we just guess?

To do this, we load two entirely separate models into our GPU VRAM:

- The Target Model: Let’s say, Llama-3-70B. We will call this “The Genius.” It is slow, massive, and highly accurate.

- The Draft Model: Let’s say, Llama-3-8B. We will call this “The Intern.” It is small, incredibly fast, but prone to making mistakes.

Here is what the execution loop looks like in practice, whether you run it on Google Kubernetes Engine (GKE) with an inference framework like vLLM or on any other serving stack.

Step 1: The Draft Phase

Instead of asking the Genius to generate the next token, we hand the context to the Intern. We ask the Intern to quickly guess what the next five tokens will be.

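Because the Intern is small (8B parameters), each of its forward passes moves only a fraction of the weight bytes the Genius has to move, so it runs blazing fast. It still drafts serially, one token at a time, but each step is cheap. Within milliseconds, the Intern spits out a guess: [The, quick, brown, fox, jumped].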
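The draft loop itself is ordinary autoregressive generation. The sketch below uses a hypothetical bigram table as a stand-in for the Intern (a real drafter runs a full small transformer per step) and drafts greedily. A sampling setup would instead sample each token and keep the draft probabilities for the verification step.

```python
from typing import Callable, Dict, List, Tuple

Dist = Dict[str, float]  # token -> probability

# Hypothetical toy "Intern": next-token distribution keyed on the previous token.
INTERN_BIGRAMS: Dict[str, Dist] = {
    "<prompt>": {"The": 0.8, "A": 0.2},
    "The": {"quick": 0.6, "lazy": 0.4},
    "quick": {"brown": 0.9, "red": 0.1},
    "brown": {"fox": 0.95, "dog": 0.05},
    "fox": {"jumped": 0.55, "jumps": 0.45},
}


def toy_intern(seq: List[str]) -> Dist:
    return INTERN_BIGRAMS.get(seq[-1], {"<eos>": 1.0})


def draft_greedy(model: Callable[[List[str]], Dist], context: List[str], k: int) -> Tuple[List[str], int]:
    """Draft k tokens greedily; returns the guesses and the number of forward passes used."""
    seq = list(context)
    guesses: List[str] = []
    passes = 0
    for _ in range(k):
        dist = model(seq)                          # one cheap forward pass of the draft model
        passes += 1
        tok = max(dist, key=lambda t: dist[t])     # greedy pick
        guesses.append(tok)
        seq.append(tok)                            # the next guess is conditioned on this one
    return guesses, passes


guesses, passes = draft_greedy(toy_intern, ["<prompt>"], k=5)
print(guesses, passes)  # ['The', 'quick', 'brown', 'fox', 'jumped'] 5
```

Note the pass counter: five guesses still cost five serial Draft passes. The bet is that five small passes are much cheaper than five large ones.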

Step 2: The Verification Phase

Now, we hand that sequence of five tokens to the Genius.

Here is where the magic happens. We do not ask the Genius to generate the next five tokens one by one. We ask the Genius to take those five guessed tokens and score all of them in parallel, in a single forward pass.

Wait, didn’t I just say text generation is strictly serial?

Generation is serial. Verification is parallel.

When you provide the full sequence [The, quick, brown, fox, jumped] to the big model, the Transformer processes that entire sequence at once, exactly as it does when it reads your prompt. Thanks to causal masking, the Attention mechanism lets each position see only the tokens before it, so a single forward pass produces the model’s next-token distribution at every position in the sequence.

The Genius evaluates the Intern’s work comprehensively in a single forward pass:

- Given the prompt, was “The” the correct next token? Yes.

- Given the prompt + “The”, was “quick” the correct next token? Yes.

- Given the prompt + “The quick”, was “brown” the correct next token? Yes.

- Given the prompt + “The quick brown”, was “fox” the correct next token? Yes.

- Given the prompt + “The quick brown fox”, was “jumped” the correct next token? No. The Genius realizes that based on its immense training data, “jumps” was a much better continuation than the past-tense “jumped”.

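Because the first four tokens were confirmed accurate by the Target model, we “accept” those four tokens into our final output stream. We also keep the Genius’s own choice for the fifth slot, “jumps”, because its distribution at that position was already computed in the same pass.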
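Verification can be sketched with a toy stand-in for the Genius. The bigram table below is hypothetical and mimics only the property that matters here: one call over the whole sequence returns a next-token distribution for every position. This is the greedy (temperature 0) form of the check; sampled decoding needs the rejection rule covered in the next section.

```python
from typing import Dict, List, Tuple

Dist = Dict[str, float]  # token -> probability

# Hypothetical toy "Genius": a bigram table standing in for the 70B model.
GENIUS_BIGRAMS: Dict[str, Dist] = {
    "<prompt>": {"The": 0.9, "A": 0.1},
    "The": {"quick": 0.7, "lazy": 0.3},
    "quick": {"brown": 0.95, "red": 0.05},
    "brown": {"fox": 0.99, "dog": 0.01},
    "fox": {"jumps": 0.8, "jumped": 0.2},
    "jumps": {"over": 1.0},
    "jumped": {"over": 1.0},
}


def genius_forward(seq: List[str]) -> List[Dist]:
    """One 'forward pass': the next-token distribution at every position,
    each depending only on tokens up to that position (like causal masking)."""
    return [GENIUS_BIGRAMS.get(tok, {"<eos>": 1.0}) for tok in seq]


def top(dist: Dist) -> str:
    return max(dist, key=lambda t: dist[t])


def verify_greedy(context: List[str], guesses: List[str]) -> Tuple[List[str], int]:
    """Accept guesses while they match the Genius's top choice; at the first
    mismatch, keep the Genius's token instead. Returns (new tokens, #accepted)."""
    dists = genius_forward(context + guesses)       # a single Target pass
    out: List[str] = []
    for i, guess in enumerate(guesses):
        best = top(dists[len(context) - 1 + i])     # prediction for guess i's slot
        if guess != best:
            return out + [best], i
        out.append(guess)
    return out + [top(dists[-1])], len(guesses)     # all accepted: bonus token


print(verify_greedy(["<prompt>"], ["The", "quick", "brown", "fox", "jumped"]))
# (['The', 'quick', 'brown', 'fox', 'jumps'], 4)
```

Either way, one Target pass always yields at least one token, which is why a wrong guess never leaves you worse off than plain decoding in terms of tokens per 70B pass.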

Think about the math of what just happened. We produced 4 confirmed tokens (5, counting the Genius’s own correction, “jumps”) for the cost of just 1 forward pass of the massive 70B model, plus five cheap passes of the 8B model. That is up to a 4x reduction in 70B passes for this step without sacrificing a single drop of quality; the end-to-end speedup is lower once you pay for the drafting and for rounds where guesses get rejected.
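The tally above counts 70B passes. Here is a back-of-the-envelope sketch that also charges for the drafting. It assumes, as a simplification, that a forward pass costs time in proportion to the weight bytes it reads (so one 8B pass costs 8/70 of a 70B pass) and that scoring five positions costs the same as scoring one, which is the memory-bound assumption. The function name is hypothetical.

```python
def step_speedup(tokens_per_step: int, k: int, draft_cost: float) -> float:
    """Speedup of one speculation step vs. plain decoding with the Target.

    Cost unit: one Target forward pass (assumed the same whether it scores
    1 or k positions). draft_cost is one Draft pass in those units.
    """
    step_cost = k * draft_cost + 1.0       # k serial draft passes + 1 verification pass
    return tokens_per_step / step_cost      # baseline: 1 token per Target pass


c = 8 / 70  # assumption: Draft pass cost scales with parameter count
print(f"{step_speedup(4, 5, c):.2f}x")  # 2.55x, counting only the 4 accepted guesses
print(f"{step_speedup(5, 5, c):.2f}x")  # 3.18x, including the Target's correction token
```

Under those assumptions this particular round is worth roughly 2.5x to 3.2x, not the raw token count, because the five Draft passes are cheap but not free.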

The Rejection Sampling Algorithm

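It is important to note that this verification isn’t just a simple binary “Yes/No” check against the Target’s top token. That check works for greedy decoding, but as soon as you sample (for example, with a temperature above zero), it would distort the output because it ignores the probability distributions.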

To ensure that the final output from a Speculative Decoding setup follows exactly the same probability distribution as the output you would get if you just ran the Target model normally, we use a technique called Rejection Sampling.

When the Target model evaluates the Draft model’s tokens, it compares the two models’ probability distributions (the softmax of their logits) for each drafted token.

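If p_target(token) >= p_draft(token), we accept the token immediately. The Genius agrees the Intern made a highly logical choice. If p_target(token) < p_draft(token), we don’t automatically throw it out. We accept it with probability p_target/p_draft, which means we reject it with probability 1 - (p_target/p_draft). On the first rejection, we discard the remaining guesses and sample a replacement token from the normalized leftover distribution max(0, p_target - p_draft), which puts probability mass back exactly where the Intern underweighted it.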

This mathematical trick guarantees that even though we are taking shortcuts by guessing with a smaller model, the final stream of text is drawn from the exact same statistical distribution as the Genius model. You aren’t getting a “worse” or “dumbed down” response. You are getting responses of exactly the Genius’s quality, but you are getting them up to 3x or 4x faster.
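Here is a minimal sketch of that acceptance rule, including the resampling step on rejection: draw the replacement from the normalized max(0, p_target - p_draft). The distributions are made-up numbers for a single drafted position, and all names are hypothetical. The simulation at the end checks the guarantee empirically: the Intern prefers “jumped”, yet the token that comes out follows the Genius’s distribution.

```python
import random
from typing import Dict, List

Dist = Dict[str, float]  # token -> probability


def sample(dist: Dist, rng: random.Random) -> str:
    return rng.choices(list(dist), weights=list(dist.values()))[0]


def residual(p_target: Dist, p_draft: Dist) -> Dist:
    """Normalized max(0, p_target - p_draft): where to resample after a rejection."""
    r = {t: max(0.0, p - p_draft.get(t, 0.0)) for t, p in p_target.items()}
    z = sum(r.values())
    return {t: v / z for t, v in r.items()}


def verify_sampled(guesses: List[str], draft_dists: List[Dist],
                   target_dists: List[Dist], rng: random.Random) -> List[str]:
    """Accept/reject each guess in order; stop at the first rejection.

    target_dists holds len(guesses) + 1 distributions from ONE Target pass;
    the last one yields a bonus token when every guess is accepted.
    """
    out: List[str] = []
    for tok, q, p in zip(guesses, draft_dists, target_dists):
        # Always accept if p >= q; otherwise accept with probability p / q.
        if rng.random() < min(1.0, p.get(tok, 0.0) / q[tok]):
            out.append(tok)
            continue
        out.append(sample(residual(p, q), rng))      # corrected token
        return out
    out.append(sample(target_dists[len(guesses)], rng))  # bonus token
    return out


# Toy check with made-up distributions for a single drafted position.
rng = random.Random(0)
q = {"jumped": 0.7, "jumps": 0.3}   # Intern
p = {"jumped": 0.4, "jumps": 0.6}   # Genius
N = 100_000
hits = 0
for _ in range(N):
    guess = sample(q, rng)
    first = verify_sampled([guess], [q], [p, {"over": 1.0}], rng)[0]
    hits += first == "jumped"
print(f"P(jumped) = {hits / N:.3f}")  # close to the Genius's 0.4, not the Intern's 0.7
```

The residual step is what makes the guarantee hold: without it, rejected probability mass would simply vanish and the output would drift toward whatever the Intern happened to like.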

The Trade-Off: Trading VRAM for Latency

As with everything in systems engineering, there is no such thing as a free lunch. Speculative Decoding buys you incredible latency improvements, but the currency you pay with is VRAM.

You now have to hold both models in your GPU memory simultaneously.

- Llama-3-70B loaded in FP8 precision requires roughly 70GB of VRAM for its weights.

- Llama-3-8B loaded in FP8 precision requires roughly 8GB of VRAM for its weights.
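The tax on the weights themselves is simple arithmetic. This sketch uses nominal parameter counts and 1 byte per parameter for FP8, and it deliberately leaves out the KV cache and runtime overhead. The helper name is hypothetical.

```python
def weight_gb(params: float, bytes_per_param: float) -> float:
    """Weight memory only: excludes KV cache, activations and framework overhead."""
    return params * bytes_per_param / 1e9


FP8 = 1.0  # bytes per parameter
target = weight_gb(70e9, FP8)
draft = weight_gb(8e9, FP8)
print(f"target {target:.0f} GB, draft {draft:.0f} GB, overhead {draft / target:.1%}")
# target 70 GB, draft 8 GB, overhead 11.4%
```

That ratio is where the "about 10% more VRAM" figure comes from.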

You are paying an “8GB Tax” (plus a little more for the Intern’s own KV cache) on your expensive infrastructure just to host the Intern. In a world where VRAM is consistently the scarcest and most expensive resource in the data center, is this tax actually worth paying?

Absolutely.

Look back at the physics problem. Memory bandwidth is the bottleneck during generation, not compute capacity. The compute units (the SMs and Tensor Cores) on that H100 are sitting idle most of the time.

Speculative Decoding effectively takes those idle compute cycles and puts them to work verifying the draft batch. You are actively trading “Spare Compute” and a “Small amount of Extra VRAM” to solve your “Memory Bandwidth” latency problem. In almost every user-facing application, reducing Inter-Token Latency is worth far more than the 8GB of VRAM you sacrificed. (Speculation does not shorten the prompt-processing step that sets Time-to-First-Token; its win is in every token after that.)
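A rough way to see why verification is nearly free is arithmetic intensity: FLOPs performed per byte read from memory. This sketch uses the common estimate of about 2 FLOPs per parameter per token and, as assumptions, illustrative H100-class figures of roughly 989 TFLOP/s (dense BF16) and 3.35 TB/s. It ignores attention FLOPs and KV-cache traffic, and the names are hypothetical.

```python
def flops_per_byte(tokens_per_pass: int, bytes_per_param: float) -> float:
    """Arithmetic intensity of one decode pass of a dense model.

    ~2 FLOPs per parameter per scored position; the weight bytes are read
    once per pass no matter how many positions are scored.
    """
    return 2.0 * tokens_per_pass / bytes_per_param


# Assumption: illustrative H100-class figures (FLOP/s and bytes/s).
ridge = 989e12 / 3.35e12   # intensity needed to become compute-bound (~295 FLOP/byte)

for n in (1, 5):           # plain decoding vs. verifying five drafted tokens
    fpb = flops_per_byte(n, bytes_per_param=2)
    print(f"{n} position(s): {fpb:.1f} FLOP/byte, {fpb / ridge:.1%} of the compute-bound threshold")
```

Scoring five positions instead of one multiplies the useful work per byte by five, yet it stays far below the point where the Tensor Cores would become the bottleneck.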

The Evolution: Medusa, EAGLE, and Beyond

The industry is moving so fast that holding two entirely separate models in memory is already starting to look like old-school thinking.

The requirement that the Draft and Target models share a tokenizer is painful. Llama-3-8B and Llama-3-70B happen to share one, but if you pair models whose vocabularies differ, mapping the token IDs back and forth during the verification phase can destroy your latency gains.

The cutting-edge solution is to stop using a separate model entirely.

Architectures like Medusa solve this by adding extra “heads” directly to the main Target model itself. The model’s original output head still predicts the next token [t+1], while each Medusa head is trained to predict one position further ahead ([t+2], [t+3], ...) from the same hidden state. It is as if the model is getting ahead of itself, drafting its own future tokens with a lightweight internal mechanism, and then verifying them on its next forward pass through its own full stack of layers.
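A toy sketch of the Medusa-style drafting step, in plain Python with random weights and made-up sizes. Every name here is hypothetical, and each head is simplified to a single linear layer (the Medusa paper uses a small residual feed-forward block per head). The point is structural: every proposal is read off the same final hidden state, so drafting costs no extra passes through the model.

```python
import random
from typing import List

Vector = List[float]
Matrix = List[List[float]]  # one row of weights per vocabulary entry

D_MODEL, VOCAB, N_MEDUSA = 8, 20, 3     # toy sizes, purely illustrative
rng = random.Random(0)


def random_head() -> Matrix:
    return [[rng.gauss(0.0, 1.0) for _ in range(D_MODEL)] for _ in range(VOCAB)]


lm_head = random_head()                                  # original head: token t+1
medusa_heads = [random_head() for _ in range(N_MEDUSA)]  # head i: token t+2+i


def logits(head: Matrix, h: Vector) -> Vector:
    return [sum(w * x for w, x in zip(row, h)) for row in head]


def argmax(xs: Vector) -> int:
    return max(range(len(xs)), key=lambda i: xs[i])


def propose(h: Vector) -> List[int]:
    """Greedy candidates for positions t+1 .. t+1+N_MEDUSA from ONE hidden state."""
    return [argmax(logits(head, h)) for head in [lm_head] + medusa_heads]


hidden = [rng.gauss(0.0, 1.0) for _ in range(D_MODEL)]  # stand-in for the last hidden state
draft = propose(hidden)
print(draft)  # 4 token ids: the next token plus 3 speculative ones
```

In real Medusa, several candidates per head are typically arranged into a tree and checked by the full model in its next forward pass, using the same accept-the-matching-prefix logic as the two-model setup.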

Similarly, the EAGLE architecture uses a very lightweight drafting layer that sits on top of the frozen target model and drafts from its internal hidden-state features rather than from raw tokens.

The goal for all of these approaches remains exactly the same: keep the HBM bus from being the bottleneck that ruins your user experience.

Designing Infrastructure for Speculation

If you are an infrastructure architect or a DevOps engineer building an inference stack today, you cannot ignore Speculative Decoding. It must be factored into your cluster design.

Here is the practitioner’s checklist for implementing this in production:

- Co-locate your models carefully: If you are using the two-model approach, you cannot have the Draft model sitting on GPU 0 and the Target model sitting on GPU 1 if your interconnect is slow. Every speculation round hands drafted tokens and control back and forth between the two, and paying a slow PCIe round trip on each round can eat into your gains. Keep the models on the same GPUs, or map them across GPUs connected by high-speed NVLink.

- Use Vertex AI Model Garden: If managing the topology of draft vs. target models mapping to physical hardware sounds exhausting, rely on managed services. Google Cloud’s Vertex AI Model Garden is rapidly adopting these exact serving optimizations under the hood for their managed endpoints. Let Google’s engineers worry about the NVLink topology; you just focus on the API integration.

- Obsess Over the Acceptance Rate: The most critical metric to monitor in a speculative setup is the Acceptance Rate. If your Draft model is too dumb (perhaps you used a 1B model to draft for an 80B model), the Target model will reject almost every guess. When this happens, each round yields little more than the one token the Target would have produced anyway, so the time spent drafting and verifying the rejected guesses is pure overhead. You end up slower than if you hadn’t used speculation at all.

- Domain Fine-Tuning the Drafter: If your application is highly specialized, for example generating Python code or parsing legal contracts, a generic 8B model will have a terrible acceptance rate. You will likely need to fine-tune your Draft model with a parameter-efficient method (PEFT) such as LoRA on your specific domain data, so that its guesses align much more closely with what the Target model expects.
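To see why the acceptance rate dominates, here is a simple cost model. It assumes each drafted token is accepted independently with the same probability alpha (real acceptance is neither constant nor independent) and that a Draft pass costs 8/70 of a Target pass. With k guesses per round, the expected number of tokens per round, counting accepted guesses plus the Target’s correction or bonus token, is (1 - alpha^(k+1)) / (1 - alpha). The function names are hypothetical.

```python
def expected_tokens(alpha: float, k: int) -> float:
    """Expected tokens per round with k guesses, each accepted with prob. alpha."""
    if alpha == 1.0:
        return k + 1.0
    return (1 - alpha ** (k + 1)) / (1 - alpha)


def expected_speedup(alpha: float, k: int, draft_cost: float) -> float:
    """Tokens per unit time relative to plain Target decoding (1 token per Target pass)."""
    return expected_tokens(alpha, k) / (k * draft_cost + 1.0)


c = 8 / 70  # assumption: Draft pass cost relative to a Target pass
for alpha in (0.8, 0.5, 0.2):
    print(f"alpha={alpha}: {expected_speedup(alpha, 5, c):.2f}x")
# alpha=0.8: 2.35x
# alpha=0.5: 1.25x
# alpha=0.2: 0.80x
```

Under these assumptions, a drafter that is right 80% of the time lands near the halving of latency this article is about, while one that is right 20% of the time makes every request slower.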

Speculative Decoding is the closest thing we have found to magic in modern AI infrastructure. It is a brilliant software optimization that sidesteps fundamental hardware limitations. It’s a gamble where you control the deck, and if you play your cards right, you cut your latency in half.