
The Agentic Software Development Life Cycle

The software development paradigm is shifting from prompt-and-response to an agentic workflow where developers become coaches, not players, orchestrating AI agent systems for a 100x productivity leap. The key skill is no longer prompt engineering but Context Engineering: providing agents with structured data, documentation, and instructions. This post provides a practical playbook to build and scale this new agentic SDLC, moving from theory to an implementable strategy for the future of software development.

Architecting the New Agentic Software Development Life Cycle

For the past year, the software development world has been dominated by the “prompt-and-response” paradigm. We interface with an LLM, often via editor extensions, ask it to write a function or explain a concept, and then copy-paste that output back into our IDE. This is a powerful form of assistance, but it is not a revolution. In fact, this approach is a bottleneck: the developer is still the “player,” in the loop, making every play, and bound by their own cognitive speed.

The true revolution, the “100x” or “1000x” productivity leap, will only come from a fundamental shift in our role: from Player to Coach, and ultimately, to Owner.

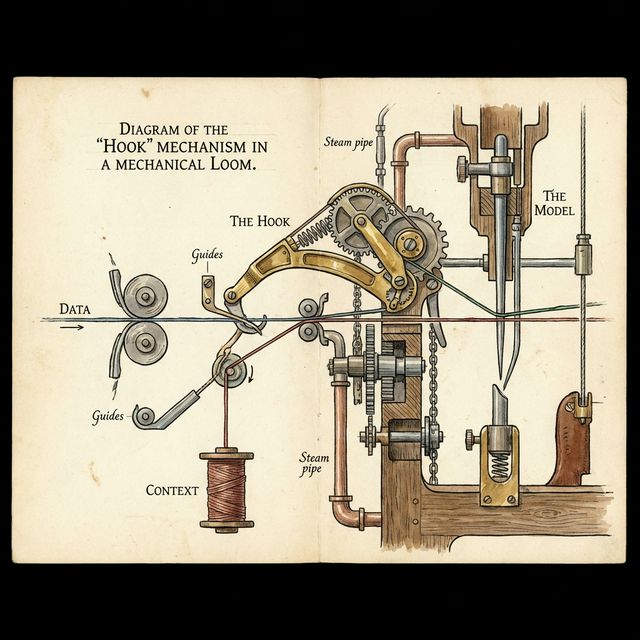

We are moving from prompting a model to orchestrating a system of AI agents. This new agentic SDLC requires a new set of skills, and the most important one isn’t prompt engineering. It’s Context Engineering.

Based on recent research and applied AI experience, this post outlines key technical insights required to build and scale this new agentic workflow, moving from theory to a practical, implementable playbook.

Moving from Player to Owner

Our relationship with AI in software development is evolving across three distinct stages. Understanding this evolution is key to knowing where to invest your efforts.

- The Player (Past): This is the “prompt-and-response” mode. The human is the central intermediary between the LLM and the real world (our codebase, the internet). We ask via prompts, we get some feedback, and then we take all the actions. This is the 1x world. You only build what you know.

- The Coach (Present): This is the “interactive agent” mode. The human moves “less in the loop.” We don’t just give prompts; we give instructions and tasks to an intermediary agent (which could be a sophisticated CLI or an IDE plugin). This agent has capabilities: it can read/write files, create PRs, and run shell commands. We develop the playbooks and guide the agent, which parallelizes the work. This is the 100x unlock.

- The Owner (Future): This is the “fully autonomous” mode. The human is “out of the loop.” Agents are triggered by events (e.g., a GitHub Action on a new PR, a new Jira ticket) and execute complex workflows in the background. The human manages the “franchise,” not the day-to-day runs.

The healthiest SDLC will be a mix of Coach and Owner modes. The critical path to this stage follows a “Crawl, Walk, Run” progression.

- Crawl: Start with “Coach” mode. Create agentic extensions or scripts for discrete jobs: coding, testing, security analysis, debugging.

- Walk: Begin offloading tasks to autonomous agents. Start with low-risk, high-toil work like generating documentation, running security reviews, or writing unit tests.

- Run: The human becomes the orchestrator. An agent, triggered by a bug report, drafts a plan, writes the code, tests it, and requests a human review of the plan and the result, not every line of code.
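To make the Run stage concrete, here is a minimal Python sketch of an event-triggered pipeline in which a human sees only the plan and the tested result. The `BugReport` payload and the `draft_plan`, `write_code`, and `run_tests` functions are hypothetical stand-ins for real agent calls and a real test runner; only the shape of the flow is the point.

```python
from dataclasses import dataclass, field


@dataclass
class BugReport:
    """Hypothetical event payload, e.g. from an issue-tracker webhook."""
    ticket_id: str
    description: str


@dataclass
class RunResult:
    plan: str
    patch: str
    tests_passed: bool
    awaiting_review: bool
    trail: list[str] = field(default_factory=list)


def draft_plan(bug: BugReport) -> str:
    # Stand-in for an agent call that writes plan.md.
    return f"Plan for {bug.ticket_id}: reproduce '{bug.description}', fix, add regression test"


def write_code(plan: str) -> str:
    # Stand-in for an agent call that implements the plan.
    return f"patch implementing: {plan}"


def run_tests(patch: str) -> bool:
    # Stand-in for the project's real test runner; here any non-empty patch "passes".
    return bool(patch)


def handle_bug_report(bug: BugReport) -> RunResult:
    """Run stage: the human reviews the plan and the result, not every line."""
    trail = [f"triggered by {bug.ticket_id}"]
    plan = draft_plan(bug)
    trail.append("plan drafted")
    patch = write_code(plan)
    trail.append("code written")
    passed = run_tests(patch)
    trail.append("tests passed" if passed else "tests failed")
    # Only a passing change is queued for human review; a failing one
    # would go back to the agent (not shown here).
    return RunResult(plan, patch, passed, awaiting_review=passed, trail=trail)


if __name__ == "__main__":
    result = handle_bug_report(BugReport("BUG-123", "Checkout fails on empty cart"))
    print(result.plan)
    print(result.trail)
```

The human checkpoint sits at the end of the pipeline, which matches the idea of reviewing the plan and the outcome rather than each edit.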

🧠 Context Is Your New Compiler

We’ve found that teams spend too much time obsessing over the intrinsic quality of the model. “Can Model X do this?” “Will Model Y give me 100% test coverage?” Ever heard that one before?

As it turns out, this is the wrong focal point. Frontier models are converging. A mediocre model with a high-quality set of instructions will often outperform a frontier model with a lazy prompt.

Your new job is Context Engineering.

Context is the hierarchical set of information you provide to the agent alongside the task. It includes:

- Data: The code files, schemas, and API specs.

- Documentation: Framework guides, internal best practices.

- Persona: “You are a senior security engineer. Your primary concern is injection risk.”

- Instructions: The “playbook” itself. A markdown file detailing the exact steps to follow.
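One way to keep these layers explicit is to assemble them in a fixed order before every run. The sketch below is an illustration, not a specific tool's API: the `AgentContext` class and the section ordering (persona, instructions, documentation, data, then the task) are assumptions you would adapt to your agent.

```python
from dataclasses import dataclass, field


@dataclass
class AgentContext:
    """The four context layers, rendered into one prompt in a fixed order."""
    persona: str
    instructions: str                                            # the playbook text
    documentation: dict[str, str] = field(default_factory=dict)  # guide name -> text
    data: dict[str, str] = field(default_factory=dict)           # file path -> contents

    def render(self, task: str) -> str:
        sections = [f"# Persona\n{self.persona}", f"# Instructions\n{self.instructions}"]
        for name, text in self.documentation.items():
            sections.append(f"# Documentation: {name}\n{text}")
        for path, contents in self.data.items():
            sections.append(f"# Data: {path}\n{contents}")
        # Placing the task last is one common choice; adjust to your agent's conventions.
        sections.append(f"# Task\n{task}")
        return "\n\n".join(sections)


if __name__ == "__main__":
    ctx = AgentContext(
        persona="You are a senior security engineer. Your primary concern is injection risk.",
        instructions="1. Read every query builder.\n2. Flag string-concatenated SQL.",
        data={"app/db.py": "cursor.execute('SELECT * FROM users WHERE id=' + user_id)"},
    )
    print(ctx.render("Review app/db.py for injection risk."))
```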

The model is the biggest black box in the system and the part you have the least control over. You have full control over the task (the prompt) and the context (the instructions). Focus your time there.

Here’s an actual customer story on where this approach made a difference.

The Test Coverage Problem

A regulated fintech firm struggled to get an LLM to produce tests with their required 90% coverage. Prompts like “write tests with 90% coverage for this file” were unreliable, producing inconsistent results.

They shifted to Context Engineering. They created a `testing_playbook.md` file. This instruction file, provided as context on every run, detailed:

- “First, scan the target file and identify all public methods.”

- “For each method, generate one happy path test and two edge-case tests (e.g., null inputs, empty arrays).”

- “Use our internal `MockService` framework, not a generic mocking library.”

- “After writing tests, run the coverage tool and check the output. If coverage is below 90%, identify the missing branches and loop to Step 2.”
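The loop in this playbook can be sketched as a small driver. In this hypothetical version, `generate_tests` and `measure_coverage` are injected callables standing in for the agent and the project's coverage tool; the toy implementations below fake both with a fixed set of branch names so the control flow can run on its own. The iteration cap is an added assumption so a stuck agent cannot loop forever.

```python
from typing import Callable

COVERAGE_TARGET = 90.0


def run_playbook(
    public_methods: list[str],
    generate_tests: Callable[[str, list[str]], list[str]],
    measure_coverage: Callable[[list[str]], tuple[float, list[str]]],
    max_iterations: int = 5,
) -> tuple[float, list[str]]:
    """Write tests per method, measure coverage, and loop on missing branches."""
    tests: list[str] = []
    # Initial pass: a happy path plus edge cases for every public method.
    for method in public_methods:
        tests += generate_tests(method, [])
    coverage, missing = measure_coverage(tests)
    iterations = 0
    # Below target: focus on the missing branches and measure again (bounded).
    while coverage < COVERAGE_TARGET and iterations < max_iterations:
        for method in public_methods:
            tests += generate_tests(method, missing)
        coverage, missing = measure_coverage(tests)
        iterations += 1
    return coverage, tests


# Toy stand-ins so the loop can run without a model or a coverage tool.
ALL_BRANCHES = {"deposit:ok", "deposit:null", "deposit:empty", "deposit:overflow"}


def fake_generate(method: str, missing: list[str]) -> list[str]:
    if not missing:
        # One happy-path test and two edge-case tests.
        return [f"{method}:ok", f"{method}:null", f"{method}:empty"]
    return [b for b in missing if b.startswith(f"{method}:")]


def fake_measure(tests: list[str]) -> tuple[float, list[str]]:
    covered = ALL_BRANCHES & set(tests)
    return 100.0 * len(covered) / len(ALL_BRANCHES), sorted(ALL_BRANCHES - covered)


if __name__ == "__main__":
    final_coverage, final_tests = run_playbook(["deposit"], fake_generate, fake_measure)
    print(final_coverage, final_tests)
```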

The result? The same model, now acting as an agent following a playbook, consistently hit the coverage target. They didn’t need a better model; they needed better instructions.

🛠️ So what does a practical playbook for agent orchestration look like?

In the “Coach” mode, you are guiding the agent. This requires practical techniques for managing the agent’s state, focus, and workflow.

1. Codify Workflows in Markdown

Your SDLC (e.g., Research -> Plan -> Implement -> Test) should be codified into markdown instruction files. This makes your process repeatable, version-controlled, and shareable. An agent (e.g., via a custom extension) reads this workflow file for every task, ensuring consistency.
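If workflows live in markdown, a thin harness can split them into steps and hand each one to the agent in turn. This sketch assumes a convention of numbered `##` headings (for example `## 2. Plan`); that layout and the `parse_workflow` helper are illustrative, not a standard.

```python
import re

WORKFLOW_MD = """\
# Feature workflow

## 1. Research
Read the relevant modules and note existing patterns.

## 2. Plan
Write plan.md describing every file you will change.

## 3. Implement
Follow plan.md exactly.

## 4. Test
Run the test suite and fix failures before finishing.
"""

# Matches headings such as "## 2. Plan" and captures the number and the name.
STEP_HEADING = re.compile(r"^##\s+(\d+)\.\s+(.+)$")


def parse_workflow(markdown: str) -> list[tuple[str, str]]:
    """Split a workflow file into (step name, step instructions) pairs."""
    steps: list[tuple[str, list[str]]] = []
    for line in markdown.splitlines():
        match = STEP_HEADING.match(line)
        if match:
            steps.append((match.group(2).strip(), []))
        elif steps and line.strip():
            # Any non-blank line belongs to the most recent step.
            steps[-1][1].append(line.strip())
    return [(name, " ".join(body)) for name, body in steps]


if __name__ == "__main__":
    for name, body in parse_workflow(WORKFLOW_MD):
        print(f"{name}: {body}")
```

Because the workflow is plain text in the repository, changes to the process go through the same review and version history as code.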

2. Manage Memory: Clear the Context When It No Longer Serves You

Agents have a limited context window, and chat history creates “crust”—noise from previous, unrelated steps. Use planning as a checkpoint.

Instruct the agent: “First, create a detailed implementation plan and save it to plan.md.” Once the file is created, reset the agent’s short-term memory or chat history, for example by starting a new session or using your tool’s clear-history command.

Then, start the next task: “Implement the solution by following plan.md exactly.” This strategy not only optimizes tokens but, more importantly, forces the agent to focus only on the relevant context (the plan), just as a human developer would switch from a planning mindset to an implementation mindset.
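The plan-as-checkpoint pattern amounts to persisting the plan and starting a fresh session that sees only that file. `AgentSession` here is a hypothetical wrapper whose `history` list plays the role of chat memory; with a real CLI or plugin, the reset would be whatever new-session or clear command your tool provides.

```python
import tempfile
from dataclasses import dataclass, field
from pathlib import Path


@dataclass
class AgentSession:
    """Hypothetical chat-style agent; `history` is its short-term memory."""
    history: list[str] = field(default_factory=list)

    def send(self, message: str) -> str:
        self.history.append(message)
        # Stand-in for a model call; a real session would return the agent's reply.
        return f"(agent reply to: {message})"


def plan_then_implement(task: str, workdir: Path) -> AgentSession:
    """Plan in one session, persist plan.md, then implement in a fresh session."""
    planner = AgentSession()
    plan_text = planner.send(f"First, create a detailed implementation plan for: {task}")
    plan_path = workdir / "plan.md"
    plan_path.write_text(plan_text)  # plan.md is the checkpoint between phases

    # The planner's history is discarded; only the plan file carries over.
    implementer = AgentSession()
    implementer.send(
        "Implement the solution by following plan.md exactly.\n\n" + plan_path.read_text()
    )
    return implementer


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        session = plan_then_implement("add retries to the HTTP client", Path(tmp))
        # The implementer holds a single message: it never saw the planning chat.
        print(len(session.history))
```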

3. Use Dynamic Context Selection

You don’t give a human the entire company library to fix one bug. You give them the relevant files. Agents should work the same way.

Instead of overwhelming the agent with all your documentation, use a “poor man’s dynamic context selection.” Maintain a folder of markdown files for different technologies (DaisyUI.md, PixiJS.md, FastHTML.md). In your main instruction file, instruct the agent to import only the files relevant to the current task. This keeps the context sharp and relevant.
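A poor man's selector can be as simple as keyword matching from the task description to file names. The keyword sets below are made-up examples, and whole-word matching is an assumption; an agent could equally be asked to choose the files itself from an index.

```python
from pathlib import Path

# Hypothetical mapping from context files to keywords that signal they are needed.
CONTEXT_INDEX = {
    "DaisyUI.md": {"daisyui", "tailwind", "button", "modal", "theme"},
    "PixiJS.md": {"pixijs", "sprite", "canvas", "webgl", "animation"},
    "FastHTML.md": {"fasthtml", "route", "htmx", "endpoint", "form"},
}


def select_context(task: str, index: dict[str, set[str]] = CONTEXT_INDEX) -> list[str]:
    """Return only the context files whose keywords appear in the task."""
    words = {w.strip(".,:;!?()").lower() for w in task.split()}
    return sorted(name for name, keywords in index.items() if words & keywords)


def load_context(names: list[str], folder: Path) -> str:
    """Concatenate the selected files, skipping any that do not exist."""
    parts = [(folder / n).read_text() for n in names if (folder / n).exists()]
    return "\n\n".join(parts)


if __name__ == "__main__":
    print(select_context("Add a DaisyUI modal to the settings page"))
```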

✅ Building Trust: Validation in a 1000x World

When agents generate 1000x more code, how do we review it? We don’t. We must stop reviewing code and start reviewing intent.

The new “code review” is a comparison of two artifacts:

- The Plan (Future Intent): The `plan.md` file the agent created.

- The Log (Past Trail): An `implementation.log` file that tracks the agent’s actions.

Your review becomes: “Does the log file prove the agent successfully executed the plan?” We can even create a “confidence score” by using another agent to compare the plan against the log.
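The article suggests using a second agent to compare plan and log. As a cheaper deterministic pre-check, the sketch below swaps that agent for a rule-based comparison. It assumes `plan.md` lists numbered steps (`1. ...`) and that `implementation.log` records lines like `[step 2] done ...`; both formats are hypothetical conventions you would have to ask the agent to follow.

```python
import re

PLAN_STEP = re.compile(r"^\s*(\d+)\.\s+(.+)$")
LOG_ENTRY = re.compile(r"^\[step (\d+)\]\s+(done|failed|skipped)\b", re.IGNORECASE)


def plan_steps(plan_md: str) -> dict[int, str]:
    """Read numbered steps ("1. ...") from plan.md."""
    matches = (PLAN_STEP.match(line) for line in plan_md.splitlines())
    return {int(m.group(1)): m.group(2) for m in matches if m}


def confidence_score(plan_md: str, log_text: str) -> tuple[float, list[int]]:
    """Fraction of planned steps the log marks as done, plus the unproven steps."""
    steps = plan_steps(plan_md)
    status: dict[int, str] = {}
    for line in log_text.splitlines():
        m = LOG_ENTRY.match(line)
        if m:
            status[int(m.group(1))] = m.group(2).lower()  # the last entry for a step wins
    unproven = sorted(n for n in steps if status.get(n) != "done")
    score = (len(steps) - len(unproven)) / len(steps) if steps else 0.0
    return score, unproven


if __name__ == "__main__":
    plan = "1. Add retry wrapper\n2. Update client\n3. Add tests\n"
    log = "[step 1] done\n[step 2] failed: import error\n[step 2] done after fix\n[step 3] skipped\n"
    print(confidence_score(plan, log))
```

The list of unproven steps tells the reviewer exactly where to look, which is the point of reviewing intent rather than every line.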

A powerful validation technique is to use different models for generation versus review. We’re all wary of a model “reviewing its own homework.” By using a different model (e.g., using a powerful, large model to write the code, but a faster, lighter model to review it), you introduce an external “pair of eyes” to validate the logic and catch biases.
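Routing generation and review to different models can be enforced in code rather than left to habit. In this sketch, `ModelHandle` is a hypothetical interface (a name plus a completion function), and the model names in the usage example are placeholders rather than real products.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class ModelHandle:
    """Hypothetical interface: a model name plus a prompt -> completion function."""
    name: str
    complete: Callable[[str], str]


def generate_and_review(task: str, generator: ModelHandle, reviewer: ModelHandle) -> dict[str, str]:
    """Write with one model and review with another; refuse self-review."""
    if generator.name == reviewer.name:
        raise ValueError("reviewer must be a different model from the generator")
    code = generator.complete(f"Write code for: {task}")
    verdict = reviewer.complete(f"Review this code for logic errors:\n{code}")
    return {
        "code": code,
        "review": verdict,
        "generated_by": generator.name,
        "reviewed_by": reviewer.name,
    }


if __name__ == "__main__":
    # Placeholder models; swap in real clients for your providers.
    large = ModelHandle("large-model", lambda prompt: "def add(a, b):\n    return a + b")
    light = ModelHandle(
        "light-model",
        lambda prompt: "LGTM" if "return a + b" in prompt else "CHANGES REQUESTED",
    )
    print(generate_and_review("add two numbers", large, light))
```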

The Legacy Migration Loop

A large retail company was paralyzed by a 15-year-old monolithic Java application. They needed to migrate to Spring Boot, but the original business logic was lost to time.

They adopted an agent-based workflow.

- Plan: They used an agent to reverse-engineer the legacy application, not into new code, but into a set of BDD Gherkin feature files (`.feature`). These files became the plan and the specification.

- Implement: They fed these Gherkin files to a “Coach” agent as the primary instructions for writing the new Spring Boot application, module by module.

- Validate: They used the exact same Gherkin files to have another agent generate a suite of Cucumber tests.

This created a closed, automated validation loop. The same specification (Gherkin) drove both the code and the tests that validated it, so any business rule captured in the specification but dropped from the implementation would surface as a failing test.

📊 The Real Payoff: Moving DORA Metrics

This entire agentic framework is not just a developer convenience; it’s a core driver of business performance. The DORA (DevOps Research and Assessment) report identifies key metrics for high-performing tech organizations.

By adopting these agentic workflows, which are by nature small, automated, and version-controlled, we directly impact two key DORA “throughput” metrics:

- Lead Time for Changes: Dramatically decreases.

- Deployment Frequency: Dramatically increases.

DORA research also challenges a common assumption: throughput and stability are not a trade-off. Teams with higher throughput tend to have lower instability (Change Failure Rate, Rework Rate), not higher. By using agents to automate the high-toil work of testing, validation, and small-batch deployments, you not only get faster, you get safer.
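To see whether agentic workflows actually move these numbers, you need to measure them. This is a simplified sketch: it assumes one commit per deployment, measures lead time from commit to production, and treats a deployment as failed if it needed remediation. The `Deployment` record is hypothetical, and DORA's own reporting uses survey-based performance bands rather than this exact calculation.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median


@dataclass
class Deployment:
    commit_time: datetime  # when the change was committed
    deploy_time: datetime  # when it reached production
    failed: bool           # whether it caused a failure that needed remediation


def dora_summary(deploys: list[Deployment], period_days: int) -> dict[str, float]:
    """Median lead time, deployment frequency, and change failure rate over a period."""
    if not deploys:
        return {"median_lead_time_hours": 0.0, "deploys_per_day": 0.0, "change_failure_rate": 0.0}
    # Dividing two timedeltas yields a float number of hours.
    lead_times = [(d.deploy_time - d.commit_time) / timedelta(hours=1) for d in deploys]
    return {
        "median_lead_time_hours": median(lead_times),
        "deploys_per_day": len(deploys) / period_days,
        "change_failure_rate": sum(d.failed for d in deploys) / len(deploys),
    }


if __name__ == "__main__":
    t0 = datetime(2024, 1, 1, 9, 0)
    history = [
        Deployment(t0, t0 + timedelta(hours=2), failed=False),
        Deployment(t0, t0 + timedelta(hours=4), failed=True),
        Deployment(t0, t0 + timedelta(hours=6), failed=False),
    ]
    print(dora_summary(history, period_days=1))
```

Tracking these before and after introducing agents gives you evidence for the throughput and stability claims in your own organization.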

You Are the Architect

The future of software development isn’t a single, all-knowing AI. It’s an ecosystem of specialized agents, and you are its architect.

Stop focusing on the perfect prompt. Start architecting your context. Codify your workflows, build your instruction playbooks, and create robust validation loops. Your new job is to graduate from “Player” to “Coach”—and build the autonomous “Owner” systems that will define the next decade of software.