AI at Scale

Not All Zeros Are the Same: Sparsity Explained

Demystifying hardware acceleration and the competing sparsity philosophies of Google (TPUs) and Nvidia (GPUs). This post connects novel architectures, like Mixture-of-Experts, to hardware design strategy and to the resulting trade-offs in performance, cost, and developer ecosystem.

An Executive’s Guide to AI Sparsity

You might not have heard much about it lately, but “sparsity” has become a critical term for efficient AI. The term is overloaded, however: it describes several fundamentally different problems. This weekend, I spent some time understanding the distinct approaches that two hardware providers, Google and Nvidia, have taken to solve them.

If you are wondering why it’s worth understanding this difference, the answer is simple: it’s a core strategic decision that directly impacts your infrastructure cost, developer velocity, and model performance. Choosing the wrong hardware for your specific “sparsity” problem can be like using a Swiss Army knife for brain surgery.

First, let’s break down the concepts.

Not All Sparsity Is Created Equal

At a high level, “sparsity” means an AI model has a lot of zeros. The challenge is where those zeros are and why. This creates two distinct bottlenecks that dominated the last generation of AI.

- Memory-Bound Sparsity (The “Library Problem”)

This is about sparse data access. Imagine needing to pull one specific book (a data vector) from a library containing trillions of books (the model’s memory). This is the defining bottleneck for large-scale recommendation models (DLRMs) that power everything from e-commerce to social media feeds. When a user logs in, the model must “gather” their specific embedding vector (a dense representation of their interests) from a massive, terabyte-scale table.

So what’s the problem? The computation is trivial (essentially a lookup and a few additions), so the system becomes memory-bound: it stalls waiting for scattered reads from memory while your powerful, expensive compute units sit idle.
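To make the bottleneck concrete, here is a minimal pure-Python sketch of a sum-pooled embedding lookup, the core operation in DLRM-style models. The table size, the IDs, and the helper names (`make_table`, `gather_and_pool`, `arithmetic_intensity`) are hypothetical toy choices; real systems keep the tables as fp32/bf16 arrays in device memory, not Python lists. The point is the ratio: each 4-byte value loaded feeds exactly one addition, so the arithmetic intensity stays at about 0.25 FLOPs per byte no matter how large the table grows.

```python
import random

def make_table(num_rows, dim, seed=0):
    """Toy embedding table: one dense vector per ID (user, item, category...)."""
    rng = random.Random(seed)
    return [[rng.random() for _ in range(dim)] for _ in range(num_rows)]

def gather_and_pool(table, ids):
    """Gather the rows named by `ids` and sum-pool them into one vector."""
    pooled = [0.0] * len(table[0])
    for i in ids:                      # data-dependent, scattered row reads
        for d, v in enumerate(table[i]):
            pooled[d] += v             # exactly one add per value loaded
    return pooled

def arithmetic_intensity(num_ids, dim, bytes_per_value=4):
    """FLOPs per byte read for the sum-pooled lookup above."""
    flops = num_ids * dim              # one add per loaded value
    bytes_read = num_ids * dim * bytes_per_value
    return flops / bytes_read

table = make_table(num_rows=1_000, dim=16)
user_history = [3, 517, 42, 999]       # hypothetical IDs of items a user touched
vec = gather_and_pool(table, user_history)
print(len(vec), arithmetic_intensity(len(user_history), 16))  # 16 0.25
```

Modern accelerators typically need on the order of hundreds of FLOPs per byte of memory traffic to keep their math units busy, which is why this workload waits on memory rather than on compute.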

- Compute-Bound Sparsity (The “Zero-Math Problem”)

This is about sparse computation. Imagine a giant spreadsheet calculation where 50% of the numbers are zero: in theory, you can skip half the math. This applies to the weights of any math-heavy model, such as LLMs and computer vision models. Researchers “prune” these models, setting non-critical weights to zero to reduce the computational load (FLOPs).

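The problem is that skipping random, unstructured zeros is notoriously difficult for parallel hardware: each processing lane ends up with a different, unpredictable amount of work, and the hardware must track the position of every surviving weight with extra index data. To get a real speedup, you need a predictable, structured pattern of zeros that the hardware is built to understand.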
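A small experiment shows why unstructured zeros are hard to exploit. This pure-Python sketch assumes global magnitude pruning, a common criterion the article does not name. It also uses a hypothetical 4x4 weight matrix and helper names. The matrix ends up exactly 50% zeros, but the zeros land unevenly: one row keeps all four weights while another keeps none.

```python
def magnitude_prune(matrix, sparsity):
    """Unstructured pruning: zero the smallest-|w| fraction of ALL weights."""
    ranked = sorted(
        (abs(w), r, c) for r, row in enumerate(matrix) for c, w in enumerate(row)
    )
    k = int(len(ranked) * sparsity)        # number of weights to remove
    pruned = [row[:] for row in matrix]
    for _, r, c in ranked[:k]:
        pruned[r][c] = 0.0
    return pruned

def nonzeros_per_row(matrix):
    """Work each parallel lane would do if it owned one row."""
    return [sum(1 for w in row if w != 0.0) for row in matrix]

W = [
    [0.9, -0.1, 0.05, 0.7],
    [0.02, 0.03, -0.01, 0.04],
    [0.8, -0.6, 0.5, 0.3],
    [0.2, 0.06, -0.9, 0.07],
]
pruned = magnitude_prune(W, sparsity=0.5)
print(nonzeros_per_row(pruned))  # [2, 0, 4, 2]
```

Suppose each row goes to its own parallel lane. The lane holding four nonzeros sets the pace, so skipping half the math overall does not buy a 2x speedup.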

How Do Nvidia’s and Google’s Approaches Differ?

Google’s Approach: The TPU SparseCore

Google’s core businesses (Search, YouTube, Play Store) depended, and still depend, on massive-scale recommendation. So Google built a “scalpel” for this specific memory-bound problem.

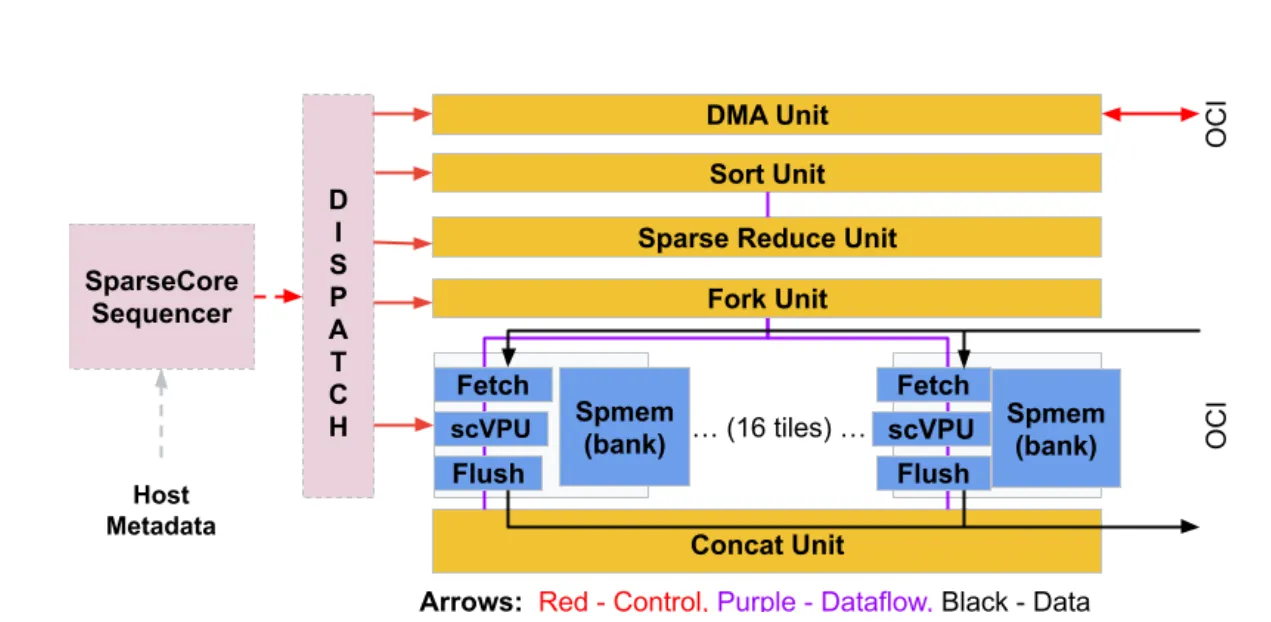

The SparseCore is not part of the TPU’s main compute units (the TensorCores). It is a separate, dedicated dataflow processor on the same chip (an ASIC-within-an-ASIC), found on TPU v4, v5p, and the new Ironwood chips. Running in parallel with the TensorCores, its sole job is to handle the “library problem.” It races ahead, dynamically fetches the sparse, irregular embedding data from high-bandwidth memory (HBM), and feeds it to the TensorCores in a clean, dense format they can process immediately.

SparseCores accelerate embedding-heavy recommendation models by a staggering 5x-7x, while using only about 5% of the die area and power. For developers, this complexity is almost entirely hidden by the XLA compiler, making it a low-effort gain within the Google Cloud ecosystem.
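The “race ahead and feed” behavior is a producer/consumer pipeline. Here is a loose software analogy, assuming a producer thread stands in for the SparseCore and the main thread stands in for the TensorCores. A bounded queue plays the role of an on-chip buffer, and its depth is hypothetical. None of these names correspond to a real TPU API, and Python threads do not model hardware timing. The sketch only shows the division of labor: the consumer never performs an irregular gather.

```python
import queue
import threading

def sparse_fetcher(batches, table, out_q):
    """Producer: gathers embedding rows for each batch ahead of the consumer."""
    for ids in batches:
        dense_block = [table[i] for i in ids]   # irregular, data-dependent gather
        out_q.put(dense_block)                  # blocks while the buffer is full
    out_q.put(None)                             # sentinel: no more work

def dense_consumer(in_q, results):
    """Consumer: only ever sees dense, already-gathered blocks."""
    while True:
        block = in_q.get()
        if block is None:
            break
        results.append([sum(col) for col in zip(*block)])  # sum-pool the rows

table = [[float(r), float(r) * 10] for r in range(100)]  # toy 100-row table
batches = [[1, 2, 3], [10, 20], [99]]                    # hypothetical lookups
buf = queue.Queue(maxsize=2)                             # bounded "on-chip" buffer
results = []
producer = threading.Thread(target=sparse_fetcher, args=(batches, table, buf))
producer.start()
dense_consumer(buf, results)
producer.join()
print(results)  # [[6.0, 60.0], [30.0, 300.0], [99.0, 990.0]]
```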

Nvidia’s Approach: The 2:4 Sparse Tensor Core

Nvidia, by contrast, provides a “Swiss Army knife” for all AI developers: a general-purpose optimization for the compute-bound problem.

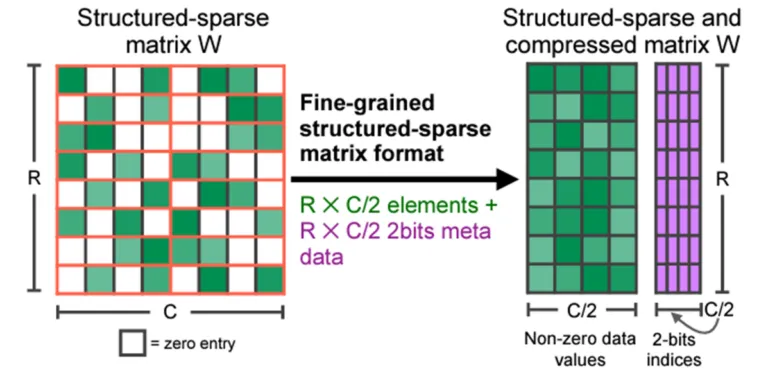

Rather than building a separate co-processor, Nvidia integrated this feature directly into the main Tensor Cores of its Ampere (A100) and Hopper (H100) GPUs.

It solves the “zero-math problem” by enforcing a rigid “2:4 structured sparsity” pattern. The hardware understands one rule: in every group of four consecutive weights, at most two may be nonzero. The weight matrix is stored in a compressed format: the two surviving values per group, plus small metadata indices recording their positions. The Tensor Core uses that metadata to skip the zero-valued multiplications, theoretically doubling its math throughput.
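The following pure-Python sketch mimics the 2:4 idea in software. It assumes magnitude-based selection within each group, which is one common choice; Nvidia’s tooling may choose differently. The sketch prunes a row to 2:4, stores two values plus two position indices per group, and computes a dot product with half the multiplies. The helper names are hypothetical and this is not the cuSPARSELt API. Real Tensor Cores perform the metadata-driven selection in hardware.

```python
def prune_2_4(row):
    """Keep the 2 largest-|w| weights in each group of 4 and zero the other 2."""
    assert len(row) % 4 == 0, "row length must be a multiple of 4"
    out = []
    for g in range(0, len(row), 4):
        group = row[g:g + 4]
        keep = sorted(range(4), key=lambda j: abs(group[j]), reverse=True)[:2]
        out.extend(w if j in keep else 0.0 for j, w in enumerate(group))
    return out

def compress_2_4(row):
    """Store 2 values plus 2 position indices (0-3, so 2 bits each) per group."""
    values, meta = [], []
    for g in range(0, len(row), 4):
        group = row[g:g + 4]
        nz = [j for j in range(4) if group[j] != 0.0]
        assert len(nz) <= 2, "group violates the 2:4 pattern"
        # Groups with fewer than 2 nonzeros are padded with explicit zero slots.
        pad = [j for j in range(4) if j not in nz][: 2 - len(nz)]
        idx = sorted(nz + pad)
        values.extend(group[j] for j in idx)
        meta.extend(idx)
    return values, meta

def sparse_dot(values, meta, x):
    """Dot product using only stored values: 2 multiplies per group of 4."""
    total = 0.0
    for k, (v, j) in enumerate(zip(values, meta)):
        group_start = (k // 2) * 4
        total += v * x[group_start + j]   # metadata selects the matching input
    return total

w = [0.5, -0.1, 0.0, 2.0, 0.3, 0.4, -0.7, 0.05]
x = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0]
w24 = prune_2_4(w)                  # [0.5, 0.0, 0.0, 2.0, 0.0, 0.4, -0.7, 0.0]
values, meta = compress_2_4(w24)    # ([0.5, 2.0, 0.4, -0.7], [0, 3, 1, 2])
dense = sum(a * b for a, b in zip(w24, x))
print(abs(sparse_dot(values, meta, x) - dense) < 1e-12)  # True
```

The compressed form also halves the bytes needed for the weight values, at the cost of a few bits of metadata per group.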

In practice, this delivers roughly a 1.5x to 1.8x inference speedup for models that can be pruned to this pattern without unacceptable accuracy loss. The “cost” is a non-trivial engineering workflow: developers must “train, prune, and fine-tune” their models to fit the 2:4 pattern. The ecosystem mitigates this with robust libraries such as Nvidia’s cuSPARSELt and PyTorch’s torchao.sparsity.
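Amdahl’s law offers one way to see why a theoretical 2x shrinks to 1.5x-1.8x in practice. Only the time spent in 2:4-eligible weight matrix multiplies gets faster. Operations such as softmax, normalization, and data movement do not. The runtime fractions below are illustrative assumptions, not measurements, and the sparse kernel itself may also fall short of 2x.

```python
def end_to_end_speedup(sparse_fraction, kernel_speedup=2.0):
    """Amdahl's law: only the share of runtime in 2:4-eligible matmuls speeds up.

    sparse_fraction: fraction of baseline runtime in pruned-weight matmuls (assumed).
    kernel_speedup: speedup of those matmuls (2.0 is the theoretical ceiling).
    """
    return 1.0 / ((1.0 - sparse_fraction) + sparse_fraction / kernel_speedup)

for f in (0.5, 0.7, 0.9):
    print(f, round(end_to_end_speedup(f), 2))
# 0.5 1.33
# 0.7 1.54
# 0.9 1.82
```

Even with 90% of runtime in sparse-eligible layers, the end-to-end ceiling is about 1.8x.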

Mixture-of-Experts (MoE) and “Activation Sparsity”

ref: https://newsletter.maartengrootendorst.com/p/a-visual-guide-to-mixture-of-experts

For the sake of completeness, let’s also look at a newer architecture, Mixture-of-Experts (MoE), which introduces a third type of sparsity.

MoE models (like Mixtral and, reportedly, GPT-4) are not one giant dense network. Instead of a single feed-forward block in each layer, they contain many smaller “experts” (e.g., 8) plus a “gating network” (router) that dynamically sends each input token to only a few of them (e.g., 2 of the 8).
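The routing step can be sketched in a few lines of pure Python. This follows a Mixtral-style top-k gate, in which the router scores every expert, keeps the best k, and applies softmax over just those k scores. It is one common design, not the only one. The gate weights, the token, and the “experts” are hypothetical stand-ins; a real expert is a feed-forward network, not a scalar multiply.

```python
import math

def softmax(xs):
    m = max(xs)                              # subtract max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def gate_logits(x, gate_w):
    """Router score per expert: dot product of the token with each gate row."""
    return [sum(xi * wi for xi, wi in zip(x, row)) for row in gate_w]

def route(logits, k=2):
    """Top-k gating: keep the k best experts, softmax over just their scores."""
    top = sorted(range(len(logits)), key=lambda e: logits[e], reverse=True)[:k]
    return list(zip(top, softmax([logits[e] for e in top])))

def moe_forward(x, gate_w, experts, k=2):
    """Weighted sum of the selected experts' outputs; the others never run."""
    out = [0.0] * len(x)
    for e, w in route(gate_logits(x, gate_w), k):
        y = experts[e](x)                    # only k of len(experts) execute
        out = [o + w * yi for o, yi in zip(out, y)]
    return out

def make_expert(scale):
    # Hypothetical stand-in for an expert's feed-forward network.
    return lambda v: [scale * t for t in v]

experts = [make_expert(e + 1) for e in range(8)]   # 8 experts, as in the text
gate_w = [[1, 0], [0, 1], [1, 1], [-1, 0], [0, -1], [2, -1], [0.5, 0.5], [-1, 0.25]]
x = [1.0, 2.0]                                     # one toy token embedding
print(route(gate_logits(x, gate_w)))  # experts 2 and 1, weights ~0.73 and ~0.27
print(moe_forward(x, gate_w, experts))
```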

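This creates “activation sparsity”: for any given token, most of the model’s parameters are inactive. This is the key to training trillion-parameter models with a fraction of the compute a dense model of the same size would need. Tokens are grouped by expert, and each expert processes its group as a dense block. The resulting pattern is therefore “block-sparse,” and it requires new hardware strategies.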
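A quick calculation shows how “most parameters are inactive” plays out. The sketch assumes a simplified model. Shared parameters (attention, embeddings, router) are used by every token. Each token additionally touches only its top-k experts. The sizes are made-up round numbers in billions, not any real model’s.

```python
def moe_param_counts(shared, per_expert, num_experts, top_k):
    """Return (total, active-per-token) parameter counts for a simple MoE model.

    shared: parameters every token uses (attention, embeddings, router).
    per_expert: parameters in one expert's feed-forward block.
    """
    total = shared + num_experts * per_expert
    active = shared + top_k * per_expert
    return total, active

# Hypothetical sizes in billions of parameters.
total, active = moe_param_counts(shared=2, per_expert=5, num_experts=8, top_k=2)
print(total, active, round(active / total, 2))  # 42 12 0.29
```

Per-token forward compute scales roughly with the active count (about 2 FLOPs per active parameter). In this toy configuration, each token therefore costs under a third of what an equally large dense model would.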

TPUs tackle MoE with a holistic, co-designed approach. Google’s newer TPUs (Trillium and Ironwood) are explicitly designed to handle the massive scale of MoE models. On the software side, they rely on open-source kernels written in Pallas, a JAX extension for custom kernels that is used for high-performance “block-sparse matrix multiplication on Cloud TPUs.”
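The core idea of block-sparse matrix multiplication is to check emptiness once per tile rather than once per element. This minimal pure-Python sketch consults a per-tile mask and skips whole tiles of A that are known to be zero. It illustrates the general technique only. It is not Pallas code or Google’s kernel, and the mask, tile size, and MoE-style labels in the example are hypothetical.

```python
def block_sparse_matmul(a, b, block_mask, bs):
    """C = A @ B computed tile by tile, skipping tiles of A marked empty.

    a is n x m, b is m x p (lists of lists); n and m must be multiples of bs.
    block_mask[bi][bk] is False when tile (bi, bk) of A is known to be all zeros.
    """
    n, m, p = len(a), len(b), len(b[0])
    c = [[0.0] * p for _ in range(n)]
    tiles_computed = 0
    for bi in range(n // bs):
        for bk in range(m // bs):
            if not block_mask[bi][bk]:
                continue                  # skip a whole tile with one check
            tiles_computed += 1
            for i in range(bi * bs, (bi + 1) * bs):
                for k in range(bk * bs, (bk + 1) * bs):
                    for j in range(p):
                        c[i][j] += a[i][k] * b[k][j]
    return c, tiles_computed

# Hypothetical MoE-like layout: rows 0-1 only use "expert 0" (columns 0-1),
# rows 2-3 only use "expert 1" (columns 2-3), so off-diagonal tiles are empty.
a = [[1, 2, 0, 0],
     [3, 4, 0, 0],
     [0, 0, 5, 6],
     [0, 0, 7, 8]]
b = [[1, 0], [0, 1], [1, 1], [2, 0]]
mask = [[True, False], [False, True]]
c, tiles = block_sparse_matmul(a, b, mask, bs=2)
print(c, tiles)  # [[1.0, 2.0], [3.0, 4.0], [17.0, 5.0], [23.0, 7.0]] 2
```

Only 2 of the 4 tiles are computed. Each surviving tile is a small dense matmul, which is the shape dense matrix units handle well.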

Nvidia’s 2:4 hardware was designed for weight sparsity, not activation sparsity.

True to its philosophy, Nvidia’s flexible ecosystem is solving this problem in software. New research, such as the “Samoyeds” system, shows how custom kernels can apply structured sparsity to both weights and activations. By mapping the MoE problem onto Nvidia’s existing 2:4 Sparse Tensor Cores, these kernels achieve significant speedups.

How Should You Approach This? Match Your Workload to the Hardware

Your choice of accelerator should be dictated by your primary AI workload.

For Hyperscale Recommendation/Search (e.g., e-commerce, media, ads):

- Recommendation: Google Cloud TPU (v4/v5p/Ironwood).

- Why: Your bottleneck is the memory-bound “library problem.” The TPU SparseCore is purpose-built for this exact issue, delivering 5x-7x gains on embedding-heavy workloads with minimal developer effort.

For General-Purpose Inference (LLMs, CV) with a Focus on TCO:

- Recommendation: Nvidia (A100/H100) Ecosystem.

- Why: Your bottleneck is the compute-bound “zero-math problem.” The 2:4 Sparse Tensor Core is a general feature that can accelerate any model you can prune to the 2:4 pattern. It requires an upfront MLOps investment (“train, prune, fine-tune”) but provides a solid 1.5x-1.8x inference speedup, with the flexibility to run on any cloud or on-premises.

For Cutting-Edge MoE Model Development:

- Recommendation (Integrated Stack): Google TPU (Trillium/Ironwood). This is the “all-in-one” path. Google is co-designing the hardware and software (Pallas kernels) specifically for MoE’s “block-sparse” compute pattern.

- Recommendation (Flexible R&D): Nvidia (H100). This is the “build-your-own” path. The hardware is a powerful, flexible tool. You will rely on your team’s (or the community’s) software expertise to build or leverage new kernels (like Samoyeds) to map the MoE problem onto the 2:4 hardware.