AI at Scale

Switching Technologies in AI Accelerators

This post contrasts the switching technologies behind NVIDIA’s GPU clusters and Google’s TPUs. Modern AI workloads demand heavy data movement, so understanding the two approaches is key to matching a workload to the right hardware.

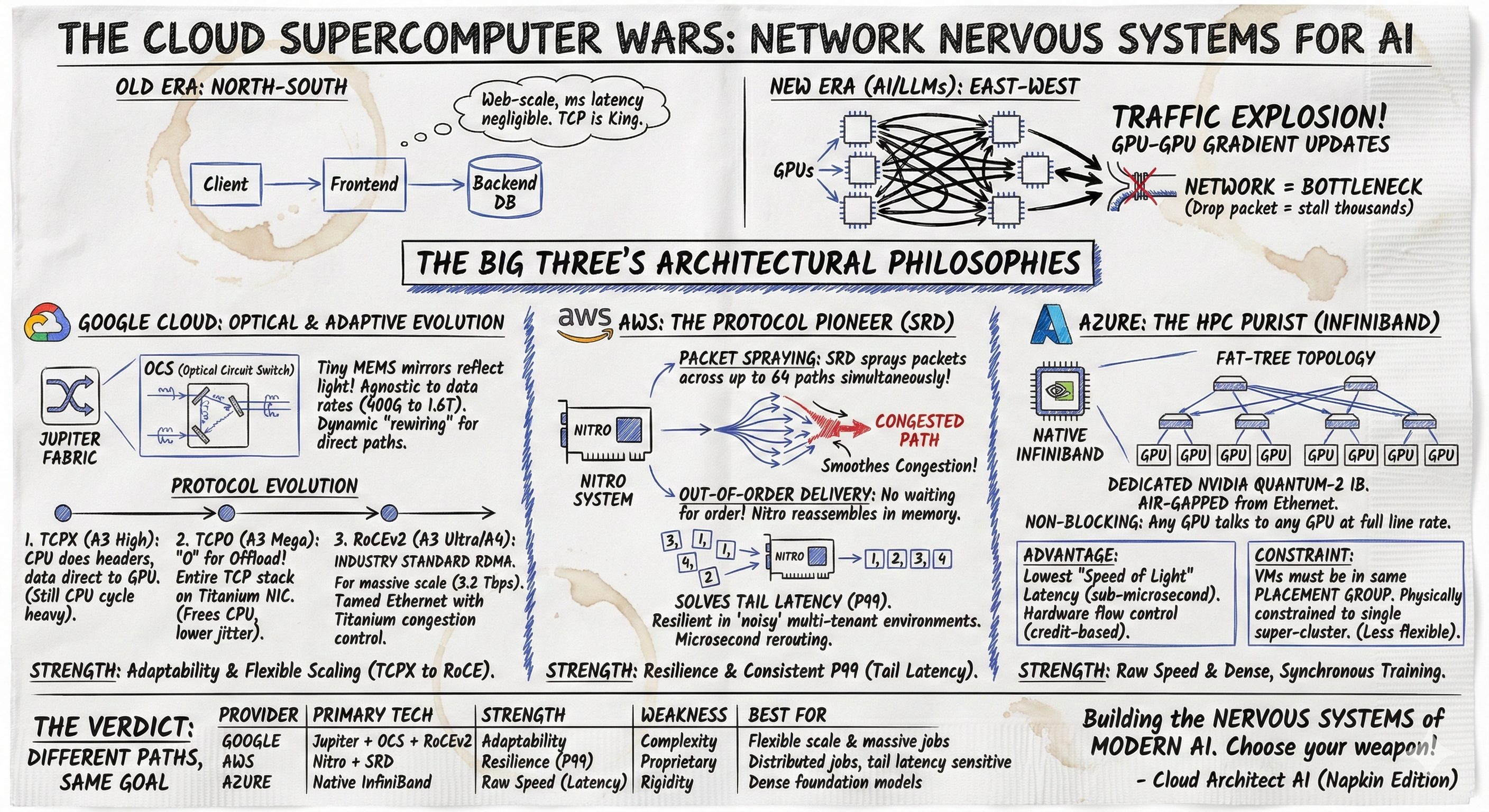

In 2025, two distinct philosophies govern how AI supercomputers are built and connected. Google’s approach, centered on its Tensor Processing Units (TPUs), uses an Optical Circuit Switching (OCS) fabric. This system physically reconfigures its network using light paths, creating a bespoke, optimized topology for a single, massive workload. It’s a model of specialized efficiency, designed for the marathon-like training of foundation models where power consumption and total cost of ownership are paramount.

In contrast, NVIDIA’s ecosystem employs a hierarchical Electrical Packet Switching (EPS) fabric. This two-tiered approach uses proprietary NVLink and NVSwitch for ultra-fast communication within a server rack (“scale-up”) and industry-standard InfiniBand to connect multiple racks into a massive cluster (“scale-out”). This architecture prioritizes flexibility and dynamic responsiveness, managed by intelligent software that adapts to the fixed network.

Which one should you go for? Like all things IT, it depends. Google’s OCS offers unparalleled efficiency for predictable, monolithic workloads at an extreme scale. NVIDIA’s tiered EPS provides the versatility required for the varied and often unpredictable demands of AI research, multi-tenant cloud services, and broad enterprise deployment. This post explains the approach each of the two giants takes, to give you a clearer view of which path fits your needs.

TPU and OCS Architecture - Reconfigurable Fabric

Google’s approach to AI infrastructure is one of hardware and software co-design, culminating in a system that physically adapts its network to the task at hand.

TPU Pod Architecture and Optical Connectivity

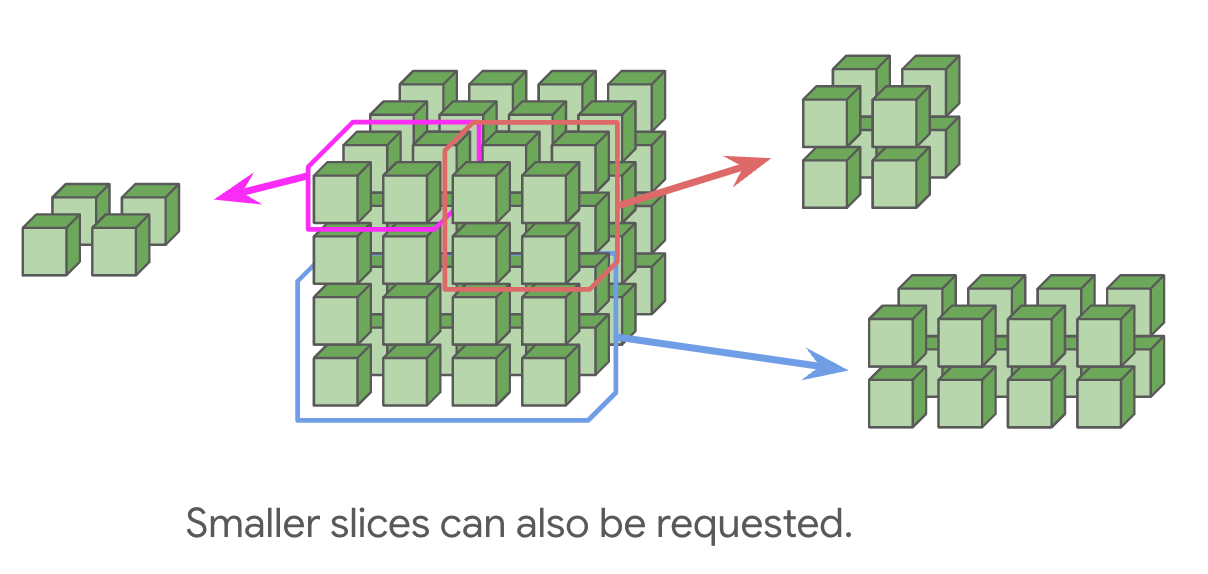

Google’s AI infrastructure is built from modular units. The smallest building block is a “cube,” a 4x4x4 arrangement of 64 TPU chips connected by high-speed copper links. To scale beyond this, multiple cubes are assembled into a “TPU Pod,” which can contain up to 4,096 chips (TPU v4) or 9,216 chips (v7).
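The cube arithmetic can be checked with a few lines of Python. This is only a sketch: the names `chips_per_cube` and `cubes_per_pod` are made up for illustration, and it assumes a pod is built solely from full 64-chip cubes.

```python
CUBE_DIMS = (4, 4, 4)  # one cube is a 4x4x4 block of TPU chips


def chips_per_cube(dims=CUBE_DIMS):
    """Number of chips in one cube."""
    x, y, z = dims
    return x * y * z


def cubes_per_pod(pod_chips, dims=CUBE_DIMS):
    """Number of full cubes needed for a pod of `pod_chips` chips."""
    per_cube = chips_per_cube(dims)
    if pod_chips % per_cube:
        raise ValueError("pod size is not a whole number of cubes")
    return pod_chips // per_cube


print(chips_per_cube())     # 64
print(cubes_per_pod(4096))  # 64
print(cubes_per_pod(9216))  # 144
```

So a 4,096-chip pod corresponds to 64 cubes and a 9,216-chip pod to 144, which is the number of building blocks the optical layer has to tie together.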

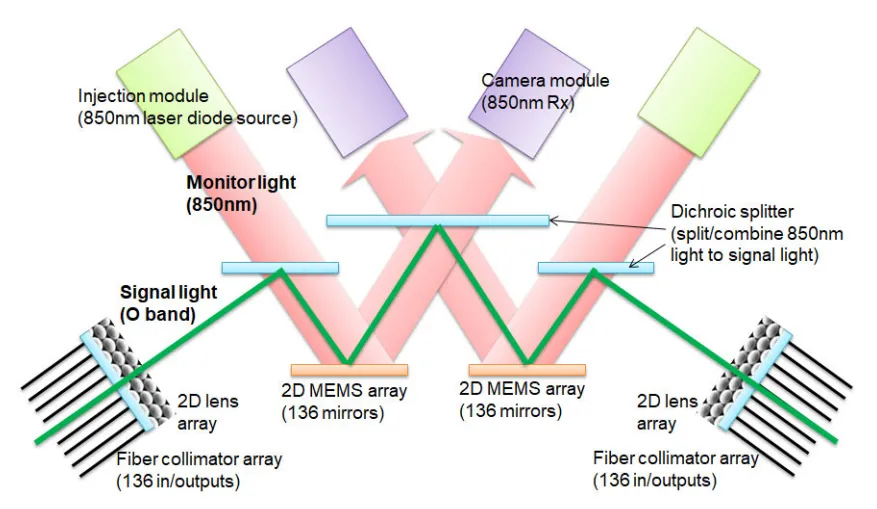

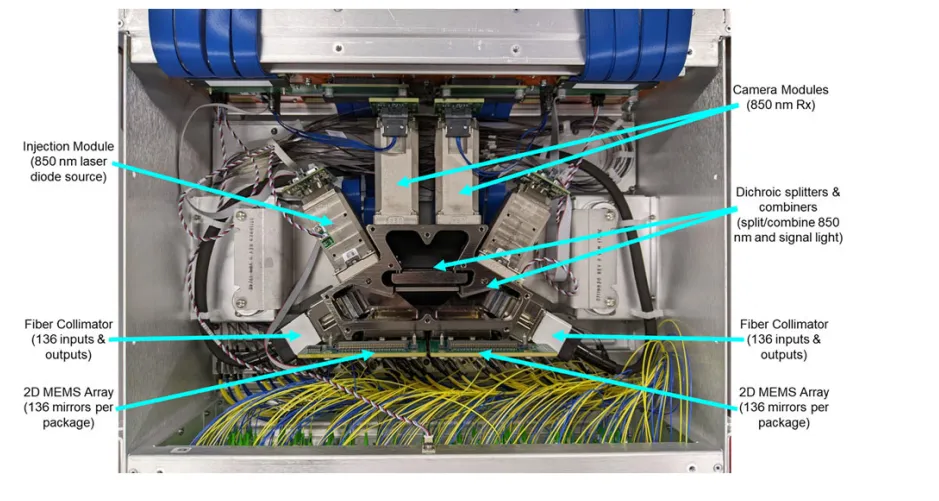

The challenge is connecting these cubes, which can be separated by distances too great for copper. Google’s solution is the Optical Circuit Switch (OCS), a device that routes data purely as light.

At the heart of the OCS are microscopic, movable mirrors (3D MEMS). When a connection is needed, these mirrors are precisely tilted to redirect a beam of light from an input fiber to an output fiber, creating a direct, uninterrupted physical path—a “circuit”—between two different TPU cubes. This connects the on-chip Inter-Chip Interconnect (ICI) of one cube to another, effectively wiring them together into a single, larger machine.
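Conceptually, an OCS behaves like a one-to-one mapping from input ports to output ports that stays fixed until the mirrors are moved again. The toy model below illustrates that idea only; the class `ToyOCS` and its methods are hypothetical and do not correspond to any real Google interface.

```python
class ToyOCS:
    """Toy model of an optical circuit switch: a one-to-one port mapping."""

    def __init__(self, num_ports):
        self.num_ports = num_ports
        self.out_for_in = {}  # input port -> output port
        self.in_for_out = {}  # output port -> input port

    def connect(self, in_port, out_port):
        """'Tilt the mirror': steer the light on in_port to out_port."""
        for port in (in_port, out_port):
            if not 0 <= port < self.num_ports:
                raise ValueError(f"port {port} out of range")
        owner = self.in_for_out.get(out_port)
        if owner is not None and owner != in_port:
            raise ValueError(f"output {out_port} already in use")
        # Re-pointing an input frees the output it used before.
        old = self.out_for_in.pop(in_port, None)
        if old is not None:
            del self.in_for_out[old]
        self.out_for_in[in_port] = out_port
        self.in_for_out[out_port] = in_port

    def route(self, in_port):
        """Return the output a signal on in_port reaches, or None."""
        return self.out_for_in.get(in_port)


ocs = ToyOCS(num_ports=8)
ocs.connect(0, 5)    # circuit from port 0 to port 5
ocs.connect(0, 7)    # reconfigure: port 0 now reaches port 7
print(ocs.route(0))  # 7
print(ocs.route(3))  # None
```

Unlike a packet switch, nothing here inspects the data: once `connect` has run, everything arriving on an input simply follows the established path.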

ref: https://arxiv.org/pdf/2208.10041

The Software Stack: Programming the Light

This reconfigurable hardware is controlled by a sophisticated, deeply integrated software stack that plans the network configuration ahead of time.

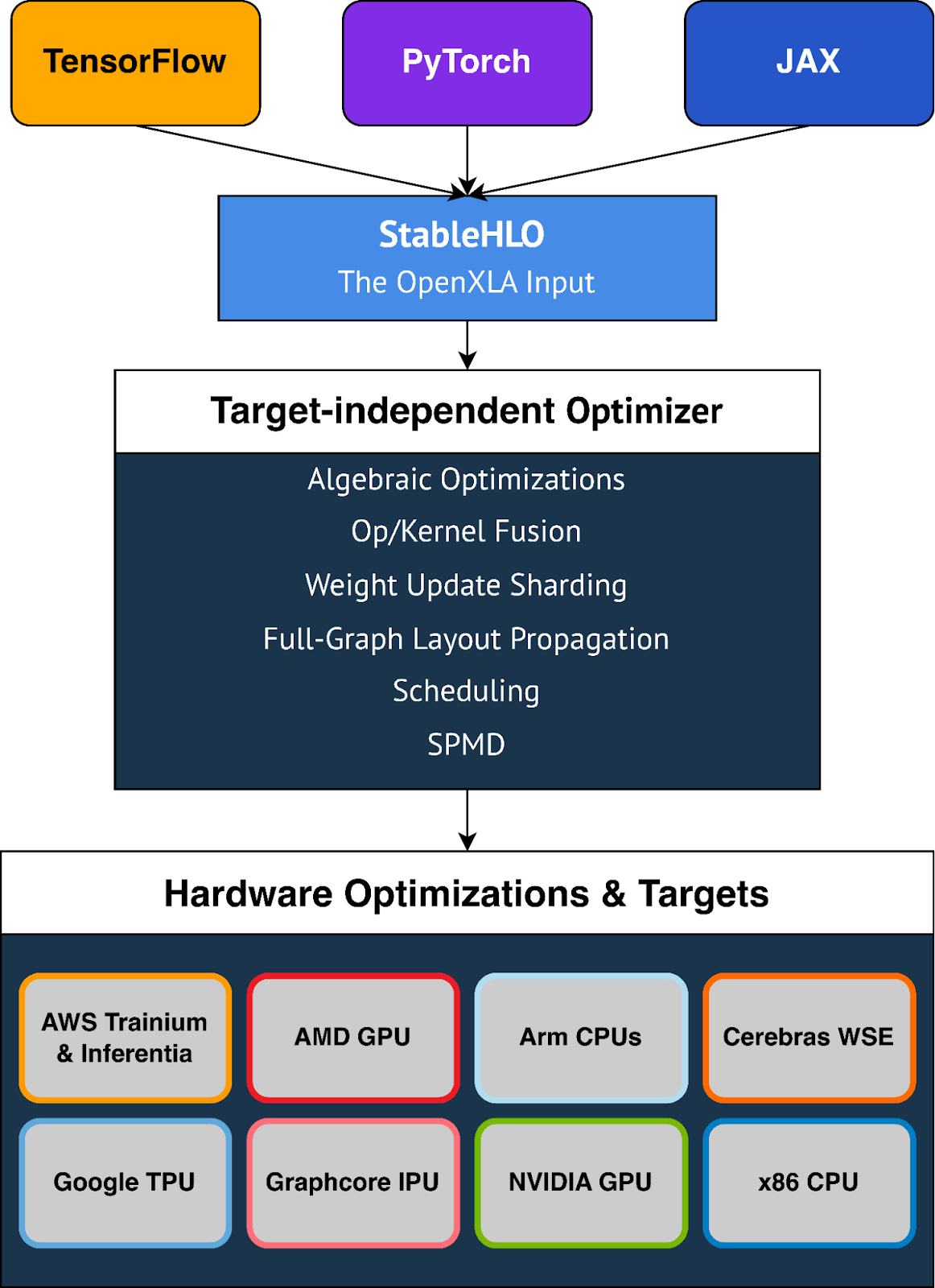

- JAX, XLA, and HLO: Developers typically write models using JAX, a Python library for high-performance computing. The JAX program is compiled by the Accelerated Linear Algebra (XLA) compiler into a High Level Operations (HLO) graph. This graph is a static, predictable blueprint of the entire workload, detailing every computation and, crucially, every communication pattern required between the TPUs.

- Pathways and the Pod Manager: The HLO graph is fed to the Pathways orchestration system. For a given job, the user requests a specific network topology (e.g., a 3D torus) that is optimal for their model’s communication needs. The Pathways Resource Manager and a lower-level Pod Manager analyze the HLO graph and the requested topology. They then generate a master plan, mapping computations to specific TPU cubes and determining the exact light paths the OCS must create to form the desired network.

- Execution: This plan is then executed by libtpu, which sends the precise commands to tilt the OCS mirrors and configure the on-chip ICI switches. This entire process happens before the training job begins, physically wiring the supercomputer into a bespoke network optimized for that specific workload.
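To make the planning step more concrete, the sketch below enumerates which pairs of cubes must be linked to form a 3D torus of a given shape. It is a simplified illustration, not how Pathways or the Pod Manager actually work: the function `torus_links` is hypothetical, cubes are identified only by their grid coordinates, and each returned link is assumed to stand for one or more optical circuits.

```python
from itertools import product


def torus_links(shape):
    """Return the undirected neighbour links of a 3D torus of cubes."""
    links = set()
    for coord in product(*(range(n) for n in shape)):
        for axis, size in enumerate(shape):
            if size < 2:
                continue  # no neighbour along a length-1 axis
            nxt = list(coord)
            nxt[axis] = (coord[axis] + 1) % size  # wrap-around closes the torus
            links.add(frozenset((coord, tuple(nxt))))
    return links


plan = torus_links((4, 4, 4))  # 64 cubes arranged as a 4x4x4 torus
print(len(plan))               # 192
```

Requesting a different shape, say `(2, 4, 8)`, yields a different set of links over the same 64 cubes, which is the kind of change that an OCS can make without anyone re-cabling the hardware.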

So what does this all mean?

This unique approach presents a clear set of trade-offs & benefits.

- The primary advantage is unparalleled efficiency. By creating a direct optical path, the OCS avoids the power-hungry optical-electrical-optical conversions that a packet switch performs at every hop, and it consumes less than 3% of the total system power. Once a circuit is set, data crosses the switch with almost no added latency, because nothing along the path buffers packets or inspects headers.

- The architecture is also highly fault tolerant: if a chip or link fails, the software can reconfigure the light paths to route around the faulty component, dramatically improving system availability for long-running jobs.

- The OCS approach delivers the highest return on investment for massive-scale foundation model training. For workloads that run continuously for weeks or months on thousands of chips with predictable communication patterns, the one-time setup cost is negligible. The resulting gains in performance-per-watt and lower total cost of ownership make it the ideal solution for dedicated “AI factories”.
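The fault-tolerance point above can be pictured as a remapping problem: the logical topology stays the same, and only the physical cube behind one slot changes. The sketch below assumes a pool of spare cubes and uses a hypothetical helper, `replace_failed_cube`; the real recovery logic is not described in this post.

```python
def replace_failed_cube(placement, spares, failed_cube):
    """Swap a failed cube for a spare, leaving the logical topology unchanged.

    placement: dict mapping logical torus slot -> physical cube id
    spares:    list of healthy, unused physical cube ids
    Returns (new_placement, remaining_spares); the inputs are not modified.
    """
    slots = [slot for slot, cube in placement.items() if cube == failed_cube]
    if not slots:
        return dict(placement), list(spares)  # failed cube was not in use
    if not spares:
        raise RuntimeError("no spare cube available")
    remaining = list(spares)
    new_placement = dict(placement)
    new_placement[slots[0]] = remaining.pop(0)
    return new_placement, remaining


placement = {(0, 0, 0): "cube-A", (1, 0, 0): "cube-B"}
new_placement, remaining = replace_failed_cube(placement, ["cube-S"], "cube-B")
print(new_placement[(1, 0, 0)])  # cube-S
print(remaining)                 # []
```

After such a remap, the optical circuits that used to end at the failed cube would be re-pointed at the spare, so the job sees the same torus as before.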

NVIDIA’s Hierarchical Fabric - Tiered & Electrical Approach

NVIDIA’s strategy is built on a flexible, hierarchical electrical network that has become the industry standard, prioritizing broad compatibility and dynamic performance.

Scale-Up and Scale-Out Architecture

NVIDIA employs a two-tiered interconnect strategy:

- Scale-Up with NVLink and NVSwitch: Within a single server or rack, NVIDIA uses its proprietary NVLink technology, a high-bandwidth, low-latency electrical interconnect for direct GPU-to-GPU communication. NVSwitch chips act as a fabric to create all-to-all, non-blocking connectivity, ensuring every GPU in the server can communicate with every other GPU at full speed. This tightly coupled group of GPUs is known as an “NVLink domain” and can scale up to 72 GPUs in the latest Blackwell architecture, effectively acting as a single, massive accelerator.

- Scale-Out with InfiniBand: To connect multiple NVLink domains (i.e., multiple servers) into a larger cluster, NVIDIA uses InfiniBand, a high-performance networking standard widely adopted in high-performance computing (HPC). This creates a powerful, cluster-wide fabric capable of scaling to thousands of GPUs.
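The two tiers can be summarised as a simple rule for which fabric carries traffic between any two GPUs. The sketch below assumes GPUs are numbered contiguously, domain by domain, and uses the 72-GPU domain size quoted above; the function `fabric_between` is purely illustrative.

```python
NVLINK_DOMAIN_SIZE = 72  # GPUs per NVLink domain (Blackwell figure from the text)


def fabric_between(gpu_a, gpu_b, domain_size=NVLINK_DOMAIN_SIZE):
    """Name the tier that carries traffic between two global GPU indices."""
    if gpu_a == gpu_b:
        return "local"       # same GPU, no network involved
    if gpu_a // domain_size == gpu_b // domain_size:
        return "nvlink"      # scale-up: same NVLink domain
    return "infiniband"      # scale-out: different domains


print(fabric_between(0, 71))  # nvlink
print(fabric_between(71, 72)) # infiniband
```

Parallelism strategies usually try to keep the most communication-heavy traffic on the scale-up tier and leave only the lighter traffic for scale-out.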

ref: https://www.nvidia.com/en-us/networking/infiniband-switching/

Electrical Packet Switching

This fabric operates on electrical packet switching, where data is broken into small packets that are routed individually. This is enhanced by two key technologies:

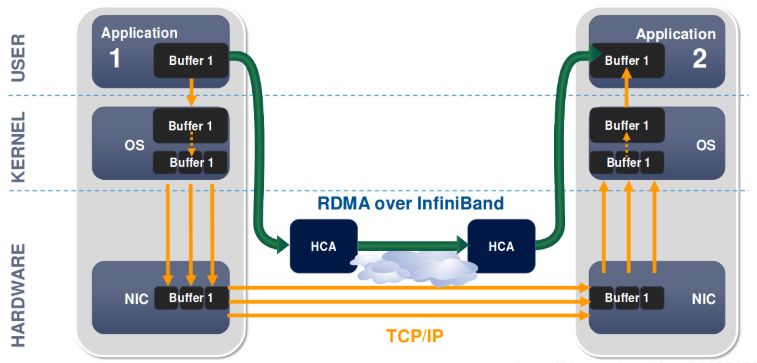

RDMA (Remote Direct Memory Access): A core feature of InfiniBand, RDMA allows a network adapter in one server to directly read or write data in the memory of another server. This process bypasses the CPU and operating system on both ends (“kernel bypass” and “zero-copy”), dramatically reducing latency and freeing up the CPU for computation.

In-Network Computing (SHARP): NVIDIA’s Scalable Hierarchical Aggregation and Reduction Protocol (SHARP) offloads certain computational tasks directly to the network switches. For example, during an all-reduce operation where gradients from all GPUs need to be summed, the switch can perform the summation in-flight as data passes through it. This reduces the total amount of data traversing the network and frees up GPU cycles.
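The effect of in-network reduction can be illustrated with a toy two-level tree in which each leaf switch sums the gradients of its own GPUs and forwards a single vector upward. This is a conceptual sketch in plain Python, not the SHARP protocol itself; `switch_reduce` and `tree_allreduce` are hypothetical names.

```python
def switch_reduce(gradients):
    """Element-wise sum of equal-length gradient vectors, as a switch would."""
    return [sum(values) for values in zip(*gradients)]


def tree_allreduce(groups):
    """Two-level reduction: leaf switches sum their GPUs, the root sums leaves."""
    leaf_sums = [switch_reduce(group) for group in groups]  # one vector per uplink
    total = switch_reduce(leaf_sums)                        # root combines leaves
    return total, len(leaf_sums)


groups = [
    [[1.0, 2.0], [3.0, 4.0]],  # GPUs under leaf switch 0
    [[5.0, 6.0], [7.0, 8.0]],  # GPUs under leaf switch 1
]
total, uplink_vectors = tree_allreduce(groups)
print(total)           # [16.0, 20.0]
print(uplink_vectors)  # 2 vectors cross the uplinks instead of 4
```

In this example four gradient vectors enter the leaf switches but only two travel further up the tree, which is where the reduction in network traffic comes from.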

The Software Stack: Adapting to a Static Network

Unlike Google’s stack, which changes the network to fit the program, NVIDIA’s software adapts the program to fit the fixed network.

PyTorch and CUDA: Most developers interact with the system through high-level frameworks like PyTorch, which are built on NVIDIA’s CUDA platform.

NCCL (NVIDIA Collective Communications Library): For multi-GPU communication, the key software is NCCL. When a job starts, NCCL automatically detects the physical network topology—identifying fast NVLink connections, slower PCIe links, and inter-node InfiniBand paths. Based on this map, its advanced algorithms dynamically select the most efficient communication strategy (e.g., a ring or tree algorithm) for each collective operation at runtime. This process is completely transparent to the developer, allowing code written in PyTorch to run with near-optimal performance on any NVIDIA hardware configuration.
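To give a feel for why the best algorithm depends on the situation, the sketch below compares ring and tree all-reduce under a textbook latency-bandwidth cost model. It is not NCCL’s actual selection logic: the formulas are simplified (the tree is not pipelined), and the default latency and bandwidth values are assumptions chosen only for illustration.

```python
import math


def ring_time(size_bytes, n, latency_s, bandwidth_bps):
    """Ring all-reduce: 2(n-1) steps, each moving size/n bytes."""
    steps = 2 * (n - 1)
    return steps * latency_s + steps * (size_bytes / n) / bandwidth_bps


def tree_time(size_bytes, n, latency_s, bandwidth_bps):
    """Binary tree: reduce up then broadcast down, full message per level."""
    levels = math.ceil(math.log2(n))
    return 2 * levels * (latency_s + size_bytes / bandwidth_bps)


def pick_algorithm(size_bytes, n, latency_s=5e-6, bandwidth_bps=50e9):
    """Return the cheaper algorithm under this simplified model (n >= 2)."""
    ring = ring_time(size_bytes, n, latency_s, bandwidth_bps)
    tree = tree_time(size_bytes, n, latency_s, bandwidth_bps)
    return "ring" if ring <= tree else "tree"


print(pick_algorithm(1024, 8))    # tree
print(pick_algorithm(10**9, 8))   # ring
```

Under these assumptions small messages are dominated by per-step latency, which favours the tree’s fewer steps, while large messages are dominated by bandwidth, which favours the ring.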

ref: https://developer.nvidia.com/nccl

So what does this all mean?

The primary benefit is flexibility. The packet-switched fabric can handle diverse, bursty, and unpredictable traffic from many users simultaneously, making it ideal for multi-tenant cloud environments. It supports a wide range of frameworks, most notably PyTorch, which is dominant in the AI research community. This versatility makes it the go-to platform for experimentation and development.

The main trade-off is higher power consumption. Every packet must be processed by power-hungry switch electronics, making it less energy-efficient than an all-optical path. While individual packet routing is fast, the cumulative latency from buffering and processing can be higher than a direct optical circuit.

This approach benefits the widest range of use cases. It excels in multi-tenant cloud and research environments where flexibility is key. It is also the superior choice for AI development and experimentation, inference workloads, and small-to-medium scale training, where the overhead of a reconfigurable optical network would be impractical.

To summarise: Google’s OCS is about specialized efficiency for massive, monolithic tasks, while NVIDIA’s EPS is about flexible, dynamic performance for a broader range of AI workloads. That also leaves room to use a mix of accelerators suited to your enterprise needs.