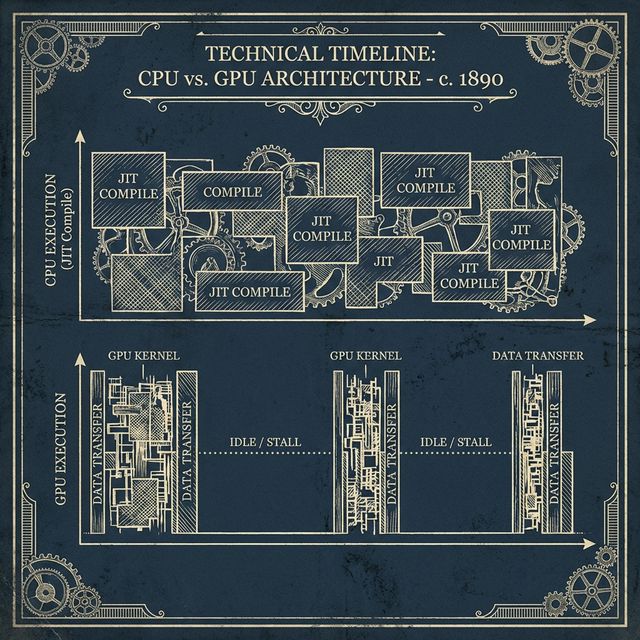

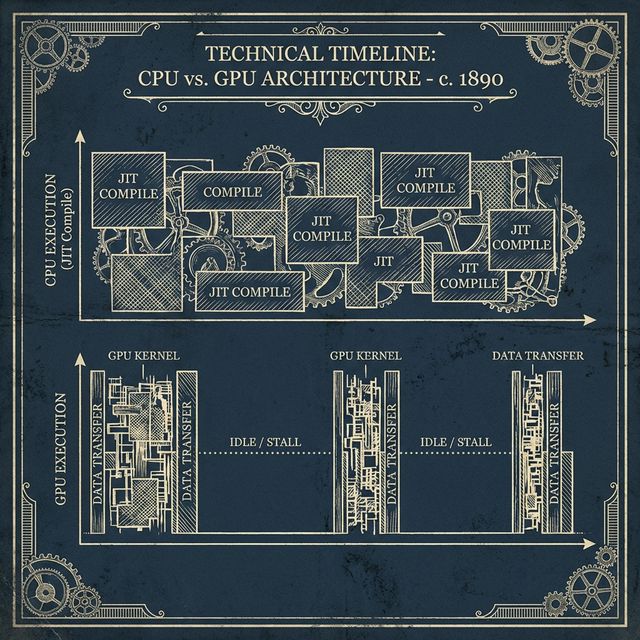

JAX XLA: Why Your GPU is Idle 40% of the Time

Recompilation is the silent killer of training throughput. If JIT compilation keeps showing up in your profiler after warm-up, you are losing money. We dive into XLA internals.

The AI industry is shifting from celebrating large compute budgets to hunting for efficiency. Your competitive advantage is no longer your GPU count, but your cost-per-inference.

FP4 isn't just 'lower precision'; it requires a fundamental rethink of how activation outliers are handled. We dive into the bit-level implementation of NVFP4, Micro-Tensor Scaling, and the new Tensor Memory hierarchy.

The competitive advantage in AI has shifted from raw GPU volume to architectural efficiency: the "Memory Wall" shows that traditional frameworks waste runtime on "data plumbing." This article explains how the compiler-first JAX AI Stack and its "Automated Megakernels" address this scaling crisis and enable breakthroughs for companies like xAI and Character.ai.

As hardware lead times and power constraints hit a ceiling, the competitive advantage in AI has shifted from chip volume to architectural efficiency. This article explores how JAX, Pallas, and Megakernels are redefining Model FLOPs Utilization (MFU) and providing the hardware-agnostic Universal Adapter needed to escape vendor lock-in.