
A2A Architectures: Tools are not just Functions (The Two-Phase Commit)

Why Agent-to-Agent (A2A) interactions and Side Effects require a 'Two-Phase Commit' for safety.

When a Large Language Model calls a tool, the standard tutorial shows it asking for the weather.

The user prompts: “Do I need an umbrella in London?” The LLM generates a tool call: get_weather(city="London"). The runtime executes the Python function, hits an API, and returns “Rainy”. The LLM reads the result and says: “Yes, you should take an umbrella.”

This is a beautiful, safe, and entirely misleading demonstration of what building production AI looks like. Querying the weather is an idempotent, read-only operation. It has zero side effects. If the LLM hallucinates and asks for the weather in “L0nd0n,” the worst that happens is a 404 error and a slightly confused retry loop.

But the industry is rapidly moving beyond these read-only chat patterns. We are entering the era of A2A (Agent-to-Agent) architectures.

In an A2A system, agents do not just retrieve information for a human reading a screen. They communicate with other autonomous agents to execute complex, multi-step business workflows. They write to databases. They spin up cloud infrastructure. They refund customers. They execute financial trades.

When you shift to workflows like refund_customer(id="123"), delete_database(name="prod"), or book_flight(price=500), you are handing a non-deterministic, probabilistic machine the keys to your production environment.

You cannot treat these operations like simple Python function calls. If you do, it is not a matter of if your agent will cause a catastrophic outage or financial loss, but when. You have to treat these tool calls as Distributed Transactions.

The Hallucination and the “Oops” Factor

Large Language Models are fundamentally probabilistic: they are highly advanced prediction engines. They do not possess a formal logical understanding of the code they are executing. They predict the next most likely token. Most of the time, with advanced models like GPT-4, Claude 3.5 Sonnet, or Gemini 1.5 Pro, these predictions are astoundingly accurate.

However, they are not perfect. Models suffer from attention decay over long context windows. They can conflate entity IDs. They can misinterpret ambiguous human instructions.

Imagine an A2A customer support workflow. The “Intake Agent” receives a frustrated email from a customer, extracts the intent, and passes a structured payload to the “Resolution Agent.” The Resolution Agent decides that a refund is warranted.

If the Resolution Agent hallucinates a refund_customer call with the wrong user ID, or mistakenly adds a zero to the refund amount, and you are using a standard “Tool Use” pattern, the API call fires immediately. The money leaves the Stripe account before anyone can blink. There is no confirmation dialog. There is no undo button. The “Oops” factor in A2A systems is instantaneous and irreversible.

We need a safety layer that acknowledges the probabilistic nature of the caller. We need to borrow a battle-tested concept from distributed databases and adapt it for the age of AI: The Two-Phase Commit (2PC).

Distributed Systems 101: The Two-Phase Commit

In distributed computing, when multiple independent nodes need to agree on a transaction that spans them (like debiting an account on Node A and crediting an account on Node B), they use an atomic commit protocol so that the transaction takes effect on every node or on none, preserving ACID properties (Atomicity, Consistency, Isolation, Durability). The most common such protocol is the Two-Phase Commit.

- Phase 1 (Prepare): The coordinator asks all participating nodes if they are ready to commit the transaction. The nodes check their constraints, reserve the necessary resources, and reply with a “Yes” or “No.” They do not commit the data yet.

- Phase 2 (Commit/Rollback): If all nodes replied “Yes” in Phase 1, the coordinator sends a “Commit” message, and the changes are made permanent. If any node replied “No” (or timed out), the coordinator sends a “Rollback” message, and all nodes release their reserved resources, leaving the system state unchanged.
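The two phases above can be sketched in a few lines of Python. This is a minimal, single-process illustration, not a real distributed implementation: the `Participant` class, its `pending` field, and the `two_phase_commit` function are hypothetical names invented for this example, and timeouts, durable logs, and network failures are left out entirely.

```python
from dataclasses import dataclass


@dataclass
class Participant:
    """A hypothetical node holding a single account balance."""
    name: str
    balance: int
    pending: int = 0  # change reserved during Prepare, not yet applied

    def prepare(self, delta: int) -> bool:
        # Vote "No" if applying the change would overdraw the account.
        if self.balance + delta < 0:
            return False
        self.pending = delta  # reserve, but do not apply
        return True

    def commit(self) -> None:
        self.balance += self.pending
        self.pending = 0

    def rollback(self) -> None:
        self.pending = 0  # release the reservation, balance untouched


def two_phase_commit(changes: list[tuple[Participant, int]]) -> bool:
    """Coordinator: apply every change, or none of them."""
    # Phase 1 (Prepare): collect votes; stop at the first "No".
    prepared: list[Participant] = []
    for node, delta in changes:
        if not node.prepare(delta):
            for p in prepared:
                p.rollback()
            return False
        prepared.append(node)
    # Phase 2 (Commit): every node voted "Yes", so make it permanent.
    for node in prepared:
        node.commit()
    return True
```

A transfer of 40 from A to B would be `two_phase_commit([(a, -40), (b, 40)])`; if either node votes “No”, neither balance changes.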

To build reliable Agent-to-Agent systems, we must map this paradigm onto our LLM Tool Execution loops.

Adapting 2PC for Agentic Workflows

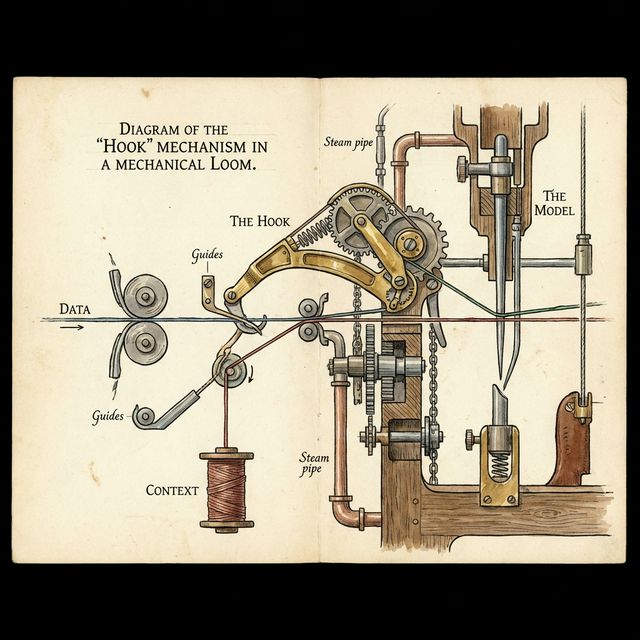

In the standard OpenAI or Anthropic tool execution flow, the model outputs the JSON for the tool call, and your runtime (whether you wrote it by hand or are using a framework like LangChain or Genkit) executes it and feeds the result back to the model.

In an A2A architecture designed for production safety, we must intercept this execution. A tool definition that mutates state should never just be an execute() function. It must be split into a plan() phase and an execute() phase.

Let’s explore how this looks in practice with an irreversible action: booking a flight.

Phase 1: Prepare (The “Semantic Dry Run”)

When the Agent outputs the intent to use the tool, book_flight(destination="LHR", seat="12A", price=500), the system intercepts this and routes it to the Plan function instead of the execution function.

The Plan phase is a semantic and technical dry run. It validates constraints and gathers context. It asks questions like:

- Does the flight to LHR exist at this price?

- Is seat 12A actually available?

- Does the user’s corporate policy allow flight bookings of $500?

Crucially, it does not charge the corporate card or finalize the booking with the airline.

Instead, the Plan function returns a Token of Intent, a structured object representing the proposed state change, back to the runtime and the Agent.

    {
      "status": "PREPARED",
      "transaction_id": "tx_flight_88921",
      "action_summary": "Book Flight UA123 to LHR, Seat 12A",
      "financial_impact": "$500.00 USD",
      "consequence_level": "IRREVERSIBLE",
      "expires_in": "300 seconds"
    }

The LLM now has this token in its context window. It knows what it is about to do, and it knows the precise parameters that have been validated by the underlying system.
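To make the Plan phase concrete, here is a minimal sketch of a plan function that runs the three checks listed earlier and returns a Token of Intent. Everything in it is an assumption for illustration: `AVAILABLE_SEATS` and `POLICY_MAX_FARE_USD` are in-memory stand-ins for the airline inventory and the corporate policy, and `TokenOfIntent` and `plan_book_flight` are hypothetical names. A real implementation would also place the temporary seat hold here.

```python
import uuid
from dataclasses import dataclass

# Hypothetical stand-ins for external systems.
AVAILABLE_SEATS = {("LHR", "12A"): 500}  # (destination, seat) -> fare in USD
POLICY_MAX_FARE_USD = 800


@dataclass(frozen=True)
class TokenOfIntent:
    status: str
    transaction_id: str
    action_summary: str
    financial_impact: str
    consequence_level: str
    expires_in_seconds: int


def plan_book_flight(destination: str, seat: str, price: int) -> TokenOfIntent:
    """Validate the proposed booking without charging or finalizing anything."""
    quoted = AVAILABLE_SEATS.get((destination, seat))
    if quoted is None:
        raise ValueError(f"Seat {seat} to {destination} is not available.")
    if quoted != price:
        raise ValueError(f"Agent proposed ${price}, but the fare is ${quoted}.")
    if price > POLICY_MAX_FARE_USD:
        raise ValueError(f"${price} exceeds the policy limit of ${POLICY_MAX_FARE_USD}.")
    # All checks passed: describe the state change, but do not perform it.
    return TokenOfIntent(
        status="PREPARED",
        transaction_id=f"tx_flight_{uuid.uuid4().hex[:8]}",
        action_summary=f"Book flight to {destination}, Seat {seat}",
        financial_impact=f"${price:.2f} USD",
        consequence_level="IRREVERSIBLE",
        expires_in_seconds=300,
    )
```

A hallucinated price or seat fails loudly in this phase, where the only cost is an error message fed back to the agent.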

Phase 2: Commit (The “Explicit Confirmation”)

Now we reach the crucial juncture. The system is in a “Prepared” state. The flight seat is placed on a temporary 5-minute hold.

To proceed, the system requires an explicit sign-off on the transaction_id. Who provides this sign-off depends on your A2A topology.

Scenario A: The Human in the Loop (HITL)

The most common approach for high-risk actions is to route the transaction_id and the action_summary to an external system, like a Slack channel or a web dashboard. A human manager sees: “Agent 47 is about to book Flight UA123 for $500. Do you approve?” If they click “Yes,” the backend calls the actual execute() function with the transaction ID.

Scenario B: The Supervisor Agent (Pure A2A)

In a fully autonomous A2A setup, you introduce a Supervisor Agent or a Constitutional Judge Model. This is a separate, potentially smaller but highly focused LLM prompt whose only job is to audit proposed transactions against a strict set of rules.

The system passes the Token of Intent to the Supervisor Agent, along with the corporate policy document. The Supervisor Agent evaluates: “Does a $500 flight to LHR violate the travel policy?” If it determines the action is safe, it generates a confirm_transaction(tx_id="tx_flight_88921") tool call.

Only when this explicit confirmation is received by the runtime does the system proceed to touch the external API and finalize the booking.
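The commit gate itself can be small. The sketch below assumes a hypothetical in-memory registry (`_prepared`) and hypothetical approver names; its point is that the runtime, not the agent that proposed the action, decides whether a confirmation counts, and that a transaction can be committed at most once.

```python
from typing import Callable

# Hypothetical registry: transaction_id -> the action to run on commit.
_prepared: dict[str, Callable[[], str]] = {}


def register_prepared(transaction_id: str, execute: Callable[[], str]) -> None:
    """Called at the end of the Plan phase to park the real action."""
    _prepared[transaction_id] = execute


def confirm_transaction(tx_id: str, approver: str,
                        allowed_approvers: frozenset[str]) -> str:
    """Run the parked action only for a known transaction and a valid approver."""
    # Check the approver first, so a rejected attempt does not consume the transaction.
    if approver not in allowed_approvers:
        raise PermissionError(f"{approver} may not approve transactions.")
    # pop() removes the entry, so a second confirmation cannot fire the action again.
    execute = _prepared.pop(tx_id, None)
    if execute is None:
        raise KeyError(f"No prepared transaction with id {tx_id}.")
    return execute()
```

Whether the approver is a human clicking “Yes” in Slack or a Supervisor Agent emitting a tool call, both paths would end at the same `confirm_transaction` entry point.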

Side Effects Require Persistent State

Implementing Two-Phase Commit logic implies a fundamental architectural shift: your agentic tools need to be stateful.

When the plan() phase generates a transaction_id, the details of that proposed API call cannot just live in the ephemeral memory of your Python script. What if the worker node crashes? What if the Human-in-the-loop takes 10 minutes to approve the Slack message, and the original LLM API request times out?

The “Prepared” transaction state must be serialized and stored in an external ledger. Depending on your scale, this could be Redis, a PostgreSQL database, or even a specialized event-sourcing system.

This state object must include:

- The transaction_id.

- The raw payload the LLM proposed.

- The validated payload from the plan() phase.

- A timestamp.

- A Time-To-Live (TTL).

The TTL is critical. Managing timeouts is standard practice for any transaction protocol. If an agent “Prepares” a flight booking but the Supervisor Agent crashes and never sends the “Commit,” we cannot leave the seat on hold forever. A background worker (a cron job or an ephemeral queue consumer) must monitor the ledger. If the confirmation does not arrive before the TTL expires, the system triggers the rollback() phase, releasing the seat hold and marking the transaction as ABORTED.
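As a rough sketch of the ledger and the timeout sweep, the following uses an in-memory dictionary where a production system would use Redis or PostgreSQL. `LedgerEntry`, `InMemoryLedger`, and `sweep_expired` are hypothetical names, and the current time is passed in explicitly so the behavior is easy to test.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class LedgerEntry:
    transaction_id: str
    raw_payload: dict        # what the LLM proposed
    validated_payload: dict  # what plan() approved
    created_at: float        # Unix timestamp in seconds
    ttl_seconds: float
    status: str = "PREPARED"


class InMemoryLedger:
    """Hypothetical stand-in for an external store; not durable."""

    def __init__(self) -> None:
        self.entries: dict[str, LedgerEntry] = {}

    def prepare(self, entry: LedgerEntry) -> None:
        self.entries[entry.transaction_id] = entry

    def sweep_expired(self, rollback: Callable[[LedgerEntry], None],
                      now: float) -> list[str]:
        """Abort every PREPARED entry whose TTL has elapsed; return their ids."""
        aborted = []
        for entry in self.entries.values():
            expired = now >= entry.created_at + entry.ttl_seconds
            if entry.status == "PREPARED" and expired:
                rollback(entry)  # e.g. release the seat hold
                entry.status = "ABORTED"
                aborted.append(entry.transaction_id)
        return aborted
```

A background worker would call `sweep_expired(release_hold, now=time.time())` on a schedule, where `release_hold` is whatever undoes the reservation made during the Plan phase.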

This is standard operating procedure for ATMs, bank transfers, and distributed microservices. It is non-negotiable for production AI Agents.

Avoiding the “Double Charge”: Idempotency in Agentic Workflows

A closely related, and equally terrifying, problem in A2A systems is the retry loop.

Because LLM API calls frequently fail due to rate limits (HTTP 429), context length limits, or generic server errors (HTTP 500), agentic runtimes are built with aggressive retry logic. If a model call fails, the system backs off and tries again.

But what if the LLM successfully generated the refund_customer tool call, the network request reached your server, your server processed the refund via Stripe, but the HTTP response back to the LLM timed out?

The LLM (or the orchestrating framework) thinks the call failed. It retries. It generates refund_customer again. Your customer just got refunded twice.

This is where Idempotency Keys become mandatory.

Every tool call generated by an agent that mutates state must include a unique, deterministic Idempotency Key. In an A2A workflow, the agent must generate this key before it tries to execute the action, often deriving it from a hash of the current task, the timestamp block, and the target entity.

When the tool executes, your backend infrastructure checks the Idempotency Key against the database.

- If the key is new: Execute the refund, store the key as “completed”, and return success.

- If the key exists and is marked “completed”: Do not touch Stripe. Simply return the cached success response back to the agent.

This guarantees that no matter how many times the probabilistic runtime retries a network request, the side effect occurs exactly once.
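The key derivation and the lookup described above might look like the sketch below. The names `idempotency_key` and `IdempotentExecutor` are hypothetical, the store is an in-memory dictionary, and the timestamp block is left out for brevity. A real backend would need an atomic insert (for example a unique constraint) so that two concurrent retries cannot both see the key as new.

```python
import hashlib
import json
from typing import Any, Callable


def idempotency_key(task_id: str, action: str, target: str, params: dict) -> str:
    """Derive a deterministic key: the same logical request always hashes the same."""
    canonical = json.dumps(
        {"task": task_id, "action": action, "target": target, "params": params},
        sort_keys=True,  # key order must not change the hash
    )
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


class IdempotentExecutor:
    """Runs a side effect once per key and replays the cached result afterwards."""

    def __init__(self) -> None:
        self._completed: dict[str, Any] = {}

    def run(self, key: str, side_effect: Callable[[], Any]) -> Any:
        if key in self._completed:
            # Retry of a finished call: return the cached response, touch nothing.
            return self._completed[key]
        result = side_effect()
        self._completed[key] = result
        return result
```

Because the key is derived from the task and its target rather than generated randomly, a retried tool call maps to the same key even if the model regenerates the call from scratch.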

Implementing the Transaction Wrapper in Code

You do not want your engineers writing this plan/commit/rollback boilerplate inside every single tool they create. That leads to inconsistent security and massive technical debt. You need to build this into the fabric of your agentic framework using decorators or middleware.

Here is a conceptual example of how a Transaction Decorator simplifies this for the tool author:

    from my_agent_framework import transactional, TransactionContext

    # gcp_client is assumed to be a pre-configured cloud API client created elsewhere.


    @transactional(risk_level="HIGH", required_approver="SUPERVISOR")
    def terminate_cloud_instance(context: TransactionContext, instance_id: str):
        """
        Terminates a cloud instance permanently.
        This function only executes during Phase 2 (Commit).
        """
        gcp_client.compute().instances().delete(
            project='my-project',
            zone='us-central1-a',
            instance=instance_id
        ).execute()
        return f"Instance {instance_id} successfully terminated."


    def plan_terminate_cloud_instance(instance_id: str):
        """
        The dry-run phase. Validates constraints.
        """
        instance = gcp_client.compute().instances().get(
            project='my-project',
            zone='us-central1-a',
            instance=instance_id
        ).execute()
        if instance['status'] != 'RUNNING':
            raise ValueError(f"Instance is already {instance['status']}.")
        # Return the warning string that the Agent/Supervisor will see
        return f"CRITICAL WARNING: You are attempting to permanently destroy {instance['name']} (IP: {instance['networkInterfaces'][0]['networkIP']}). This action cannot be undone."

In an advanced framework, the @transactional decorator orchestrates the entire dance. When the LLM outputs terminate_cloud_instance(instance_id="web-server-01"), the decorator intercepts it. It routes the call to the plan_ equivalent. It generates the transaction_id, logs the state to Redis with a TTL, formats the “CRITICAL WARNING” into the Token of Intent, and injects it back into the LLM’s context window, pausing the main execution thread until a valid Commit signal is received.

The engineer writing the tool focuses entirely on the business logic. The infrastructure handles the transactional guarantees.

The Philosophy of Distrust

Building A2A systems requires a fundamental shift in perspective.

When you write a traditional Python script to automate a task, you inherently trust the logic because you wrote the if/else statements yourself. You know exactly what the code will do given a specific input. The execution path is deterministic.

When you replace that Python script with a Large Language Model instructed via natural language, you forfeit determinism. You are no longer executing instructions; you are evaluating probabilities.

Therefore, the only safe way to architect an A2A system that can touch production data is to adopt a philosophy of Zero Trust regarding the agent’s intent.

You must treat the Agent not as a trusted internal module of your codebase, but as a potentially dangerous, albeit well-intentioned, external microservice. It is a client. And just as you would never allow a web frontend to drop a database table just because the JSON payload asked to, you must never allow an Agent to mutate state without routing that request through a hardened, deterministic transactional layer.

Tools are not just functions. They are the Agent’s hands. And if you give a probabilistic robot a hammer, you had better architect your system to make absolutely certain it asks for permission before it swings.

If your agent can change the world around it (if it can write, delete, book, or pay), it must operate with unyielding transactional integrity.

Prepare. Verify. Then, and only then, Commit.