
MCP: The End of the API Wrapper

We analyze the JSON-RPC internals of the Model Context Protocol (MCP) and explain why its 'Context Exchange' architecture renders traditional integration code obsolete.

Here is the dirty secret about most of the AI agents I built in 2025: 80% of the code was “glue.” Every time I wanted the agent to touch a new system, I had to write a Python function, wrap the API, handle OAuth, and hand-write a prompt describing the schema.

- “Write a wrapper for the Linear API.”

- “Write a wrapper for the Postgres queries.”

It was brittle. If Linear changed their API, the agent broke. If the LLM hallucinated a parameter, the function crashed.

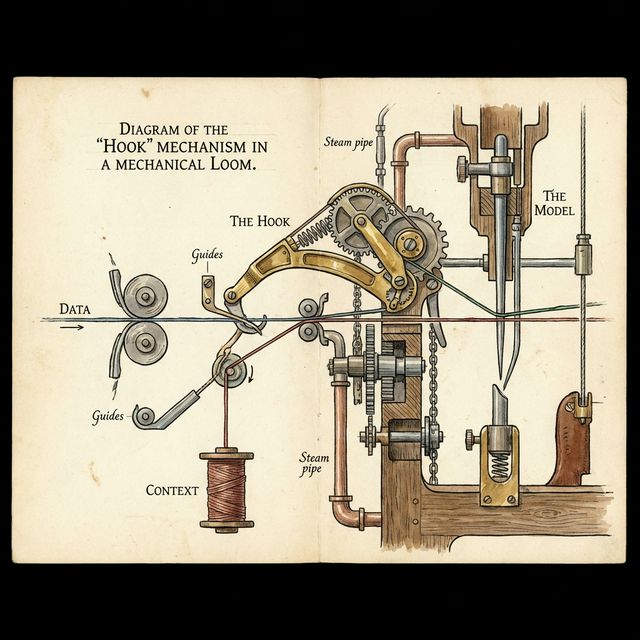

MCP: The SQL for Context

The Model Context Protocol (MCP) changes the paradigm. Instead of the Agent “learning” the API, the System “exposes” its context. It is an open standard, built on JSON-RPC 2.0, that decouples the Model from the Data Source.

The Architecture: Client-Host-Server

MCP operates on a strict topology:

- MCP Server: A lightweight process (local or remote) that implements the spec. It exposes `resources`, `tools`, and `prompts`.

- MCP Host: The application running the LLM (e.g., Claude Desktop, Zed IDE, or your custom Agent).

- MCP Client: The protocol layer inside the Host that connects it to a Server; each Client holds a 1:1 connection with one Server.

The Protocol Internals

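Under the hood, it’s not magic; it’s just JSON-RPC. When a Host connects to a Server, its Client sends an `initialize` request declaring the protocol version and its own capabilities. The Server responds with its capabilities, and the Client confirms with a `notifications/initialized` notification.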
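To make the handshake concrete, here is a minimal sketch of the three messages involved, built as plain Python dicts. It assumes the `2024-11-05` protocol revision; the client and server names are hypothetical placeholders, and a real Host would normally let an MCP SDK build these messages.

```python
import json

# Assumed protocol revision; a real client sends the latest one it supports.
PROTOCOL_VERSION = "2024-11-05"


def make_request(req_id, method, params=None):
    """JSON-RPC 2.0 request: carries an id and expects a response."""
    msg = {"jsonrpc": "2.0", "id": req_id, "method": method}
    if params is not None:
        msg["params"] = params
    return msg


def make_notification(method, params=None):
    """JSON-RPC 2.0 notification: no id, so no response is expected."""
    msg = {"jsonrpc": "2.0", "method": method}
    if params is not None:
        msg["params"] = params
    return msg


# 1. Client -> Server: propose a protocol version and declare client capabilities.
initialize = make_request(1, "initialize", {
    "protocolVersion": PROTOCOL_VERSION,
    "capabilities": {},
    "clientInfo": {"name": "example-host", "version": "0.1.0"},  # hypothetical
})

# 2. Server -> Client: a response advertising tools and resources support.
initialize_result = {
    "jsonrpc": "2.0",
    "id": 1,  # echoes the request id so the Client can match it
    "result": {
        "protocolVersion": PROTOCOL_VERSION,
        "capabilities": {"tools": {}, "resources": {}},
        "serverInfo": {"name": "example-server", "version": "0.1.0"},  # hypothetical
    },
}

# 3. Client -> Server: confirm the handshake is complete.
initialized = make_notification("notifications/initialized")

for message in (initialize, initialize_result, initialized):
    print(json.dumps(message))
```

The capabilities objects are what let each side discover, at connect time, which of the method families below the other side actually supports.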

1. Resources: resources/list & resources/read

Resources are passive data (files, logs, database rows).

- Old Way: You fetch the data, format it as text, and paste it into the prompt.

- MCP Approach: The Server exposes resources under a URI scheme (e.g., `postgres://db/table`) and lists them via `resources/list`. The Agent “sees” this resource. When it needs it, it calls `resources/read`.
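A server-side view of these two methods might look like the sketch below. The resource store, its single row, and the error code for unknown URIs are assumptions for illustration; only the method names and the `resources`/`contents` result shapes follow MCP.

```python
import json

# Hypothetical in-memory resource store; a real server would query Postgres.
RESOURCES = {
    "postgres://db/table": {
        "name": "table",
        "mimeType": "application/json",
        "text": json.dumps([{"id": 1, "status": "open"}]),  # illustrative row
    },
}


def ok(req_id, result):
    return {"jsonrpc": "2.0", "id": req_id, "result": result}


def err(req_id, code, message):
    return {"jsonrpc": "2.0", "id": req_id, "error": {"code": code, "message": message}}


def handle(request):
    """Answer resources/list and resources/read (sketch: no auth, no paging)."""
    method, req_id = request["method"], request["id"]
    if method == "resources/list":
        # Advertise metadata only; contents are fetched on demand.
        listing = [{"uri": uri, "name": r["name"], "mimeType": r["mimeType"]}
                   for uri, r in RESOURCES.items()]
        return ok(req_id, {"resources": listing})
    if method == "resources/read":
        uri = request.get("params", {}).get("uri")
        r = RESOURCES.get(uri)
        if r is None:
            # Generic JSON-RPC "Invalid params" code (assumption: the MCP spec
            # may recommend a more specific code for unknown resources).
            return err(req_id, -32602, f"Unknown resource: {uri}")
        content = {"uri": uri, "mimeType": r["mimeType"], "text": r["text"]}
        return ok(req_id, {"contents": [content]})
    return err(req_id, -32601, f"Method not found: {method}")  # standard JSON-RPC code


listing = handle({"jsonrpc": "2.0", "id": 2, "method": "resources/list"})
reading = handle({"jsonrpc": "2.0", "id": 3, "method": "resources/read",
                  "params": {"uri": "postgres://db/table"}})
print(listing["result"]["resources"][0]["uri"])  # postgres://db/table
print(reading["result"]["contents"][0]["text"])
```

Splitting discovery (`list`) from retrieval (`read`) is what keeps the prompt small: the Agent only pays for the data it actually asks for.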

2. Tools: tools/list & tools/call

Tools are executable functions.

- Old Way: You write a Python function and pass its docstring to OpenAI/Gemini function calling.

- MCP Approach: The Server advertises its tools (e.g., `execute_sql_query`) in response to `tools/list`. The Host dynamically registers them. The LLM invokes them via `tools/call`.
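Here is a sketch of a server answering `tools/list` and `tools/call` for the `execute_sql_query` example. The description, input schema, and the read-only `run_query` stand-in are hypothetical. It also shows the contrast with the "hallucinated parameter" problem from the intro: a bad call yields a structured error instead of a crash. Protocol problems (unknown tool, missing arguments) come back as JSON-RPC errors, while execution failures come back as a normal result flagged `isError`.

```python
# Tool descriptor a server might return from tools/list. The name is the
# article's example; the description and schema are assumptions.
SQL_TOOL = {
    "name": "execute_sql_query",
    "description": "Run a read-only SQL query.",
    "inputSchema": {
        "type": "object",
        "properties": {"query": {"type": "string"}},
        "required": ["query"],
    },
}


def run_query(query):
    """Hypothetical stand-in for a real database call (read-only policy)."""
    if not query.lstrip().upper().startswith("SELECT"):
        raise ValueError("only SELECT statements are allowed")
    return f"ran: {query}"


def handle(request):
    req_id, method = request["id"], request["method"]
    if method == "tools/list":
        return {"jsonrpc": "2.0", "id": req_id, "result": {"tools": [SQL_TOOL]}}
    if method == "tools/call":
        params = request.get("params", {})
        args = params.get("arguments", {})
        missing = [k for k in SQL_TOOL["inputSchema"]["required"] if k not in args]
        if params.get("name") != SQL_TOOL["name"] or missing:
            # Unknown tool or bad arguments: a protocol-level error.
            return {"jsonrpc": "2.0", "id": req_id,
                    "error": {"code": -32602, "message": f"Invalid tool call; missing={missing}"}}
        try:
            text, is_error = run_query(args["query"]), False
        except ValueError as exc:
            # Execution failure: a normal result flagged isError, so the model
            # can read the message and correct itself.
            text, is_error = str(exc), True
        return {"jsonrpc": "2.0", "id": req_id,
                "result": {"content": [{"type": "text", "text": text}], "isError": is_error}}
    return {"jsonrpc": "2.0", "id": req_id,
            "error": {"code": -32601, "message": f"Method not found: {method}"}}


call = {"jsonrpc": "2.0", "id": 5, "method": "tools/call",
        "params": {"name": "execute_sql_query", "arguments": {"query": "SELECT 1"}}}
print(handle(call)["result"])
```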

Transport Layer: STDIO vs SSE

One of the smartest design choices in MCP is that it is transport-agnostic: the same JSON-RPC messages can travel over different transports.

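- STDIO (Standard Input/Output): For local agents. The Host spawns the Server as a subprocess and talks over `stdin`/`stdout`. Zero networking overhead. Perfect for local dev tools (Git, Grep).

- SSE (Server-Sent Events): For remote agents. The Server runs as a web service. The Host sends messages via HTTP POST and receives responses and notifications over an SSE stream, which together provide bidirectional messaging. (Newer revisions of the spec replace this transport with "Streamable HTTP", which keeps SSE as an optional streaming mechanism.)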
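On the STDIO transport, messages are newline-delimited JSON: one message per line, with no raw newlines inside a message. The sketch below shows that framing with a toy server loop that only answers MCP's `ping` utility; in-memory streams stand in for the subprocess pipes, and in a real server you would pass `sys.stdin` and `sys.stdout` instead.

```python
import io
import json


def write_message(stream, message):
    """Write one JSON-RPC message as a single line.

    json.dumps without indent emits no raw newlines (newlines inside
    strings are escaped), so each message stays on exactly one line.
    """
    stream.write(json.dumps(message) + "\n")
    stream.flush()


def read_messages(stream):
    """Yield one parsed message per non-empty line."""
    for line in stream:
        line = line.strip()
        if line:
            yield json.loads(line)


def serve(stdin, stdout):
    """Toy server loop: answers `ping`, rejects other requests."""
    for msg in read_messages(stdin):
        if "id" not in msg:
            continue  # notifications never get a response
        if msg.get("method") == "ping":
            write_message(stdout, {"jsonrpc": "2.0", "id": msg["id"], "result": {}})
        else:
            write_message(stdout, {"jsonrpc": "2.0", "id": msg["id"],
                                   "error": {"code": -32601, "message": "Method not found"}})


# Simulate the subprocess pipes with in-memory streams.
fake_stdin = io.StringIO(
    json.dumps({"jsonrpc": "2.0", "id": 1, "method": "ping"}) + "\n"
    + json.dumps({"jsonrpc": "2.0", "method": "notifications/initialized"}) + "\n"
)
fake_stdout = io.StringIO()
serve(fake_stdin, fake_stdout)
print(fake_stdout.getvalue())
```

Because the framing is just lines of JSON, there is no port to open and no HTTP stack to configure, which is where the "zero networking overhead" comes from.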

Security & The Human Approval Flow

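The biggest risk with Agents is Side Effects (e.g., “Delete production database”). MCP addresses this with a human-in-the-loop model: the spec says a human should always be able to deny tool invocations. Because every `tools/call` passes through the Host as a structured JSON-RPC message, the Host can intercept it and present it to the user before it runs: “Agent wants to call delete_user(id=5). Allow?” The approval prompt itself is the Host's job rather than a separate protocol message, but the protocol makes every invocation a natural checkpoint. (Sampling, a related feature, lets Servers request LLM completions through the Host, again with the user able to review them.)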
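A Host-side approval gate could be as small as the sketch below. The `review` function, the `approve` callback, and the `-32000` denial code (taken from JSON-RPC's implementation-defined range) are all hypothetical choices for illustration, not part of any MCP SDK.

```python
def review(message, approve):
    """Decide what the Host does with an outgoing message.

    Returns ("forward", message) to send it on to the Server, or
    ("deny", response) with an error to hand back to the model instead.
    `approve` is a hypothetical callback (a UI dialog in a real Host).
    """
    if message.get("method") != "tools/call":
        return "forward", message  # this sketch only gates tool invocations
    params = message["params"]
    args = ", ".join(f"{k}={v!r}" for k, v in params.get("arguments", {}).items())
    if approve(f"Agent wants to call {params['name']}({args}). Allow?"):
        return "forward", message
    # -32000 comes from JSON-RPC's implementation-defined range; the choice is arbitrary.
    denied = {"jsonrpc": "2.0", "id": message["id"],
              "error": {"code": -32000, "message": "Tool call denied by user"}}
    return "deny", denied


def always_deny(prompt):
    print(prompt)  # a real Host would show a confirmation dialog here
    return False


call = {"jsonrpc": "2.0", "id": 7, "method": "tools/call",
        "params": {"name": "delete_user", "arguments": {"id": 5}}}
action, payload = review(call, always_deny)
print(action)  # deny
```

Returning a well-formed error instead of silently dropping the call lets the model see that the user refused and adjust its plan.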

Conclusion

We are moving from “API Integration” to “Context Exposure.” The winning architecture for 2026 is not “One Giant Agent.” It is a Federation of MCP Servers.

- A `finance-mcp-server`.

- A `production-logs-mcp-server`.

- A `codebase-mcp-server`.

Your Agent simply connects to the servers it needs, like mounting a USB drive.
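The federation idea can be sketched as a Host that keeps one connection per server, merges their tool catalogs, and routes each call back to the server that owns the tool. The servers here are in-process fakes, the tool names (`get_balance`, `tail`, `search`) are hypothetical, and the `server.tool` naming is one possible way to avoid collisions between servers, not something MCP mandates.

```python
class FakeServer:
    """In-process stand-in for one MCP server connection (hypothetical).

    A real Host would reach each server through its own Client over STDIO or HTTP.
    """

    def __init__(self, tools):
        self.tools = tools  # tool name -> Python callable

    def request(self, method, params=None):
        if method == "tools/list":
            return {"tools": [{"name": name, "inputSchema": {"type": "object"}}
                              for name in self.tools]}
        if method == "tools/call":
            text = self.tools[params["name"]](**params.get("arguments", {}))
            return {"content": [{"type": "text", "text": text}], "isError": False}
        raise ValueError(f"unsupported method: {method}")


class Host:
    """Holds one connection per server and routes tool calls to the right one."""

    def __init__(self, servers):
        self.servers = servers  # server name -> connection
        self.routes = {}        # "server.tool" -> (server name, tool name)

    def discover(self):
        """Merge every server's tools/list into one namespaced catalog."""
        for server_name, conn in self.servers.items():
            for tool in conn.request("tools/list")["tools"]:
                self.routes[f"{server_name}.{tool['name']}"] = (server_name, tool["name"])
        return sorted(self.routes)

    def call(self, qualified_name, arguments):
        server_name, tool_name = self.routes[qualified_name]
        return self.servers[server_name].request(
            "tools/call", {"name": tool_name, "arguments": arguments})


host = Host({
    "finance-mcp-server": FakeServer({"get_balance": lambda account: f"balance of {account}"}),
    "production-logs-mcp-server": FakeServer({"tail": lambda service: f"latest logs for {service}"}),
    "codebase-mcp-server": FakeServer({"search": lambda term: f"matches for {term}"}),
})
print(host.discover())
print(host.call("codebase-mcp-server.search", {"term": "initialize"})["content"][0]["text"])
# matches for initialize
```

Adding a capability then means adding one entry to the server map; none of the existing servers or the Agent's own code has to change.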