AI at Scale

Getting the most out of your GPUs using MIG

Understanding how to partition a single GPU into multiple isolated instances for cost-efficient AI workloads, with a deep dive into NVIDIA's MIG technology and the architectural differences between GKE and EKS.

Fractional GPUs in the Cloud

Introduction

The NVIDIA RTX PRO 6000 Blackwell is quite a beast. It delivers 125 TFLOPS of FP32 compute and a massive 96GB of GDDR7 memory. For training frontier models, you need every ounce of that power.

But what happens when your workload isn’t a frontier model?

- What about a developer who needs a GPU for 5 minutes of unit testing?

- What about running an 8B-parameter Llama 3 model that needs only about 16GB of VRAM?

- What about a microservice that runs inference on occasional user uploads?

In these scenarios, assigning a full RTX PRO 6000 is economically indefensible. You are utilizing perhaps 10-15% of the hardware while paying for 100% of it. The remaining 85% isn’t just idle; it’s wasted capital.

Fractional GPUs solve this problem by partitioning a single physical GPU into multiple, fully isolated instances. Google Cloud’s G4 VMs support this through NVIDIA’s Multi-Instance GPU (MIG) technology.

This guide covers:

- The architecture of hardware-level GPU isolation.

- Correct implementation on Google Cloud G4 VMs (including critical RTX-specific steps).

- A comparative analysis of MIG on GKE vs. Amazon EKS.

- Real-world sizing strategies.

The Problem with Traditional GPU Sharing

Historically, if you wanted to share a GPU between two applications, you had to rely on Time-Slicing (Temporal Sharing).

The “Noisy Neighbor” Trap

Time-slicing works like a CPU scheduler: Process A runs for a few milliseconds, pauses, and then Process B runs. While this provides concurrency, it does not provide isolation.

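- Memory Risk: Both processes draw from the same pool of GPU memory, and nothing enforces a per-process limit. If Process A has a memory leak, it can exhaust VRAM and cause Process B’s allocations to fail with Out-Of-Memory (OOM) errors; a fatal GPU fault in one process can also take down every other process on the device.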

- Latency Jitter: If Process A initiates a heavy compute kernel, Process B has to wait in line. This creates unpredictable latency spikes (P99 latency), which is unacceptable for real-time inference.

Hardware Partitioning

We don’t want to share the GPU; we want to slice it. We need the silicon to behave as if it were physically cut into smaller pieces, each with its own dedicated memory, cache, and compute cores. This is exactly what NVIDIA MIG delivers.

NVIDIA MIG Architecture Deep Dive

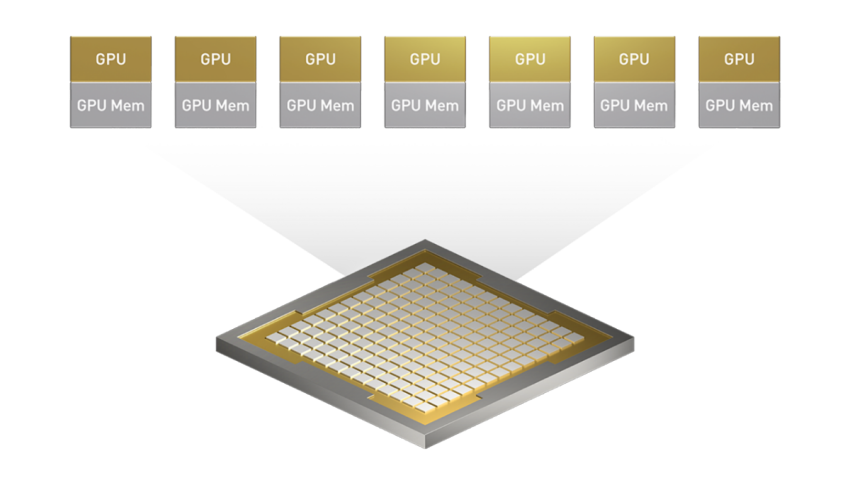

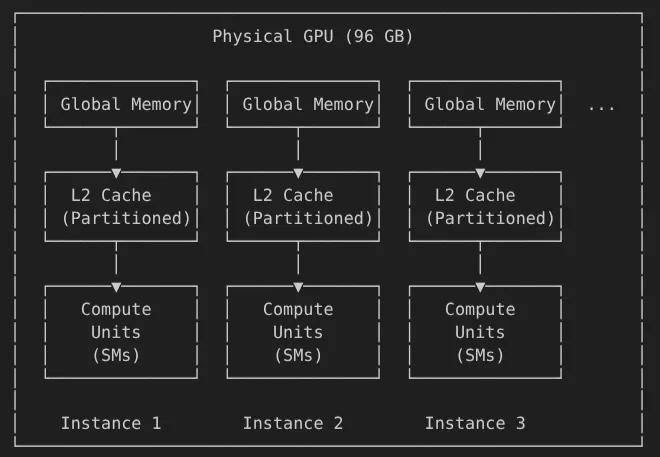

MIG is not software virtualization (like vGPU); it is a physical partitioning of the GPU’s internal resources.

When you configure MIG on an RTX PRO 6000, the hardware isolates the internal data paths to ensure total predictability.

1. Compute Isolation (SMs)

The GPU’s Streaming Multiprocessors (SMs) are hard-assigned to specific instances. If the GPU has 142 SMs and you create a partition using 25% of the device, a fixed set of roughly 35 SMs (142 × 0.25 = 35.5) is dedicated to that partition. A workload running in Partition A physically cannot execute instructions on the SMs belonging to Partition B.

2. Memory Isolation

The 96GB of GDDR7 memory is addressed in contiguous blocks. Partition A might own addresses 0x0000 to 0x2000. It is physically impossible for it to read or write to Partition B’s memory range. This provides fault isolation—if Partition A crashes or segfaults, Partition B keeps running without a hiccup.

3. L2 Cache Partitioning

This is the “secret sauce” of MIG performance. The L2 cache is physically split. This prevents Cache Thrashing, where a memory-intensive job in one partition evicts the hot data of a job in another partition. This guarantees that your inference latency remains stable, regardless of what the other “neighbors” on the GPU are doing.

Supported Profiles

Unlike the A100, which supports up to 7 instances, the RTX PRO 6000 supports up to 4. Profile names follow the pattern `<N>g.<M>gb`, where N is the number of compute slices and M is the memory in GB:

| Profile | Compute Share | Memory | Use Case |
|---|---|---|---|
| 1g.24gb | 1/4 (25%) | 24 GB | Light inference (Llama-7B), Dev, CI/CD |
| 2g.48gb | 2/4 (50%) | 48 GB | Medium training, Large inference (Llama-70B 4-bit) |
| 3g.72gb | 3/4 (75%) | 72 GB | Heavy workloads + sidecar monitor |
| 4g.96gb | 4/4 (100%) | 96 GB | Entire GPU as a single MIG instance |
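The table maps naturally onto a sizing rule: estimate a model's memory footprint, then take the smallest profile that holds it. A rough Python sketch; the 20% allowance for KV cache and activations and the 1GB per-slice MIG reservation are assumptions, and the profile list is copied from the table above, so confirm it on your VM with `nvidia-smi mig -lgip`.

```python
from typing import Optional

# Profiles from the table above: name -> nominal memory in GB.
PROFILES = {"1g.24gb": 24, "2g.48gb": 48, "3g.72gb": 72, "4g.96gb": 96}

# Assumed memory lost to MIG management per slice (roughly 24GB -> 23GB usable).
MIG_OVERHEAD_GB = 1.0


def estimate_vram_gb(params_billions: float, bits_per_param: int, overhead: float = 1.2) -> float:
    """Weights (billions of params x bytes per param = GB) plus an assumed 20% for KV cache/activations."""
    weights_gb = params_billions * bits_per_param / 8
    return weights_gb * overhead


def smallest_profile(required_gb: float) -> Optional[str]:
    """Smallest profile whose usable memory covers the requirement, or None if none does."""
    for name, nominal_gb in sorted(PROFILES.items(), key=lambda item: item[1]):
        if required_gb <= nominal_gb - MIG_OVERHEAD_GB:
            return name
    return None


if __name__ == "__main__":
    print(smallest_profile(estimate_vram_gb(8, 16)))  # 8B at FP16: ~19 GB -> 1g.24gb
    print(smallest_profile(estimate_vram_gb(70, 4)))  # 70B at 4-bit: ~42 GB -> 2g.48gb
```

Real footprints depend on context length, batch size, and serving framework, so treat the overhead factor as a starting point to measure against.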

Implementing MIG on Google Cloud G4 VMs

The Google Cloud G4 VMs, powered by RTX PRO 6000 Blackwell GPUs, support up to 4 MIG instances.

1. Enable MIG Mode

Once the VM comes back online, enable MIG at the driver level.

```bash
sudo nvidia-smi -i 0 -mig 1

# Reset GPU 0 to apply the change (an alternative to another reboot)
sudo nvidia-smi --gpu-reset -i 0
```
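To confirm the mode switch actually took effect, you can compare the current and pending MIG mode that `nvidia-smi` reports through its `mig.mode.current` and `mig.mode.pending` query fields. A minimal Python sketch, assuming `nvidia-smi` is on the PATH; the parsing is split out so it can be checked without a GPU.

```python
import subprocess


def parse_mig_mode(csv_line: str) -> tuple[str, str]:
    """Split a line such as 'Disabled, Enabled' into (current, pending)."""
    current, pending = (field.strip() for field in csv_line.split(","))
    return current, pending


def mig_mode(gpu_index: int = 0) -> tuple[str, str]:
    """Query the current and pending MIG mode of one GPU through nvidia-smi."""
    out = subprocess.run(
        ["nvidia-smi", "-i", str(gpu_index),
         "--query-gpu=mig.mode.current,mig.mode.pending",
         "--format=csv,noheader"],
        capture_output=True, text=True, check=True,
    ).stdout
    return parse_mig_mode(out.strip().splitlines()[0])


def needs_reset(current: str, pending: str) -> bool:
    """A staged change (pending differs from current) applies only after a GPU reset or reboot."""
    return current != pending
```

On the VM, `needs_reset(*mig_mode(0))` returning `True` after `-mig 1` means the reset step has not been applied yet.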

2. Create Instances (The Efficient Way)

You create instances using “Profiles.” The RTX PRO 6000 uses profiles like 1g.24gb (1 compute slice, 24GB memory).

- Note: Use the `-C` flag to create the Compute Instance automatically. Without it, you only create the “shell” (the GPU Instance) and have to run a second command to fill it.

```bash
# Create four 24GB partitions in a single command
sudo nvidia-smi mig -i 0 -cgi 1g.24gb,1g.24gb,1g.24gb,1g.24gb -C

# Verify the creation
nvidia-smi -L
```

Output:

```
GPU 0: NVIDIA RTX PRO 6000 (UUID: GPU-...)
  MIG 1g.24gb Device 0: (UUID: MIG-...)
  MIG 1g.24gb Device 1: (UUID: MIG-...)
  MIG 1g.24gb Device 2: (UUID: MIG-...)
  MIG 1g.24gb Device 3: (UUID: MIG-...)
```
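Outside Kubernetes, the `MIG-...` UUIDs in this listing are what you pass to `CUDA_VISIBLE_DEVICES` to pin a process to one slice. A minimal Python sketch that extracts them; the regular expression assumes the line layout shown above and may need adjusting for other driver versions.

```python
import re
import subprocess

# Matches lines like "  MIG 1g.24gb  Device  0: (UUID: MIG-...)".
MIG_LINE = re.compile(r"MIG\s+(\S+)\s+Device\s+(\d+):\s+\(UUID:\s+(MIG-[^)\s]+)\)")


def parse_mig_devices(listing: str) -> list[tuple[str, int, str]]:
    """Return (profile, device index, UUID) for every MIG device in `nvidia-smi -L` output."""
    return [(m.group(1), int(m.group(2)), m.group(3)) for m in MIG_LINE.finditer(listing)]


def list_mig_devices() -> list[tuple[str, int, str]]:
    """Run `nvidia-smi -L` on this machine and parse the MIG devices it reports."""
    listing = subprocess.run(
        ["nvidia-smi", "-L"], capture_output=True, text=True, check=True
    ).stdout
    return parse_mig_devices(listing)
```

Setting `CUDA_VISIBLE_DEVICES` to one of the returned UUIDs restricts a process to that single slice.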

GKE vs. EKS - A quick comparison

While MIG is an NVIDIA technology, how you consume it depends heavily on your cloud provider. This is where Google Kubernetes Engine (GKE) and Amazon Elastic Kubernetes Service (EKS) diverge significantly in philosophy.

The Core Difference: Mutable vs. Immutable Infrastructure

1. The Amazon EKS Approach (The “Operator” Pattern)

EKS treats MIG support as an add-on layer on top of a standard node. It follows a “Day 2 Operation” workflow.

- Philosophy: “Here is a standard GPU node. You (the user) install software to reconfigure it.”

- The Workflow:

  - Provision a standard GPU node (e.g., `p4d.24xlarge`).

  - Install the NVIDIA GPU Operator.

  - Apply a Kubernetes label to the node: `kubectl label node <node-name> nvidia.com/mig.config=all-1g.5gb`.

  - The Reboot Sequence: The GPU Operator detects the label, cordons the node and evicts its GPU workloads, interacts with the driver to partition the GPU, and reboots the node.

  - About 10 minutes later, the node rejoins the cluster with MIG enabled.
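The label-and-wait sequence can be scripted. A sketch using the official Kubernetes Python client (`read_node`, `patch_node`); the `nvidia.com/mig.config.state` label and its values are assumptions about how the GPU Operator's MIG manager reports progress, so verify them for your operator version, and `<node-name>` is a placeholder.

```python
import time
from typing import Optional

MIG_CONFIG_LABEL = "nvidia.com/mig.config"
# Assumption: the MIG manager reports progress in this label
# (values such as "pending", "rebooting", "success", "failed").
MIG_STATE_LABEL = "nvidia.com/mig.config.state"
TERMINAL_STATES = ("success", "failed")


def label_patch(profile: str) -> dict:
    """Patch body that sets the MIG profile label on a node."""
    return {"metadata": {"labels": {MIG_CONFIG_LABEL: profile}}}


def mig_state(labels: Optional[dict]) -> str:
    """Return the MIG manager state from a node's labels, or 'unknown'."""
    return (labels or {}).get(MIG_STATE_LABEL, "unknown")


def apply_and_wait(node: str, profile: str, timeout_s: int = 1800, poll_s: int = 15) -> str:
    """Label a node with a MIG profile and poll until the MIG manager finishes."""
    from kubernetes import client, config  # third-party: pip install kubernetes

    config.load_kube_config()
    v1 = client.CoreV1Api()
    labels = v1.read_node(node).metadata.labels or {}
    if labels.get(MIG_CONFIG_LABEL) == profile:
        return mig_state(labels)  # this profile was already requested
    v1.patch_node(node, label_patch(profile))

    # Ignore the stale state from the previous config until the manager
    # has visibly picked up the new label (left a terminal state).
    picked_up = False
    deadline = time.monotonic() + timeout_s
    while time.monotonic() < deadline:
        state = mig_state(v1.read_node(node).metadata.labels)
        if state not in TERMINAL_STATES:
            picked_up = True
        elif picked_up:
            return state
        time.sleep(poll_s)  # the node may be draining or rebooting meanwhile
    return "timeout"
```

For the A100 example above, the call would be `apply_and_wait("<node-name>", "all-1g.5gb")`.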

2. The Google GKE Approach (The “API” Pattern)

GKE treats MIG configuration as a first-class citizen of the Compute Engine API. It follows an “Immutable Infrastructure” workflow.

- Philosophy: “Tell us what hardware configuration you want, and we will boot it that way.”

- The Workflow:

  - Create a Node Pool with a single flag: `--accelerator=type=nvidia-rtx-pro-6000,count=1,gpu-partition-size=1g.24gb`.

  - GKE partitions the GPU as part of node provisioning, with no user-installed operator or user-driven reboot cycle.

  - The node joins the cluster ready to accept workloads.

Comparative Analysis Table

| Feature | Google GKE | Amazon EKS |
|---|---|---|
| Configuration | API-Driven: Defined in Node Pool config. | Label-Driven: Defined via K8s labels + GPU Operator. |
| Startup Time | Fast: Node boots ready-to-use. | Slow: Node boots -> Operator acts -> Reboot -> Ready. |
| Auto-scaling | Smooth: New nodes arrive ready for pods. | Laggy: New nodes flap (Up/Down) causing scheduling delays. |
| Complexity | Low: Managed by GKE control plane. | High: User manages the GPU Operator versioning. |
| Flexibility | Rigid: Defined at Node Pool creation. | Flexible: Can re-label nodes to change profiles (requires drain). |

GKE’s approach is especially valuable for production environments that rely on Cluster Autoscaler. In EKS, if traffic spikes and a new node is provisioned, that node spends its first 10 minutes or so performing the “reboot dance” to enable MIG. In GKE, the node arrives ready to serve traffic immediately.

MIG on GKE - Configuration Guide

Since GKE handles the heavy lifting, your configuration is simple.

1. Creating the Node Pool

You define the partition size at the infrastructure level.

```bash
gcloud container node-pools create mig-pool \
  --cluster=my-cluster \
  --machine-type=g4-standard-48 \
  --accelerator=type=nvidia-rtx-pro-6000,count=1,gpu-partition-size=1g.24gb \
  --num-nodes=3
```

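2. Scheduling Workloads

On GKE, each MIG slice is exposed to Kubernetes as one `nvidia.com/gpu` device, so your Pods select nodes by partition size and request a single GPU.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: inference-server
spec:
  nodeSelector:
    # Schedule only onto nodes whose GPUs are split into 1g.24gb slices
    cloud.google.com/gke-gpu-partition-size: 1g.24gb
  containers:
  - name: model
    image: my-model:latest
    resources:
      limits:
        # Request exactly one 24GB slice
        nvidia.com/gpu: 1
```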

Use Cases

1. The “Right-Sized” Inference Cluster

Scenario: You have a mix of models: a large heavy-duty LLM and several smaller BERT-based classifiers.

Strategy: Create two GKE Node Pools.

- pool-large: Uses 2g.48gb partitions for the LLMs.

- pool-small: Uses 1g.24gb partitions for the classifiers.

Result: You achieve nearly 100% bin-packing efficiency. The classifiers don’t hog a full GPU, and the LLMs have the memory guarantees they need.
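Because each pool is partitioned uniformly, sizing reduces to a ceiling division per pool. A small Python sketch with hypothetical replica counts (3 LLM replicas, 8 classifier replicas), comparing one GPU per replica against the two-pool MIG layout; slices per GPU follow the profile table.

```python
import math

# Slices per GPU for the profiles used in this scenario (4-slice layout).
SLICES_PER_GPU = {"1g.24gb": 4, "2g.48gb": 2, "4g.96gb": 1}


def gpus_needed(replicas_by_profile: dict[str, int]) -> int:
    """GPUs needed when each node pool is partitioned uniformly with one profile."""
    return sum(math.ceil(n / SLICES_PER_GPU[p]) for p, n in replicas_by_profile.items())


if __name__ == "__main__":
    workload = {"2g.48gb": 3, "1g.24gb": 8}  # hypothetical replica counts
    print("Without MIG:", sum(workload.values()), "GPUs")  # one GPU per replica: 11
    print("With MIG:", gpus_needed(workload), "GPUs")      # ceil(3/2) + ceil(8/4) = 4
```

Note the leftover capacity: the third LLM replica leaves one 2g.48gb slot idle, which is the gap between "nearly" and exactly 100%.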

2. CI/CD Pipeline Acceleration

Scenario: A nightly build runs 500 integration tests that need GPU access.

- Strategy: Use MIG to run 4 tests in parallel per physical GPU.

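- Result: With four tests sharing each physical GPU, you can either run the suite on a quarter of the GPUs (a 75% hardware cost reduction) or run four times as many tests concurrently on the same fleet. Since each test gets only a quarter of the SMs, this holds best for tests that fit in a 24GB slice and are not compute-bound. Because of MIG’s fault isolation, if Test A crashes with a GPU fault, Test B running in the adjacent partition is unaffected.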
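On a single CI runner VM (outside Kubernetes, where the device plugin does this assignment for you), the four-tests-per-GPU pattern can be sketched as a small dispatcher that hands each test a free MIG slice through `CUDA_VISIBLE_DEVICES`. The slice UUIDs are assumed to be the `MIG-...` values printed by `nvidia-smi -L`; the demo uses placeholder UUIDs and dummy commands.

```python
import os
import queue
import subprocess
import sys
from concurrent.futures import ThreadPoolExecutor


def run_on_slices(commands: list[list[str]], slice_uuids: list[str]) -> list[int]:
    """Run each command pinned to a free MIG slice; return exit codes in input order.

    At most len(slice_uuids) commands run at once. A slice goes back to the
    pool as soon as its command exits, so a failing test never blocks others.
    """
    free = queue.Queue()
    for uuid in slice_uuids:
        free.put(uuid)

    def run(cmd: list[str]) -> int:
        uuid = free.get()  # blocks until a slice is available
        try:
            env = dict(os.environ, CUDA_VISIBLE_DEVICES=uuid)
            return subprocess.run(cmd, env=env).returncode
        finally:
            free.put(uuid)

    with ThreadPoolExecutor(max_workers=len(slice_uuids)) as pool:
        return list(pool.map(run, commands))


if __name__ == "__main__":
    # Demo with placeholder UUIDs: each dummy "test" prints the slice it was given.
    demo_uuids = ["MIG-placeholder-0", "MIG-placeholder-1"]
    probe = [sys.executable, "-c", "import os; print(os.environ['CUDA_VISIBLE_DEVICES'])"]
    print(run_on_slices([probe] * 4, demo_uuids))
```

The queue, rather than a fixed test-to-slice mapping, keeps all four slices busy even when tests have uneven runtimes.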

3. Multi-Tenant SaaS

Scenario: You provide a platform where users can deploy their own custom models.

- Strategy: Assign each user a dedicated MIG partition.

- Result: You can guarantee performance SLAs. User A cannot cause “noisy neighbor” latency spikes for User B because they are physically separated by the memory controller and L2 cache partitioning.

Limitations and Best Practices

While MIG is powerful, it is not a magic bullet. It does have some constraints:

- No Dynamic Resizing: You cannot change a partition from 24GB to 48GB on the fly. You must drain the node and recreate the instances (or in GKE, move the workload to a different Node Pool).

- No P2P / NVLink: MIG instances cannot communicate with each other directly. You cannot run distributed training (using NCCL) across multiple MIG slices on the same GPU. If you need multi-GPU training, disable MIG.

- Profiling is Different: Standard tools like `nvidia-smi` report limited per-instance information in MIG mode (for example, GPU utilization is not reported per instance). Use the NVIDIA DCGM Exporter in Kubernetes to get accurate per-instance metrics for observability.

- Hardware Overhead: Enabling MIG reserves a small amount of memory for the management layer, so a 24GB slice effectively gives you somewhat less usable VRAM (around 23GB).
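A minimal sketch of per-instance memory reporting from the exporter's Prometheus text output. It assumes the exporter emits `DCGM_FI_DEV_FB_USED` (MiB) and labels MIG samples with `gpu`, `GPU_I_PROFILE`, and `GPU_I_ID`; check these names against your exporter's actual output. The regex parser is deliberately simple (no escaped quotes or braces in label values), so a full text-format parser would be sturdier.

```python
import re
from collections import defaultdict

# One labeled Prometheus sample: name{labels} value [timestamp]
SAMPLE = re.compile(r"^(\w+)\{([^}]*)\}\s+(\S+)(?:\s+\d+)?$")
LABEL = re.compile(r'(\w+)="([^"]*)"')


def fb_used_by_instance(metrics_text: str) -> dict[tuple[str, str, str], float]:
    """Sum DCGM_FI_DEV_FB_USED (MiB) per (gpu, GPU_I_PROFILE, GPU_I_ID).

    Samples without MIG labels (MIG disabled) get "none" for profile and ID.
    """
    usage: dict[tuple[str, str, str], float] = defaultdict(float)
    for line in metrics_text.splitlines():
        match = SAMPLE.match(line.strip())
        if not match or match.group(1) != "DCGM_FI_DEV_FB_USED":
            continue  # comments, other metrics, unlabeled samples
        labels = dict(LABEL.findall(match.group(2)))
        key = (
            labels.get("gpu", "?"),
            labels.get("GPU_I_PROFILE", "none"),
            labels.get("GPU_I_ID", "none"),
        )
        usage[key] += float(match.group(3))
    return dict(usage)
```

The `gpu` label is part of the key because instance IDs are only unique within one physical GPU.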

Conclusion

Fractional GPUs represent a paradigm shift in cloud economics. By moving from monolithic GPU allocation to granular, isolated partitioning, organizations can drastically increase utilization and reduce costs.

While both GKE and EKS support this technology, GKE’s implementation stands out for its operational simplicity. By abstracting the MIG configuration into the Compute Engine API, GKE eliminates the “reboot loops” and complex operator management required on other platforms, making it the premier choice for auto-scaling fractional GPU workloads.

For the RTX PRO 6000 Blackwell, the math is simple:

- Without MIG: One expensive GPU serves one small workload.

- With MIG: One expensive GPU serves four workloads with hardware-guaranteed isolation.

Next Steps: Audit your current GPU utilization. If your peak VRAM usage stays under about 23GB per pod, create a test GKE Node Pool with gpu-partition-size=1g.24gb and migrate your dev workloads. The cost savings will likely pay for the engineering time in the first month.
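Because a slice's memory is a hard limit, this audit sketch checks peak rather than average usage. It assumes each GPU currently runs a single workload, so whole-GPU `memory.used` approximates that workload's footprint; the ~23GB usable figure and the 90% headroom are assumptions.

```python
import subprocess

# Assumption: roughly 23GB of a 1g.24gb slice is usable (see the MIG overhead note).
SLICE_USABLE_MIB = 23 * 1024


def sample_used_mib(gpu_index: int = 0) -> int:
    """Read the current framebuffer usage of one GPU, in MiB, via nvidia-smi."""
    out = subprocess.run(
        ["nvidia-smi", "-i", str(gpu_index),
         "--query-gpu=memory.used", "--format=csv,noheader,nounits"],
        capture_output=True, text=True, check=True,
    ).stdout
    return int(out.strip().splitlines()[0])


def fits_1g_slice(samples_mib: list[int], headroom: float = 0.9) -> bool:
    """True if peak usage stays within `headroom` (default 90%) of a slice's usable memory."""
    return max(samples_mib) <= SLICE_USABLE_MIB * headroom
```

Collect `sample_used_mib()` readings over a representative window (for example, once per second through a full burst of requests) and pass the list to `fits_1g_slice`.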