The Compute-to-Cashflow Gap

The AI industry is shifting from celebrating large compute budgets to hunting for efficiency. Your competitive advantage is no longer your GPU count, but your cost-per-inference.

The AI industry is shifting from celebrating large compute budgets to hunting for efficiency. Your competitive advantage is no longer your GPU count, but your cost-per-inference.

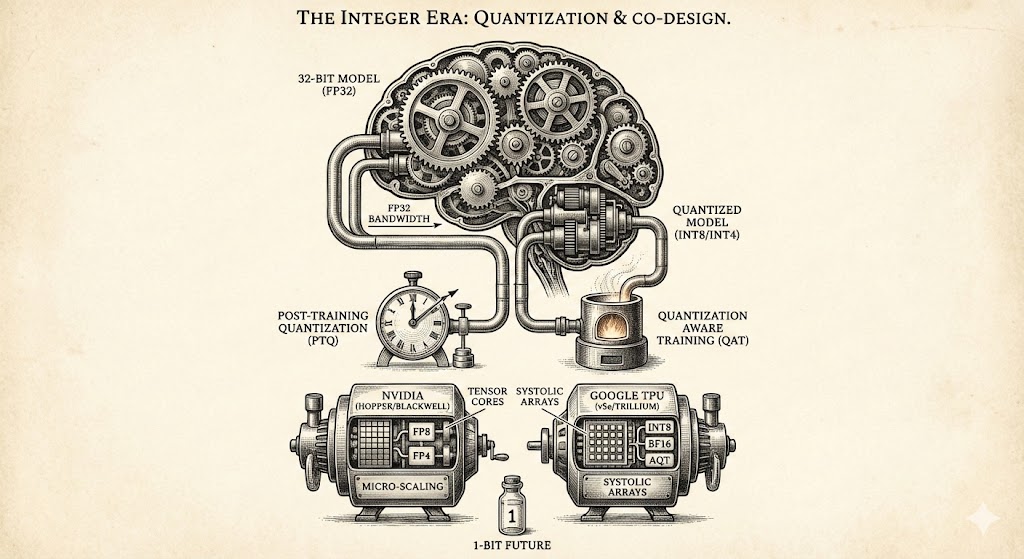

Explore how quantization and hardware co-design overcome memory bottlenecks, comparing NVIDIA and Google architectures while looking toward the 1-bit future of efficient AI model development.

As the AI industry moves from model training to large-scale deployment, the strategic bottleneck has shifted from parameter count to inference orchestration. This post explores how advanced techniques like RadixAttention, Chunked Prefills, and Deep Expert Parallelism are redefining the ROI of GPU clusters and creating a new standard for high-performance AI infrastructure.

The competitive advantage in AI has shifted from raw GPU volume to architectural efficiency, as the "Memory Wall" proves traditional frameworks waste runtime on "data plumbing." This article explains how the compiler-first JAX AI Stack and its "Automated Megakernels" are solving this scaling crisis and enabling breakthroughs for companies like xAI and Character.ai.

As hardware lead times and power constraints hit a ceiling, the competitive advantage in AI has shifted from chip volume to architectural efficiency. This article explores how JAX, Pallas, and Megakernels are redefining Model FLOPs Utilization (MFU) and providing the hardware-agnostic Universal Adapter needed to escape vendor lock-in.

Google Cloud’s G4 architecture delivers 168% higher throughput by maximizing PCIe Gen 5 performance. This deep dive examines the engineering stack driving these gains, from direct P2P communication and NUMA optimizations to Titanium offloads. Explore how G4 transforms standard connectivity into a high-speed fabric for demanding AI inference and training.