AI at Scale

Layered improvements with G4 / RTX PRO 6000

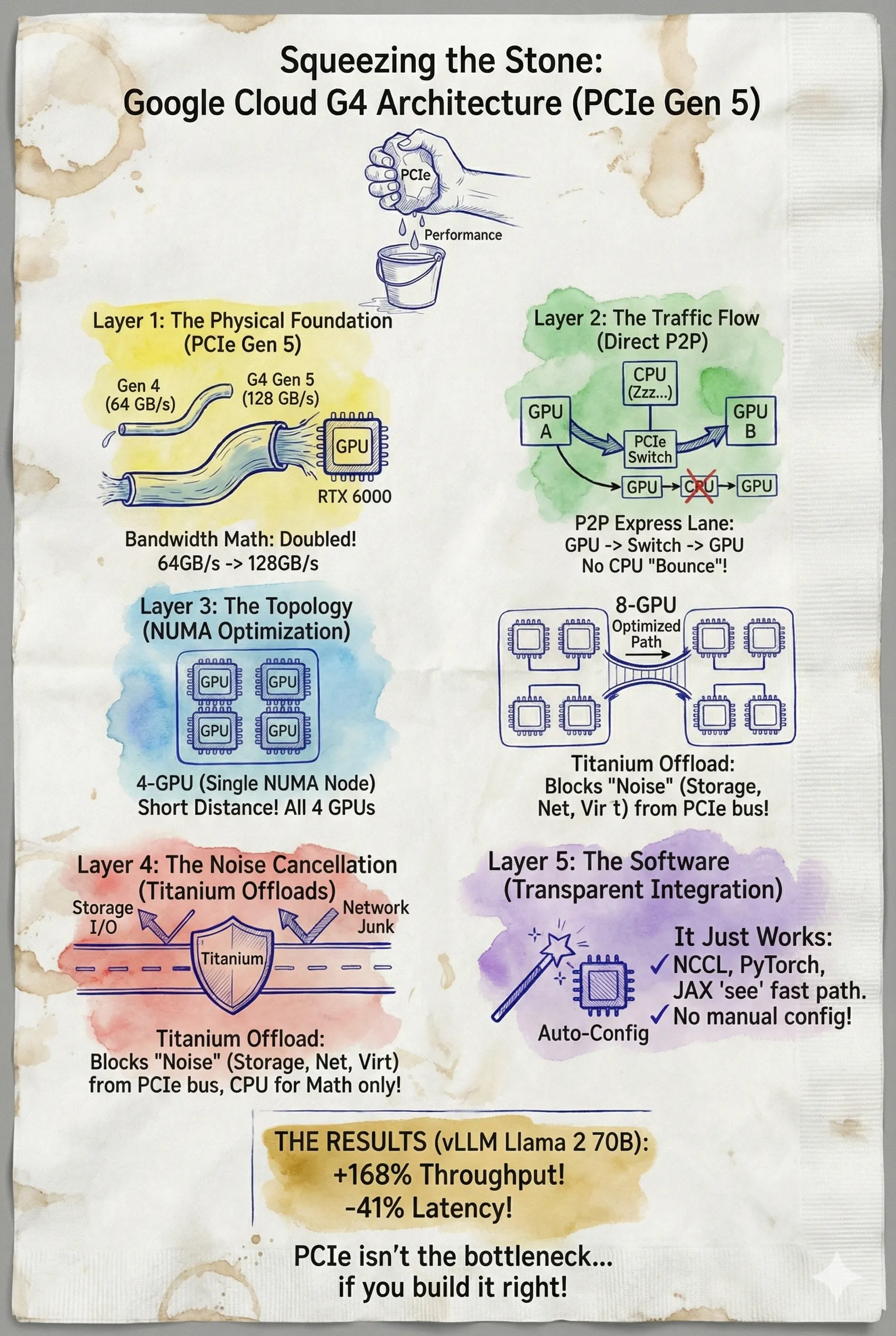

Google Cloud’s G4 architecture delivers 168% higher throughput than non-optimized PCIe configurations by maximizing PCIe Gen 5 performance. This deep dive examines the engineering stack behind these gains, from direct P2P communication and NUMA optimizations to Titanium offloads. It shows how G4 turns standard PCIe connectivity into a high-speed fabric for demanding AI inference and training.

Squeezing the Stone - How Google Cloud’s Layered Architecture Unlocks 168% Higher Throughput on PCIe Gen 5

In the world of high-performance computing and AI, bandwidth is oxygen. For years, the narrative has been simple: if you want top-tier multi-GPU performance for training or massive inference, you need proprietary interconnects like NVLink. Standard PCIe was viewed as the bottleneck—the slow lane.

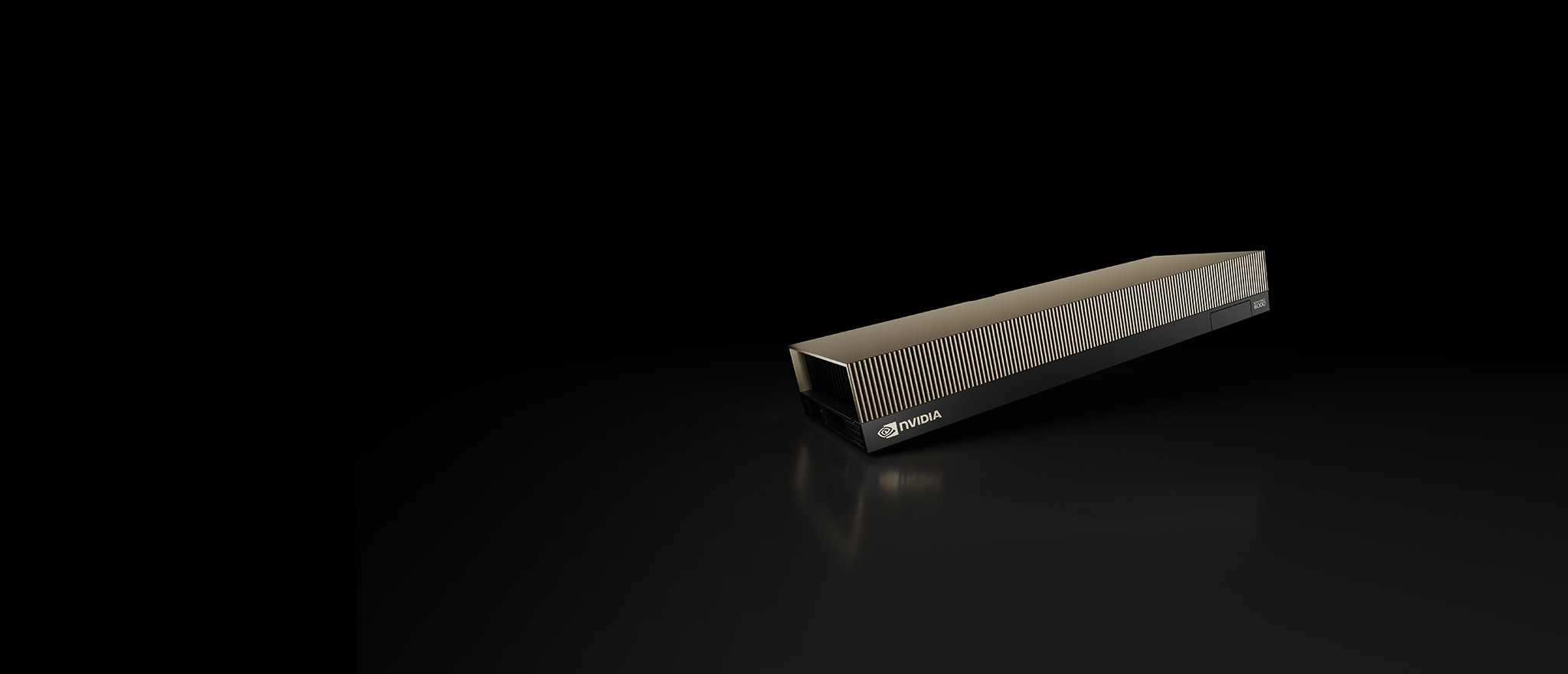

But with the release of the G4 instance family, powered by NVIDIA RTX PRO 6000 Blackwell Server Edition GPUs, Google Cloud has challenged that assumption. G4 achieves 168% higher throughput and 41% lower Inter-Token Latency (ITL) than baseline configurations. That demonstrates PCIe Gen 5 can be a powerhouse interface when it is architected correctly.

The secret isn’t a single feature. It is a layered engineering approach designed to extract every ounce of value from the PCIe Gen 5 link. This post dissects that stack, from the physical traces on the motherboard to the offload processors that keep infrastructure noise off the bus. Along the way, it shows how Google optimizes the RTX PRO 6000 environment for maximum performance.

Layer 1: The Physical Foundation – PCIe Gen 5

The first layer of the strategy is the adoption of the PCIe Generation 5 standard. Many cloud and on-premises systems still run on Gen 4. G4 moves to Gen 5, which doubles the per-lane signaling rate and therefore the raw theoretical bandwidth.

The Bandwidth Math

The jump from Gen 4 to Gen 5 is non-trivial. A PCIe Gen 4 x16 link runs at 16 GT/s per lane, or roughly 32 GB/s per direction (64 GB/s bidirectional). G4’s Gen 5 implementation doubles the rate to 32 GT/s per lane, for roughly 64 GB/s per direction (128 GB/s bidirectional).
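The bandwidth figures follow directly from the per-lane transfer rate. The Python sketch below reproduces that arithmetic under two assumptions:

- PCIe has used 128b/130b line encoding since Gen 3, and the sketch deducts that overhead.
- Packet headers and flow-control overhead are ignored, so real throughput is lower still.

The round 64 and 128 GB/s figures above are raw rates, before encoding overhead is deducted.

```python
# Per-lane transfer rates (GT/s) by PCIe generation.
GT_PER_S = {4: 16.0, 5: 32.0}

def pcie_bandwidth_gbs(gen: int, lanes: int = 16) -> tuple[float, float]:
    """Return (per-direction, bidirectional) data bandwidth in GB/s."""
    raw_gbit = GT_PER_S[gen] * lanes      # one bit per transfer per lane
    data_gbit = raw_gbit * 128 / 130      # remove 128b/130b line-encoding overhead
    per_direction = data_gbit / 8         # gigabits -> gigabytes
    return per_direction, 2 * per_direction

for gen in (4, 5):
    one_way, both_ways = pcie_bandwidth_gbs(gen)
    print(f"Gen {gen} x16: {one_way:.1f} GB/s per direction, {both_ways:.1f} GB/s bidirectional")
```

This prints about 31.5 / 63.0 GB/s for Gen 4 and 63.0 / 126.0 GB/s for Gen 5. The doubling is exact because only the per-lane rate changes between generations.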

For an 8-GPU server, this is the difference between a congested artery and a free-flowing highway. However, raw bandwidth is only potential energy. Running reliable Gen 5 speeds at cloud scale introduces significant signal-integrity challenges. It requires custom motherboard layouts, retimers, and rigorous thermal management for the PCIe switches. Google’s engineering ensures that the “pipe” is wide enough for the massive data exchanges of modern AI workloads. This matters specifically for the RTX PRO 6000. Unlike NVLink-equipped GPUs such as the H100, it relies on PCIe for GPU-to-GPU communication.

Layer 2: The Traffic Flow – Direct Peer-to-Peer (P2P)

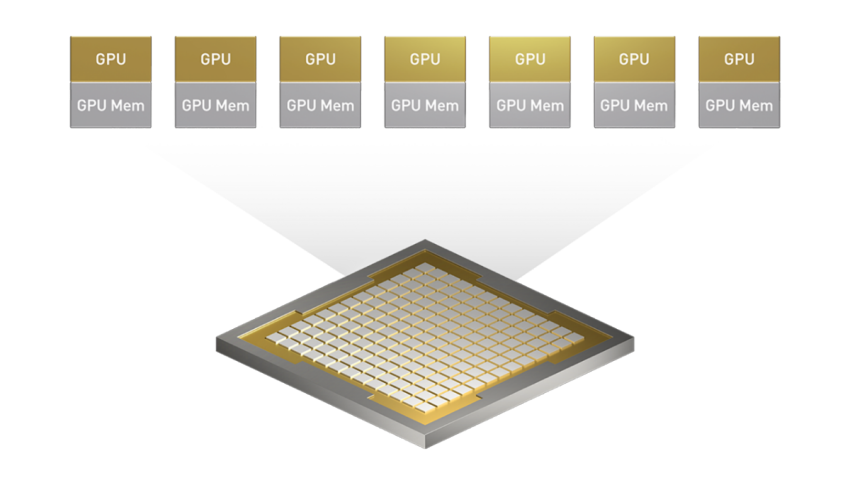

A wide road is useless if traffic is forced to stop at every intersection. In traditional multi-GPU architectures without P2P support, data transfer is a “store-and-forward” process. Data moves from GPU A through the CPU’s PCIe root complex into a staging buffer in system memory, then back through the root complex to GPU B.

This “bounce” creates three critical problems:

- Latency: The round trip through system RAM adds significant delay.

- CPU Contention: The CPU must orchestrate the move, stealing cycles from application logic.

- Bandwidth Waste: The transfer consumes system memory bandwidth, starving other subsystems.
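To make these three problems concrete, here is a back-of-the-envelope cost model of the bounce path versus a direct copy, in Python. It is a simplification under stated assumptions:

- Each DMA leg runs at full link speed.
- The staged path is not pipelined. Real drivers chunk and overlap staged copies, which hides some latency but not the system-memory traffic.
- The 256 MiB payload and the 63 GB/s link rate are illustrative, not measured.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class CopyCost:
    root_complex_crossings: int  # times the payload passes through the CPU's PCIe root complex
    dram_bytes: int              # system-memory traffic generated by the copy
    serial_dma_legs: int         # DMA transfers that run back to back (no pipelining)

def staged_copy(n_bytes: int) -> CopyCost:
    # Leg 1: GPU A writes n bytes into a host bounce buffer.
    # Leg 2: GPU B reads the same n bytes back out of it.
    return CopyCost(root_complex_crossings=2, dram_bytes=2 * n_bytes, serial_dma_legs=2)

def p2p_copy(n_bytes: int) -> CopyCost:
    # One leg: GPU A writes into GPU B's memory; the PCIe switch forwards the packets.
    return CopyCost(root_complex_crossings=0, dram_bytes=0, serial_dma_legs=1)

def min_copy_ms(cost: CopyCost, n_bytes: int, link_gbs: float) -> float:
    # Lower bound: each leg moves n_bytes at full link speed, one after another.
    return cost.serial_dma_legs * n_bytes / (link_gbs * 1e9) * 1e3

payload = 256 * 2**20  # hypothetical 256 MiB tensor
for name, model in (("staged", staged_copy), ("p2p", p2p_copy)):
    cost = model(payload)
    print(f"{name:>6}: {cost}, >= {min_copy_ms(cost, payload, 63.0):.2f} ms at 63 GB/s")
```

Even if pipelining closed the latency gap, the staged path still generates twice the payload size in system-memory traffic. That is the bandwidth waste described above.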

The P2P Solution

Google’s G4 instances implement a Direct P2P Fabric. With peer-to-peer routing enabled in the PCIe switches, traffic flows directly from GPU A → PCIe Switch → GPU B.

This architecture bypasses the CPU and system memory entirely for GPU-to-GPU communication. The result is a predictable, low-latency path that lets the RTX PRO 6000 GPUs saturate their PCIe Gen 5 links without CPU interference. This is critical for collective operations such as AllReduce and AllGather, which run thousands of times during distributed training or tensor-parallel inference.
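Why do collectives lean so hard on the P2P path? NCCL commonly uses a ring AllReduce for large messages. In a ring AllReduce, each GPU sends and receives 2(n-1)/n times the buffer size: n-1 reduce-scatter steps and n-1 all-gather steps, each moving 1/n of the buffer.

The Python sketch below turns that into a lower bound on completion time. The 64 MiB buffer and the two link rates (the per-direction Gen 4 and Gen 5 figures from Layer 1) are illustrative assumptions. The sketch also assumes every ring hop runs at full link speed. That only holds when hops are direct P2P transfers rather than bounces through host memory.

```python
def ring_allreduce_bytes_per_gpu(buffer_bytes: int, n_gpus: int) -> float:
    """Bytes each GPU sends (and receives) in a ring AllReduce."""
    # Reduce-scatter: n-1 steps; all-gather: n-1 steps; each step moves buffer/n bytes.
    return 2 * (n_gpus - 1) / n_gpus * buffer_bytes

def ring_allreduce_min_ms(buffer_bytes: int, n_gpus: int, link_gbs_per_dir: float) -> float:
    """Lower bound on AllReduce time if every ring hop runs at full link speed."""
    return ring_allreduce_bytes_per_gpu(buffer_bytes, n_gpus) / (link_gbs_per_dir * 1e9) * 1e3

buffer_bytes = 64 * 2**20  # hypothetical 64 MiB buffer
for label, gbs in (("Gen 4 x16", 31.5), ("Gen 5 x16", 63.0)):
    for n in (4, 8):
        print(f"{label}, {n} GPUs: >= {ring_allreduce_min_ms(buffer_bytes, n, gbs):.2f} ms")
```

Per-GPU traffic approaches twice the buffer size as n grows. Any slowdown on a single hop, such as one that bounces through host memory, therefore stalls the whole ring.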

Layer 3: The Topology – NUMA Optimization

The third layer addresses the physical distance and logical hierarchy of the components. In a high-density server, the “distance” a signal travels matters. Google employs a Non-Uniform Memory Access (NUMA) optimized topology to minimize these hops.

4-GPU vs. 8-GPU Topologies

G4 instances are architected to align with NUMA nodes physically:

- The 4-GPU (g4-standard-192) Configuration: All four GPUs reside on a single NUMA node. This guarantees that P2P transfers never cross the CPU interconnect (such as UPI or Infinity Fabric), resulting in the lowest possible latency.

- The 8-GPU (g4-standard-384) Configuration: While this spans two NUMA nodes, Google utilizes an optimized cross-node fabric. Instead of the traditional path (GPU → Memory → CPU Interconnect → Memory → GPU), G4 uses a direct path between PCIe complexes on different nodes.

This topology design yields a 2.04x to 2.19x improvement in collective operations compared to non-optimized layouts. It ensures the PCIe Gen 5 links aren’t left idle while signals traverse inefficient motherboard routes.
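On a Linux guest, you can check which NUMA node each GPU sits on by reading the standard sysfs attributes `vendor`, `class` and `numa_node` under `/sys/bus/pci/devices`. The Python sketch below groups NVIDIA GPUs (PCI vendor ID `0x10de`, display-controller class `0x03xxxx`) by NUMA node.

Keep three caveats in mind:

- In a VM, it reports the NUMA layout the hypervisor exposes.
- A value of `-1` means the kernel has no NUMA information for that device.
- If the guest topology mirrors the layout described above, a 4-GPU shape should show one group and an 8-GPU shape two.

```python
from collections import defaultdict
from pathlib import Path

NVIDIA_VENDOR_ID = "0x10de"
DISPLAY_CLASS_PREFIX = "0x03"  # PCI base class 0x03 = display controller (VGA or 3D)

def gpus_by_numa_node(sysfs_root: str = "/sys/bus/pci/devices") -> dict[int, list[str]]:
    """Group NVIDIA GPUs by the NUMA node the kernel reports for their PCI function."""
    root = Path(sysfs_root)
    if not root.is_dir():
        return {}  # not Linux, or sysfs not mounted
    groups: dict[int, list[str]] = defaultdict(list)
    for dev in sorted(root.iterdir()):
        try:
            vendor = (dev / "vendor").read_text().strip()
            pci_class = (dev / "class").read_text().strip()
            node = int((dev / "numa_node").read_text().strip())
        except (OSError, ValueError):
            continue  # entry lacks the expected attributes
        if vendor == NVIDIA_VENDOR_ID and pci_class.startswith(DISPLAY_CLASS_PREFIX):
            groups[node].append(dev.name)  # dev.name is the PCI address, e.g. 0000:01:00.0
    return dict(groups)

if __name__ == "__main__":
    for node, addrs in sorted(gpus_by_numa_node().items()):
        print(f"NUMA node {node}: {len(addrs)} GPU(s): {', '.join(addrs)}")
```

Filtering on the display class skips the other PCI functions NVIDIA boards can expose, such as audio controllers.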

Layer 4: The Noise Cancellation – Titanium Offloads

This is perhaps the most overlooked yet impactful layer. You cannot maximize PCIe throughput for GPUs if the bus is clogged with storage I/O, network packets, and virtualization overhead.

Google’s Titanium architecture acts as a noise-cancellation system for the server. It offloads infrastructure tasks to custom silicon, ensuring the host CPU and main PCIe lanes are dedicated to the customer’s workload.

Two-Tier Offload

- Tier 1 (On-Host): Titanium adapters handle virtualization, networking, and storage I/O physically on the host. This means the CPU isn’t burning cycles encapsulating packets or managing block devices.

- Tier 2 (Datacenter-Scale): Titanium extends into the datacenter, utilizing offload processors for distributed storage (Colossus) and network functions.

The Impact on GPU Performance

How does a storage offload help a GPU?

- Jitter Reduction: Because the CPU isn’t fielding network and storage interrupts, it can launch and synchronize GPU kernels with more consistent timing.

- Data Feeding: Titanium-enabled Hyperdisk storage (up to 500K IOPS) and 200 Gbps networking keep data flowing into GPU memory so the GPUs are not left waiting for input.
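For a rough sense of scale, the sketch below estimates the best-case time to move Llama 2 70B weights (the model used in the vLLM benchmark below). It compares the 200 Gbps network with each GPU’s PCIe Gen 5 link. The assumptions are:

- FP16/BF16 weights at 2 bytes per parameter.
- An 8-way shard across GPUs.
- Line-rate transfers with no protocol overhead.
- About 504 Gbps (roughly 63 GB/s) per direction for a Gen 5 x16 link.

```python
def transfer_seconds(n_bytes: float, gbit_per_s: float) -> float:
    # Best case: the link runs at line rate with no protocol overhead.
    return n_bytes * 8 / (gbit_per_s * 1e9)

params = 70e9            # Llama 2 70B
bytes_per_param = 2      # assumption: FP16/BF16 weights (quantized weights are smaller)
weights = params * bytes_per_param  # ~140 GB

print(f"weights: {weights / 1e9:.0f} GB")
print(f"over a 200 Gbps network: >= {transfer_seconds(weights, 200):.1f} s")
# Assumption: each of 8 GPUs loads a 1/8 shard over its own Gen 5 x16 link (~504 Gbps per direction).
print(f"per-GPU shard over PCIe Gen 5: >= {transfer_seconds(weights / 8, 504):.2f} s")
```

Under these assumptions, the path into the server, not each GPU’s own PCIe link, sets the floor on load time. That is why keeping the storage and network path at line rate matters for GPU utilization.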

By cleaning the “noise” off the bus, Titanium ensures that the 128 GB/s PCIe Gen 5 link is used almost exclusively for the high-value tensor data moving between GPUs.

Layer 5: The Software – Transparent Integration

The final layer is usability. A complex hardware architecture is useless if it requires custom drivers or rewritten code. G4’s P2P fabric is software-defined but application-transparent.

Auto-Configuration

When a G4 VM boots, the hypervisor dynamically creates the PCIe routes and isolates them for the specific tenant. The NVIDIA drivers and NCCL (NVIDIA Collective Communications Library) automatically detect this P2P capability.

There is no need to manually configure PCIe switch registers or topology files. Frameworks like PyTorch, JAX, and TensorFlow simply “see” the fast path and utilize it immediately. This integration ensures that the hardware innovations (Gen 5 + P2P) translate directly to application performance without developer friction.
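Because detection is automatic, it is worth checking what the driver actually sees. `nvidia-smi topo -m` prints a GPU-to-GPU matrix whose cells name the path type: `PIX`, `PXB`, `PHB`, `NODE`, `SYS`, or `NV#` for NVLink.

The Python sketch below parses that matrix and flags pairs labeled `SYS`, which the nvidia-smi legend defines as traversing the SMP interconnect between NUMA nodes. Two caveats apply:

- The sample text describes a made-up two-socket server, not output captured from a G4 VM.
- In a VM, the labels reflect the topology the hypervisor exposes.

```python
from collections import Counter

def parse_topo_matrix(text: str) -> dict[tuple[str, str], str]:
    """Map (gpu_a, gpu_b) -> path type from `nvidia-smi topo -m` style output."""
    rows = [line.split() for line in text.splitlines() if line.strip()]
    # The header is the first row whose first token is a GPU column name.
    header_idx = next(i for i, toks in enumerate(rows) if toks[0].startswith("GPU"))
    gpus = [tok for tok in rows[header_idx] if tok.startswith("GPU")]
    links: dict[tuple[str, str], str] = {}
    for toks in rows[header_idx + 1:]:
        if toks[0] not in gpus:
            continue  # skip NIC rows, legend lines, etc.
        for peer, kind in zip(gpus, toks[1:1 + len(gpus)]):
            if peer != toks[0]:
                links[(toks[0], peer)] = kind
    return links

def cross_numa_pairs(links: dict[tuple[str, str], str]) -> list[tuple[str, ...]]:
    """Unordered GPU pairs whose path crosses the inter-NUMA (SMP) interconnect."""
    return sorted({tuple(sorted(pair)) for pair, kind in links.items() if kind == "SYS"})

# Hypothetical two-socket, 4-GPU output for illustration only (not from a G4 VM).
SAMPLE = """
        GPU0    GPU1    GPU2    GPU3    CPU Affinity    NUMA Affinity
GPU0     X      PIX     SYS     SYS     0-47            0
GPU1    PIX      X      SYS     SYS     0-47            0
GPU2    SYS     SYS      X      PIX     48-95           1
GPU3    SYS     SYS     PIX      X      48-95           1
"""

links = parse_topo_matrix(SAMPLE)
print(Counter(links.values()))
print(cross_numa_pairs(links))
```

On a real machine, you would pass it `subprocess.run(["nvidia-smi", "topo", "-m"], capture_output=True, text=True).stdout` instead of the sample.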

Real-World Results: vLLM and Beyond

The convergence of these five layers—Physical (Gen 5), Routing (P2P), Topology (NUMA), Offload (Titanium), and Software (NCCL)—delivers measurable impact.

In benchmarks running vLLM (a popular high-throughput LLM serving framework) on Llama 2 70B models, G4 instances demonstrated:

- 168% higher throughput compared to non-optimized PCIe configurations.

- 41% reduction in Inter-Token Latency (ITL).

For organizations deploying generative AI, this translates to faster inference, lower cost per token, and the ability to run larger models on cost-effective RTX PRO hardware rather than waiting for scarce H100 availability.
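Percentage figures like these are easy to misread. The short Python sketch below converts them into ratios against the baseline. It then converts them into relative cost per token, assuming a fixed hourly price so that cost per token scales with 1/throughput. Only the 168% and 41% figures come from the benchmark; the rest is arithmetic.

```python
# Benchmark figures quoted above, expressed as ratios to the baseline.
throughput_ratio = 1 + 1.68  # "168% higher throughput" -> 2.68x baseline tokens/s
itl_ratio = 1 - 0.41         # "41% lower ITL" -> 0.59x baseline time between tokens

# Per-stream decode speed is the inverse of inter-token latency.
per_stream_speedup = 1 / itl_ratio

# Assumption: the instance price per hour is unchanged, so cost per token ~ 1 / throughput.
cost_per_token_ratio = 1 / throughput_ratio

print(f"aggregate throughput: {throughput_ratio:.2f}x baseline")
print(f"per-stream tokens/s:  {per_stream_speedup:.2f}x baseline")
print(f"cost per token:       {cost_per_token_ratio:.2f}x baseline "
      f"({1 - cost_per_token_ratio:.0%} lower)")
```

"168% higher" means 2.68x the baseline, not 1.68x. Under the fixed-price assumption, that corresponds to roughly 63% lower cost per token.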

Summary

Google Cloud’s approach to the G4 instance shows that PCIe is not a legacy bottleneck but an optimization frontier. G4 layers PCIe Gen 5 speed, direct P2P routing, NUMA-aware topology, and Titanium offloads. Together, these turn the RTX PRO 6000 into a high-performance engine capable of punching far above its weight class.

For AI architects and ML engineers, G4 offers a compelling lesson: the interconnect is more than just a cable; it is a full-stack architecture. And when that stack is optimized from silicon to software, the results speak for themselves.