LLMs are Terrible Backends (Unless You Force JSON)

Non-determinism is a bug, not a feature. We explore how to whip the model into compliance using Enforcers, Pydantic, and Constrained Generation.

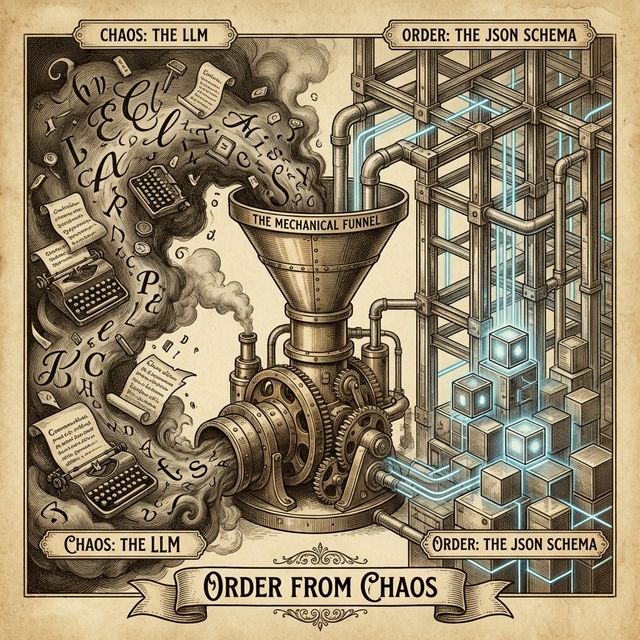

Chat is reactive. Hooks are proactive. We explore how to use Gemini CLI Hooks to inject context and enforce security before the model thinks.

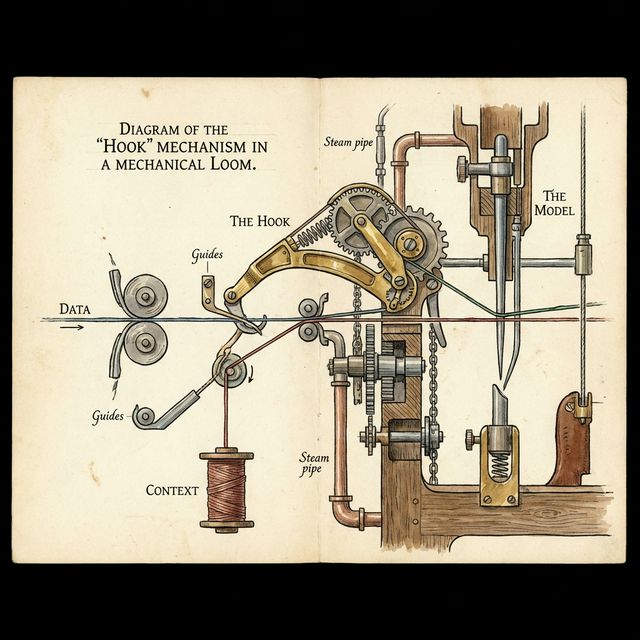

Recompilation is the silent killer of training throughput. If you see 'Jit' in your profiler, you are losing money. We dive into XLA internals.

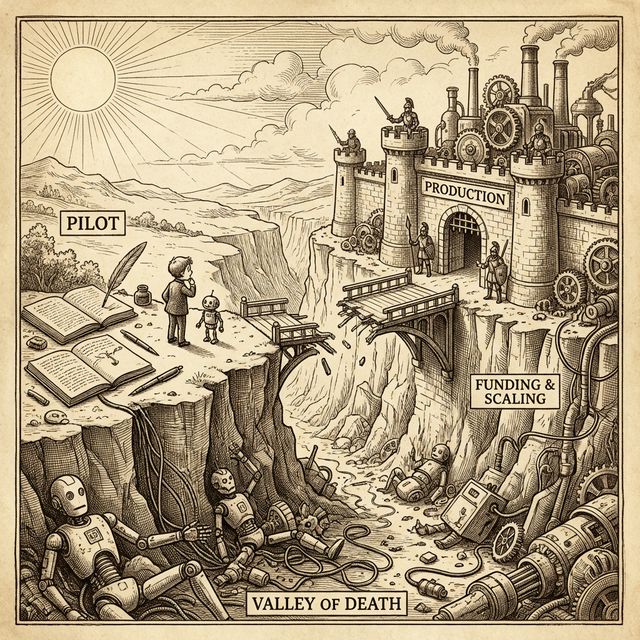

Most enterprise AI fails not because of the model, but because of the 'Last Mile' integration costs. We break down the hidden latency budget of RAG.

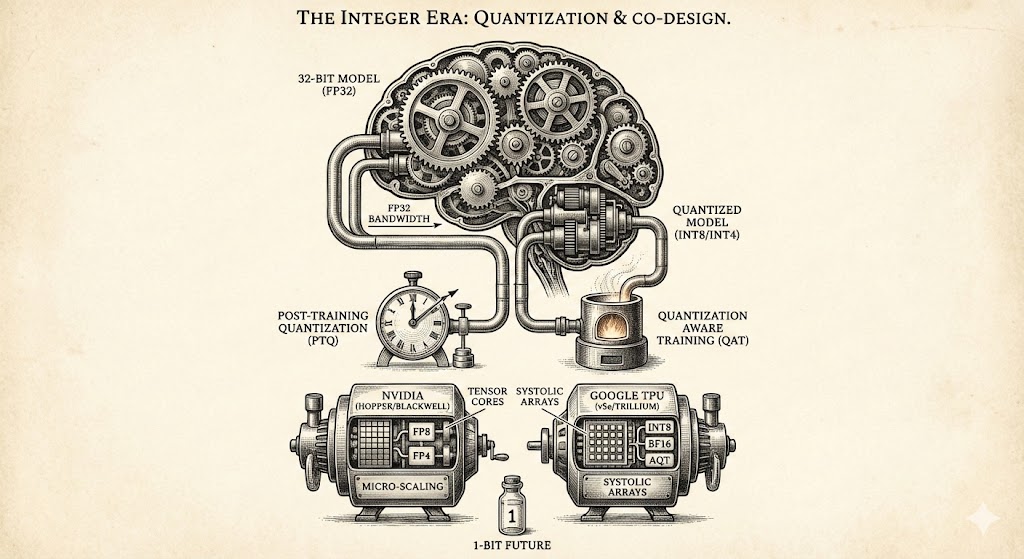

The AI industry is shifting from celebrating large compute budgets to hunting for efficiency. Your competitive advantage is no longer your GPU count, but your cost-per-inference.

Explore how quantization and hardware co-design overcome memory bottlenecks, comparing NVIDIA and Google architectures while looking toward the 1-bit future of efficient AI model development.