
Network Jitter: The Silent Killer of Training

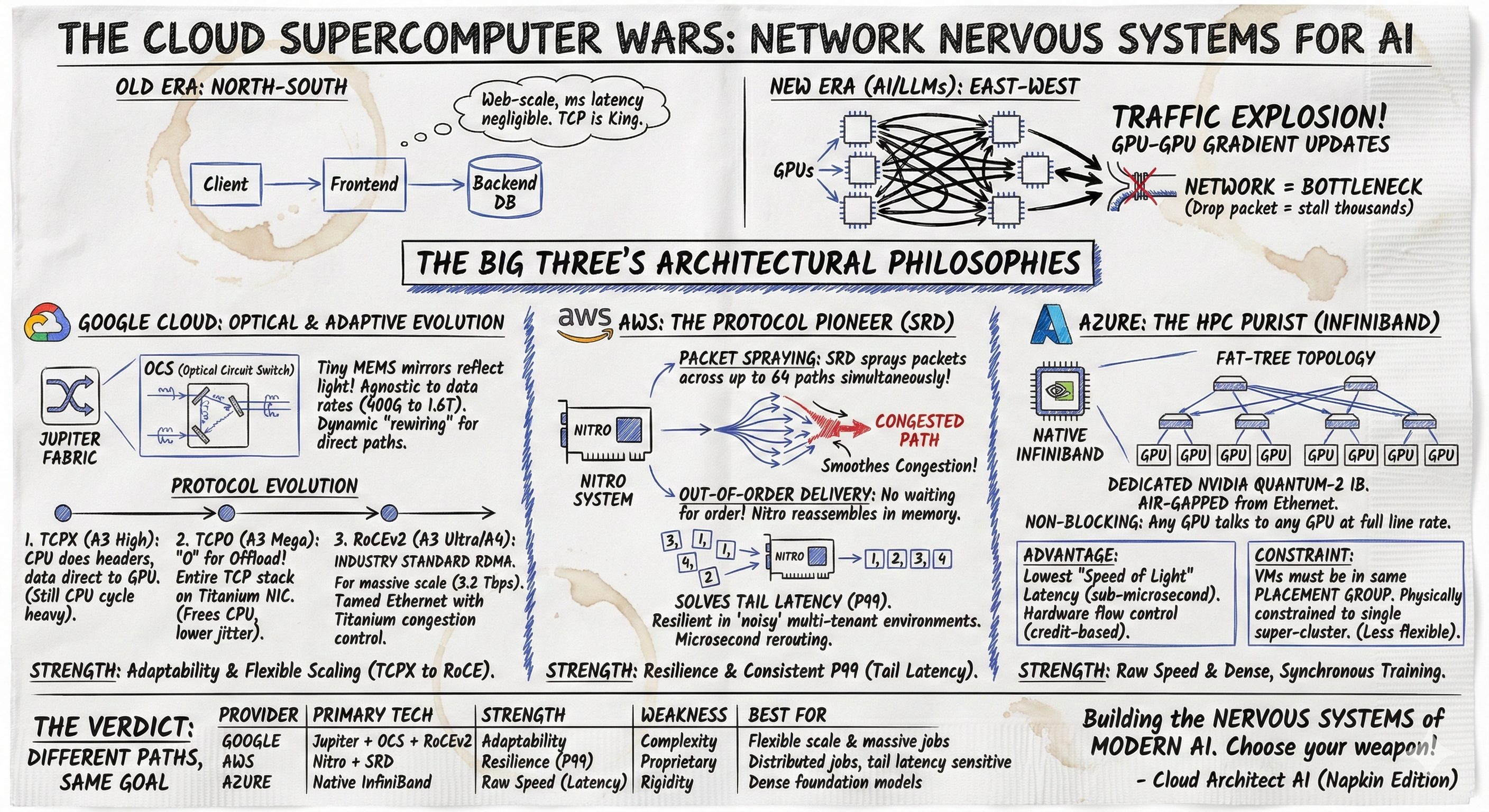

In distributed training, the slowest packet determines the speed of the cluster. We benchmark GCP's 'Circuit Switched' Jupiter fabric against AWS's 'Multipath' SRD protocol.

The Hardware Is the Same, the Experience Isn’t

You can rent an H100 SXM5 from AWS (P5 instances) or from Google Cloud (A3 Mega). Both use the exact same NVIDIA H100 silicon, and both advertise 3.2 Tbps of interconnect bandwidth. Yet when running large-scale All-Reduce collectives, their performance profiles can differ significantly.

The difference lies not in the GPU, but in the Fabric Architecture.

AWS EFA: Multipath (SRD)

AWS uses the Elastic Fabric Adapter (EFA), which runs on their custom Nitro chips. The secret sauce of EFA is the Scalable Reliable Datagram (SRD) protocol.

- How it works: SRD is not TCP. It intentionally sprays packets across multiple network paths (ECMP) to maximize throughput. It handles out-of-order delivery in hardware (on the Nitro card).

- The Philosophy: “Packet Loss is okay; Congestion is fatal.” SRD reacts to congestion by rapidly re-routing.

- The Trade-off: While phenomenal for aggregate bandwidth, this dynamic re-routing introduces jitter. Packets taking different paths arrive at different times, and the receiver cannot hand over a message until its last packet lands. In a strict ring-topology collective, this can cause micro-stalls.
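
To make the trade-off concrete, here is a toy simulation of packet spraying. It is a sketch under loud assumptions, not a model of SRD itself: each packet takes a fixed base time plus an exponentially distributed delay, and the 10 us base and 2 us mean jitter are made-up numbers. The point it illustrates is only that a message is done when its slowest packet arrives, so the tail grows with the number of paths.

```python
import random
import statistics


def completion_time_us(num_paths, rng, base_us=10.0, mean_jitter_us=2.0):
    """Return when the LAST of num_paths sprayed packets arrives.

    Each packet takes base_us plus an exponentially distributed delay.
    Both numbers are illustrative assumptions, not measurements.
    """
    arrivals = [base_us + rng.expovariate(1.0 / mean_jitter_us)
                for _ in range(num_paths)]
    return max(arrivals)


def p50_p99(num_paths, trials=20000, seed=0):
    """Estimate median and P99 message completion time for a path count."""
    rng = random.Random(seed)
    samples = [completion_time_us(num_paths, rng) for _ in range(trials)]
    cuts = statistics.quantiles(samples, n=100)  # 99 percentile cut points
    return cuts[49], cuts[98]


if __name__ == "__main__":
    for paths in (1, 4, 16, 64):
        p50, p99 = p50_p99(paths)
        print(f"paths={paths:3d}  p50={p50:6.2f} us  p99={p99:6.2f} us")
```

The model deliberately ignores that spraying also shrinks each packet's share of the message, which is where the bandwidth win comes from. It isolates the variance cost only.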

GCP Fastrack (Jupiter): Leveraging Optics

Google Cloud operates its Jupiter data center network differently. For AI workloads on A3 Mega, it uses Optical Circuit Switching (OCS) and a centralized Software-Defined Network (SDN).

- How it works: Instead of spraying packets, the Jupiter control plane pre-calculates the topology. It essentially reserves a “virtual circuit” for your flow.

- The Philosophy: “Deterministic Latency.”

- The Trade-off: Setup time is higher (managed by the SDN), but once the flow is established, the variance (jitter) is near zero. It’s like a train on a dedicated track versus a Ferrari in traffic.

Impact on Collectives (NCCL)

In a Ring All-Reduce:

- AWS (High Variance): You get incredible peak speeds, but the P99 tail latency can suffer due to SRD’s re-ordering buffer, which forces the GPU to wait for straggler packets.

- GCP (Low Variance): The peak speed might be slightly lower due to SDN overhead, but the P99 is rock stable.
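
The effect on a ring can be sketched with a simplified lockstep model: a Ring All-Reduce over N ranks takes 2(N-1) steps, and in this model each step lasts as long as its slowest link. The two "fabrics" below use hypothetical numbers chosen only to mirror the description above (one with a faster base but high jitter, one with a slower base but low jitter); they are not measurements of either cloud.

```python
import random
import statistics


def ring_allreduce_us(num_ranks, base_us, mean_jitter_us, rng):
    """Toy model: 2*(N-1) lockstep steps, each as slow as its slowest link."""
    total = 0.0
    for _ in range(2 * (num_ranks - 1)):
        link_times = []
        for _ in range(num_ranks):
            # Exponential jitter is an assumption made for illustration.
            jitter = (rng.expovariate(1.0 / mean_jitter_us)
                      if mean_jitter_us > 0 else 0.0)
            link_times.append(base_us + jitter)
        total += max(link_times)  # every rank waits for the straggler
    return total


def p99_us(num_ranks, base_us, mean_jitter_us, trials=5000, seed=0):
    """Estimate P99 All-Reduce time over many simulated iterations."""
    rng = random.Random(seed)
    samples = [ring_allreduce_us(num_ranks, base_us, mean_jitter_us, rng)
               for _ in range(trials)]
    return statistics.quantiles(samples, n=100)[98]


if __name__ == "__main__":
    # Hypothetical fabrics: (base step time, mean jitter), both in microseconds.
    print("high variance:", round(p99_us(8, base_us=10.0, mean_jitter_us=2.0), 1))
    print("low variance: ", round(p99_us(8, base_us=11.0, mean_jitter_us=0.1), 1))
```

Because the per-step maximum is taken across all links and then summed over every step, a modest per-link jitter compounds across the ring, while a slightly slower but steady link does not.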

Which fabric wins depends on the communication pattern:

- AWS Wins: For Mixture of Experts (MoE) models, which require massive All-to-All communication. The multipath nature of SRD absorbs this chaotic, bursty traffic well.

- GCP Wins: For dense models (Llama 3) with predictable All-Reduce patterns.

The Boot Time Gap: GKE vs EKS

There is another operational difference worth measuring: image pull times.

- GCP (GKE Image Streaming): We can cold-boot a 400GB container image on a new H100 node in under 30 seconds. GKE streams the data blocks on-demand.

- AWS (ECR): In our experience, pulling that same image to a P5 node often takes 8-10 minutes.

If you are using Spot Instances (Preemptible), this matters, because every preemption means pulling the image again on a replacement node.

- On GCP, you can recover from a preemption in < 1 minute.

- On AWS, you lose 10 minutes just re-pulling the Docker image.
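
A quick back-of-the-envelope calculation shows how this adds up. The 1-minute and 10-minute recovery times are the figures above; the preemption count (6 per day) and the eight idle GPUs (one 8-GPU node) are hypothetical inputs you should replace with your own.

```python
def idle_gpu_hours(preemptions, recovery_minutes, gpus_idle=8):
    """GPU-hours spent waiting for recovery instead of training."""
    return preemptions * recovery_minutes * gpus_idle / 60.0


if __name__ == "__main__":
    preemptions_per_day = 6  # hypothetical; depends on region and time of day
    fast = idle_gpu_hours(preemptions_per_day, recovery_minutes=1)
    slow = idle_gpu_hours(preemptions_per_day, recovery_minutes=10)
    print(f"1-minute recovery:  {fast:.1f} idle GPU-hours per day")
    print(f"10-minute recovery: {slow:.1f} idle GPU-hours per day")
```

In a synchronous training job the other nodes typically stall while one recovers, so setting `gpus_idle` to the size of the whole job gives a more realistic figure than a single node.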

Conclusion

Takeaway: Don’t just benchmark TFLOPS. Run nccl-tests and track P99 latency, not just the average. That’s your real speed limit.
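
As a starting point, here is a minimal sketch of the summary worth computing. It assumes you have already collected per-iteration collective timings in microseconds by whatever means your harness allows; how to extract them is left out, and the sample data below is invented to show how a mean can hide stragglers.

```python
import math


def nearest_rank_percentile(samples, pct):
    """Nearest-rank percentile: smallest value with at least pct% of samples at or below it."""
    if not samples:
        raise ValueError("need at least one sample")
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def summarize(samples_us):
    """Return mean, median, and P99 of per-iteration timings."""
    return {
        "mean": sum(samples_us) / len(samples_us),
        "p50": nearest_rank_percentile(samples_us, 50),
        "p99": nearest_rank_percentile(samples_us, 99),
    }


if __name__ == "__main__":
    # Invented data: 98 fast iterations and 2 stragglers.
    timings_us = [100.0] * 98 + [1000.0] * 2
    print(summarize(timings_us))
```

On this invented data the mean moves only slightly while the P99 is ten times the median, which is the gap a synchronous collective actually feels.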