
Visualizing All-Reduce Bandwidth: The Physics of Distributed Training

When your model doesn't fit on one GPU, you're no longer just learning to code; you're learning physics. We dive deep into the primitives of NCCL, distributed collectives, and why the interconnect is the computer.

“The rack is the new server.”

It’s a phrase that sounds like marketing until the first time you try to shard a 70B-parameter model across multiple nodes. At that moment, the “speed of light” stops being a metaphor and starts being your primary bottleneck.

If your model is larger than 80 GB, the memory capacity of a standard H100, you aren’t just a software engineer anymore. You are a network architect. You have moved from the world of processing into the world of pathing.

Let’s look at the physics of how your model actually “learns” when it lives in more than one place at once.

What is a “Collective”?

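In distributed training, the simplest pattern is called Data Parallelism. Every GPU in your cluster gets a full copy of the model weights, but each one “reads” a different slice of the training data. Sharded variants split the weights across GPUs as well, but they lean on the same kind of communication.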

After every “step” of training, each GPU calculates how it thinks the weights should change (the gradients). But because they all saw different data, they all have different ideas. To continue, they must agree on a single, averaged update.

This communication is called a Collective Operation.
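To make the "agree on a single, averaged update" step concrete, here is a minimal single-process sketch. It is not how NCCL does it; `all_reduce_mean` is a hypothetical helper, and plain Python lists stand in for per-GPU gradient tensors.

```python
def all_reduce_mean(per_gpu_grads):
    """Average gradients element-wise and give every GPU the same result.

    per_gpu_grads: one list of floats per simulated GPU (hypothetical data).
    """
    n = len(per_gpu_grads)
    # zip(*...) walks the same gradient position across all GPUs at once.
    averaged = [sum(vals) / n for vals in zip(*per_gpu_grads)]
    # Every GPU walks away with its own copy of the identical averaged update.
    return [list(averaged) for _ in range(n)]


# Four simulated GPUs, each with a different opinion about three weights.
grads = [
    [1.0, 2.0, 3.0],
    [3.0, 2.0, 1.0],
    [2.0, 2.0, 2.0],
    [2.0, 6.0, 2.0],
]
synced = all_reduce_mean(grads)
print(synced[0])  # [2.0, 3.0, 2.0]
```

The interesting part of a real collective is not the arithmetic, which is trivial, but how the values travel between devices.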

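The most critical of these is the All-Reduce. Every GPU contributes its gradients, the values are summed across all GPUs, and everyone ends up with the exact same result. If you have 8 GPUs and a 50 GB model, there are 400 GB of gradients to combine on every step. Even a bandwidth-efficient ring all-reduce has each GPU send and receive roughly 2(N-1)/N times its payload, which is about 87.5 GB per GPU here, and all of it has to cross the wires before the next step can begin.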
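The traffic arithmetic is worth doing by hand once. The sketch below assumes a ring-style all-reduce (covered in the NCCL section), where each GPU sends and receives 2(N-1)/N times the payload. The function name is hypothetical, and the payload is taken to be the full 50 GB from the example above.

```python
GB = 10**9  # decimal gigabyte, matching how link speeds are usually quoted


def ring_all_reduce_bytes_per_gpu(payload_bytes, num_gpus):
    """Bytes each GPU sends (and also receives) in a ring all-reduce."""
    return 2 * (num_gpus - 1) / num_gpus * payload_bytes


payload = 50 * GB  # gradient payload per GPU (assumed equal to model size)
n = 8

per_gpu = ring_all_reduce_bytes_per_gpu(payload, n)
print(per_gpu / GB)      # 87.5  -> sent by each GPU
print(n * per_gpu / GB)  # 700.0 -> total bytes crossing all links
print(n * payload / GB)  # 400.0 -> raw gradient data being combined
```

Note that the per-GPU figure barely changes as the cluster grows: 2(N-1)/N approaches 2, so the ring scales well in bandwidth terms even when it does not in latency terms.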

NCCL: The Librarian of the Cluster

To manage this, we use the NVIDIA Collective Communication Library (NCCL). NCCL is the software layer that understands the topology of your GPUs. It knows which ones are in the same tray, which ones are across the rack, and which ones are on a different floor of the data center.

NCCL uses specific algorithms to move this data without choking the network:

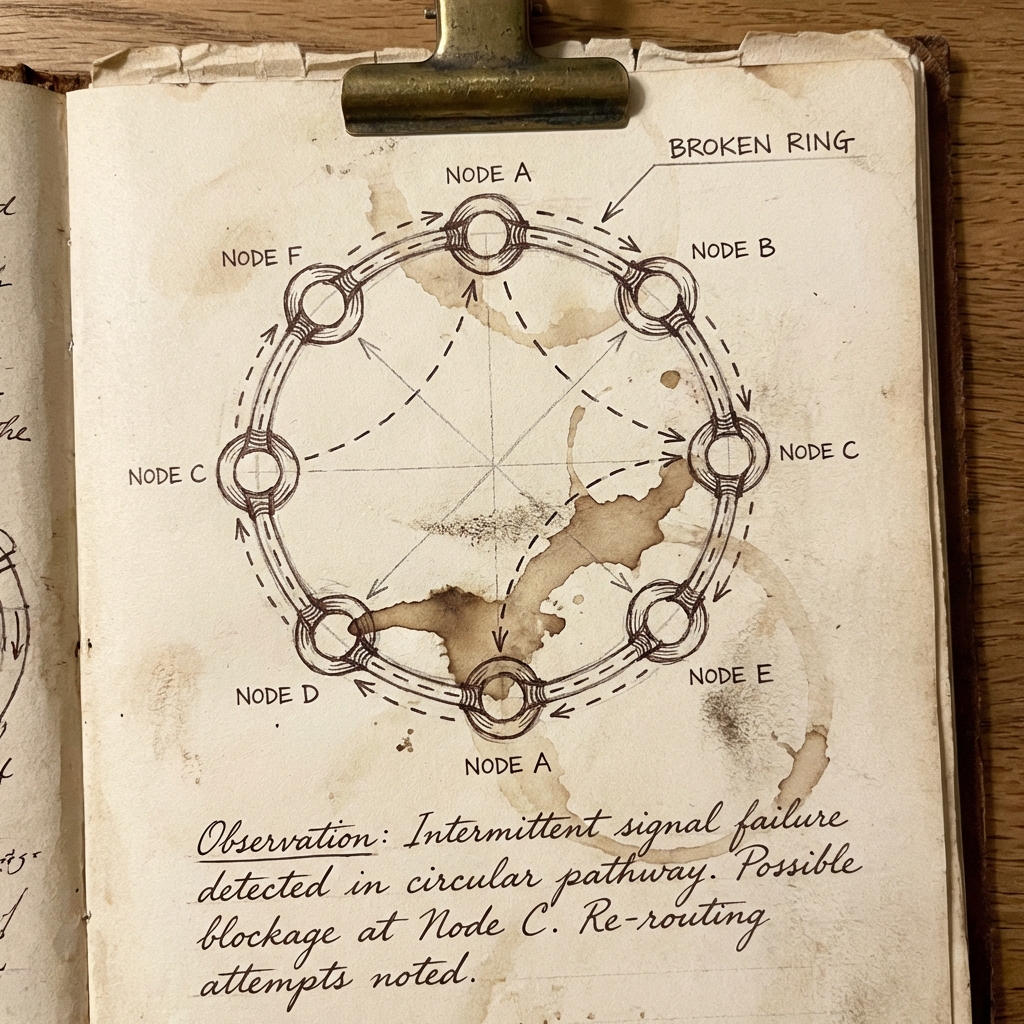

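- The Ring Algorithm: GPUs are arranged in a logical circle. Each GPU sends a chunk of its data to its neighbor on one side and receives a chunk from its neighbor on the other. It takes two “laps” around the ring for everyone to have the result: one lap to sum the chunks, one lap to circulate the totals. It’s highly efficient for bandwidth, but the number of steps grows with the number of GPUs, and the whole ring moves at the pace of its slowest link.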

- The Tree Algorithm: For larger clusters, NCCL builds a hierarchy. Data flows up toward a root node, gets summed along the way, and flows back down. This lowers latency because the number of “hops” grows with the depth of the tree rather than with the total number of GPUs.
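The two “laps” of the ring are easier to see in code. What follows is a single-process simulation of a ring all-reduce (sum), not NCCL's actual implementation: lists stand in for GPU buffers, `ring_all_reduce` is a hypothetical name, and the sketch assumes the buffer divides evenly into one chunk per GPU.

```python
def ring_all_reduce(buffers):
    """Simulate a ring all-reduce (sum) over equal-length per-GPU lists."""
    n = len(buffers)
    size = len(buffers[0])
    assert size % n == 0, "sketch assumes one equal-sized chunk per GPU"
    chunk = size // n
    data = [list(b) for b in buffers]  # work on copies

    def span(c):
        # Index range covered by chunk number c.
        return range(c * chunk, (c + 1) * chunk)

    # Lap 1 (reduce-scatter): partial sums travel around the ring.
    for step in range(n - 1):
        # Snapshot all outgoing chunks first so the sends are "simultaneous".
        sends = [(r, (r - step) % n) for r in range(n)]
        payloads = [[data[r][i] for i in span(c)] for r, c in sends]
        for (r, c), payload in zip(sends, payloads):
            dst = (r + 1) % n
            for i, v in zip(span(c), payload):
                data[dst][i] += v  # neighbor accumulates the chunk

    # Now GPU r holds the fully summed chunk (r + 1) % n.
    # Lap 2 (all-gather): finished chunks travel around the ring.
    for step in range(n - 1):
        sends = [(r, (r + 1 - step) % n) for r in range(n)]
        payloads = [[data[r][i] for i in span(c)] for r, c in sends]
        for (r, c), payload in zip(sends, payloads):
            dst = (r + 1) % n
            for i, v in zip(span(c), payload):
                data[dst][i] = v  # neighbor overwrites with the final value

    return data


gpus = [
    [1, 2, 3, 4],
    [10, 20, 30, 40],
    [100, 200, 300, 400],
    [1000, 2000, 3000, 4000],
]
result = ring_all_reduce(gpus)
print(result[0])  # [1111, 2222, 3333, 4444]
```

Each lap takes N-1 steps, so the ring needs 2(N-1) sequential steps in total. That linear growth is the latency cost a tree is meant to avoid.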

The Infrastructure Reality: NVLink vs. InfiniBand

The “Physics” of your cluster changes depending on how the GPUs are connected:

- Intra-node (NVLink): Inside a single machine, GPUs talk over NVLink. It’s incredibly fast (up to 900 GB/s on H100). This is local traffic.

- Inter-node (InfiniBand/RoCE): When you move between machines, you leave the “local” world and enter the “network” world. Even with the fastest InfiniBand at 400 Gbps (about 50 GB/s per link), the bandwidth is more than an order of magnitude lower than NVLink.

This drop-off in speed is where “bubbles” appear. A bubble is a period where your $30,000 GPU is doing nothing but waiting for a packet to arrive from its neighbor across the rack.
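A back-of-the-envelope estimate shows how large the drop-off is. This sketch reuses the ring traffic formula and treats the quoted peak figures as usable one-way bandwidth, which is optimistic for both links. The 10 GB payload and the function name are hypothetical, and latency and compute overlap are ignored.

```python
GB = 10**9

NVLINK_BPS = 900 * GB          # quoted H100 NVLink peak, bytes per second
INFINIBAND_BPS = 400 * GB / 8  # 400 Gbps converted to bytes per second


def ring_all_reduce_seconds(payload_bytes, num_gpus, link_bytes_per_sec):
    """Bandwidth-only time estimate for a ring all-reduce."""
    traffic = 2 * (num_gpus - 1) / num_gpus * payload_bytes
    return traffic / link_bytes_per_sec


payload = 10 * GB  # hypothetical gradient payload per GPU
nvlink_s = ring_all_reduce_seconds(payload, 8, NVLINK_BPS)
ib_s = ring_all_reduce_seconds(payload, 8, INFINIBAND_BPS)

print(f"NVLink:     {nvlink_s:.3f} s")         # NVLink:     0.019 s
print(f"InfiniBand: {ib_s:.2f} s")             # InfiniBand: 0.35 s
print(f"Slowdown:   {ib_s / nvlink_s:.0f}x")   # Slowdown:   18x
```

Under these assumptions, the same collective that finishes in about 20 ms inside a node takes about 350 ms across nodes. Any part of that time not hidden behind computation shows up as a bubble.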

Modern Advancements: Symmetric Memory & SHARP

In the latest generation of distributed systems (NCCL 2.27), we are seeing breakthroughs that attempt to hide these physical limits:

- Symmetric Memory: Historically, GPU A had to ask its CPU to send data to GPU B. With Symmetric Memory, participating GPUs register buffers at identical virtual addresses, so GPU A can write directly into GPU B’s memory as if it were local. This removes the “CPU Handshake” and can reduce synchronization latency by up to 9x.

- SHARP (In-Network Computing): In a Blackwell rack, the computation of the All-Reduce actually happens inside the switch. The GPUs don’t sum the math; the network switch sums the math while the packets are in transit.
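The idea of in-network reduction can be illustrated with a toy model. The sketch below is not the SHARP API and does not describe how any real switch is implemented: `ToySwitch` is a hypothetical class that sums buffers as they "arrive" and hands the same total back to every port.

```python
class ToySwitch:
    """Hypothetical stand-in for a switch that reduces data in flight."""

    def __init__(self, num_ports):
        self.num_ports = num_ports
        self.partial = None  # running element-wise sum
        self.seen = 0        # how many GPUs have sent their buffer

    def receive(self, payload):
        # Fold each arriving buffer into the running sum.
        if self.partial is None:
            self.partial = list(payload)
        else:
            self.partial = [a + b for a, b in zip(self.partial, payload)]
        self.seen += 1

    def broadcast(self):
        # Only release the result once every port has contributed.
        assert self.seen == self.num_ports, "still waiting on some GPUs"
        return [list(self.partial) for _ in range(self.num_ports)]


gpu_buffers = [[1, 2], [3, 4], [5, 6], [7, 8]]
switch = ToySwitch(num_ports=len(gpu_buffers))
for buf in gpu_buffers:
    switch.receive(buf)   # each GPU sends its buffer exactly once
reduced = switch.broadcast()
print(reduced[0])  # [16, 20]
```

In this model each GPU sends its buffer once and receives the result once, compared with roughly twice the payload in each direction for a ring. That is the intuition for why moving the sum into the fabric helps.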

Conclusion: The Path is the Product

As we push toward trillion-parameter models, the bottleneck of AI has shifted from the chip to the fabric.

The core insight for any practitioner in this era is this: Optimizing distributed training is about pathing, not just processing.

You cannot simply throw more GPUs at a problem if your network topology cannot support the collective communication they require. Your job isn’t just to write the code that runs on the GPU; it’s to ensure that the path between those GPUs is as short, as wide, and as deterministic as possible.

In the world of distributed AI, the interconnect is the computer.