Performance over Portability? Running Local LLMs on the Asus ProArt 13

Can a thin-and-light PC handle production-level LLMs? We benchmark the Asus ProArt 13 with RTX 4060, the Ryzen AI 9 NPU, and the 8GB VRAM bottleneck.

Autonomous agents are prone to infinite reasoning loops and 'democratic' indecision. We explore the Supervisor pattern in LangGraph, MCP, and why orchestration beats choreography.

When your model doesn't fit on one GPU, you're no longer just learning to code; you're learning physics. We dive deep into the primitives of NCCL, distributed collectives, and why the interconnect is the computer.

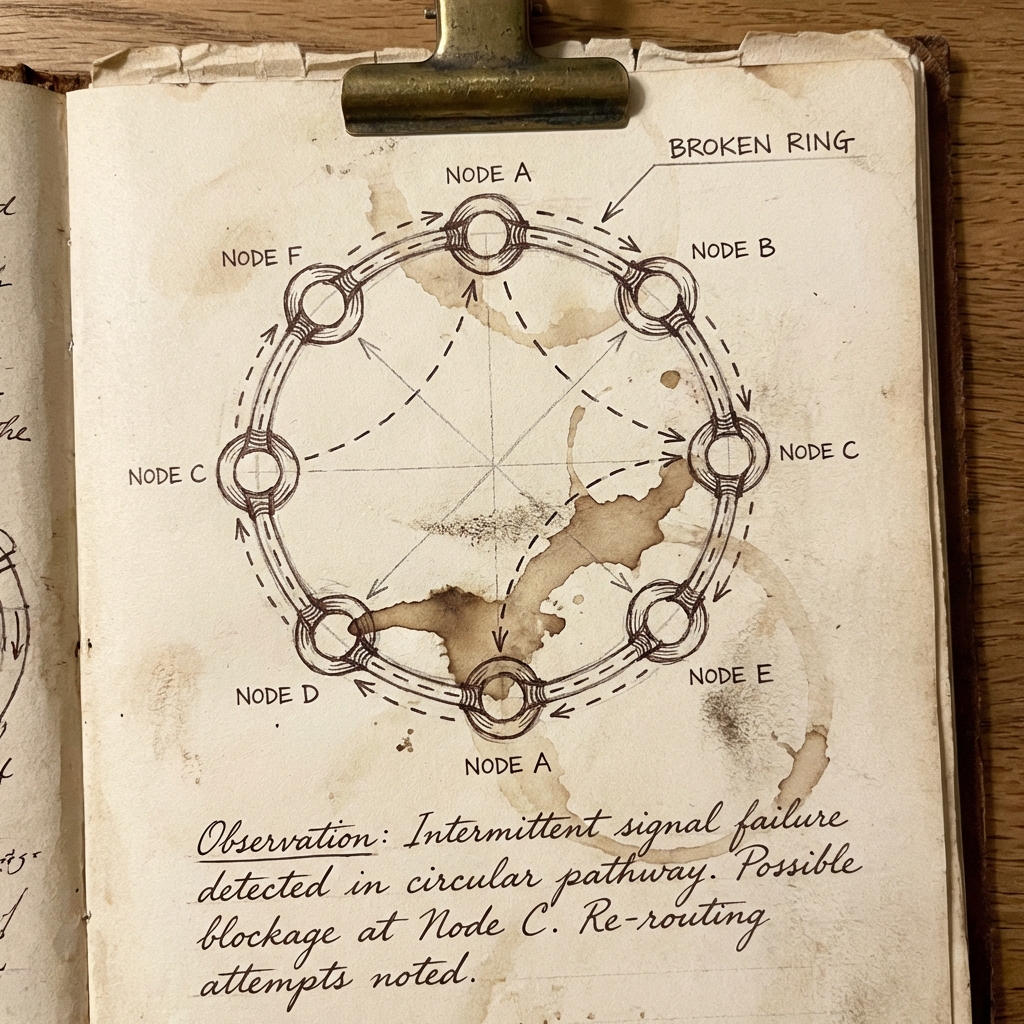

When standard tools report a healthy cluster but your training is stalled, the culprit is often a broken ring topology. We decode specific NCCL algorithms and debugging flags.

FP4 isn't just 'lower precision': it requires a fundamental rethink of how activation outliers are handled. We dive into the bit-level implementation of NVFP4, Micro-Tensor Scaling, and the new Tensor Memory hierarchy.