
Debugging NCCL Ring Failures

When standard tools report a healthy cluster but your training run is stalled, the culprit is often a broken ring topology. We decode NCCL's algorithms and the debugging flags that reveal what they are doing.

The Mystery of the Stalled Rank

This week, we hit a wall. A 128-GPU training run was hanging indefinitely. No crash, no core dump. nvidia-smi showed 100% GPU utilization, but the loss curve was flat. The GPUs looked maxed out, yet the machines were not exchanging data: the GPUs were busy-waiting inside NCCL kernels for data that never arrived. Standard monitoring tools (Prometheus node exporter) showed “Healthy” because the OS was up and the GPU was accepting kernels.

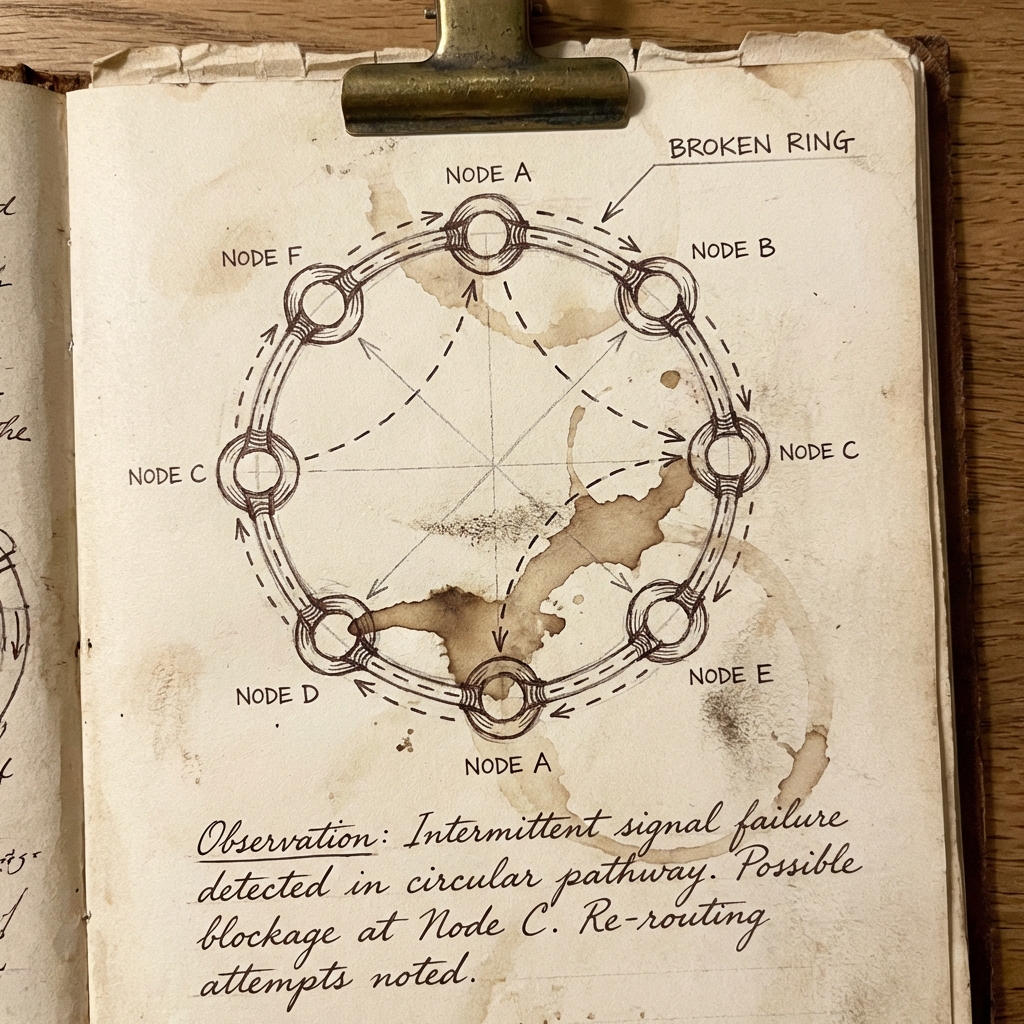

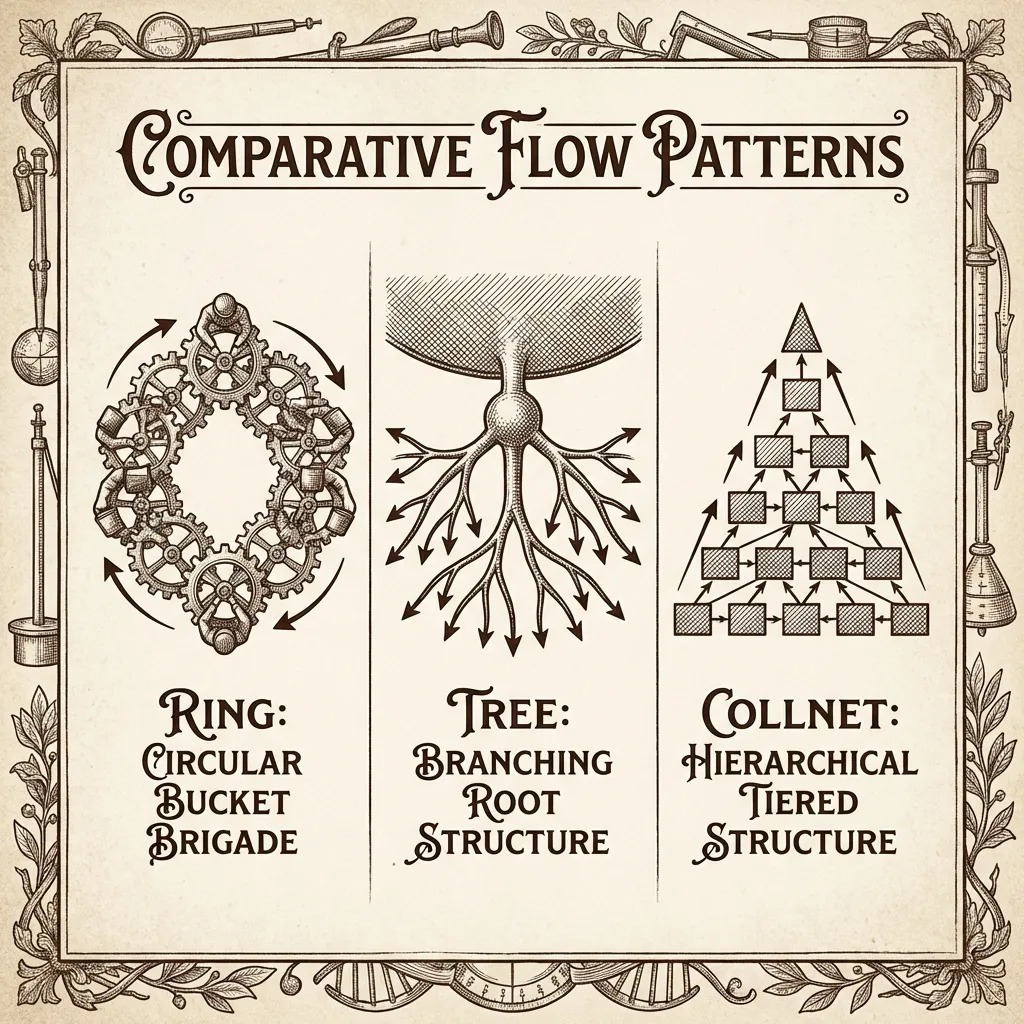

Understanding NCCL Algorithms: Ring vs. Tree

To debug this, you have to understand how the NVIDIA Collective Communications Library (NCCL) moves data. NCCL picks an algorithm for each collective based on message size and topology. The three classic ones are:

- Ring (NCCL_ALGO=Ring): Bandwidth-optimal. The data moves around a circular bucket brigade (0 -> 1 -> 2 -> … -> N-1 -> 0). Great for large tensors.
  - Failure Mode: If one link is slow, the entire ring drops to that link's speed. Latency scales linearly with N.

- Tree (NCCL_ALGO=Tree): Latency-optimal. Uses a double binary tree structure, so latency grows with log N rather than N. Great for small messages (like metadata or small gradients).
  - Failure Mode: Congestion at the root/leaves.

- CollNet (NCCL_ALGO=CollNetDirect or CollNetChain in recent releases): Hierarchical. Aggregates within the node first, then performs the inter-node step with in-network reduction (for example, SHARP-capable switches) through a CollNet plugin.
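To make the Ring vs. Tree trade-off concrete, here is a simplified alpha-beta cost model. It is a sketch under stated assumptions, not NCCL's actual tuning model: alpha is a fixed per-step latency, each ring hop has its own bandwidth, and pipelining and NCCL's protocols (LL, LL128, Simple) are ignored. The function names are our own.

```python
import math
from typing import Sequence


def ring_allreduce_time(size_bytes: float, hop_bw: Sequence[float], alpha_s: float) -> float:
    """Simplified ring all-reduce cost (reduce-scatter followed by all-gather).

    hop_bw[i] is the bandwidth in bytes/s of the hop from rank i to rank i+1
    (the last entry is the wrap-around hop back to rank 0). Each of the
    2 * (N - 1) steps sends one size/N chunk over every hop at the same time,
    so a step cannot finish before the slowest hop does.
    """
    n = len(hop_bw)
    steps = 2 * (n - 1)
    return steps * (alpha_s + (size_bytes / n) / min(hop_bw))


def tree_latency_term(n: int, alpha_s: float) -> float:
    """Latency-only term for a tree all-reduce: reduce up the tree, then broadcast down."""
    return 2 * math.ceil(math.log2(n)) * alpha_s


# 16 ranks, 1.6 GB message, latency ignored: one degraded hop slows the whole ring.
healthy = ring_allreduce_time(1.6e9, [25e9] * 16, alpha_s=0.0)
degraded = ring_allreduce_time(1.6e9, [25e9] * 15 + [5e9], alpha_s=0.0)
print(f"healthy ring: {healthy:.3f} s, one 5 GB/s hop: {degraded:.3f} s")

# 128 ranks, tiny message: the ring pays 2(N-1) latencies, a tree about 2*log2(N).
print(ring_allreduce_time(0, [25e9] * 128, alpha_s=1.0), tree_latency_term(128, alpha_s=1.0))
```

In this toy model the healthy ring takes 0.12 s and the ring with a single 5 GB/s hop takes 0.6 s, even though 15 of its 16 hops are untouched. For tiny messages the ring pays 254 latency units at 128 ranks versus 14 for the tree.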

In our case, the stalled job was a large model using Ring All-Reduce.

Diagnostic Steps: Some magic flags

1. The NCCL_DEBUG=INFO Firehose

We first enabled the logs. Warning: this generates gigabytes of text.

```bash
export NCCL_DEBUG=INFO
export NCCL_DEBUG_SUBSYS=NET,COLL,GRAPH
```

What do these subsystem flags mean?

- NET: Logs activity from the network plugin (InfiniBand/RoCE/EFA). Look here for completion errors (Got completion with error) or connection timeouts.
- COLL: Logs details about the collective operations (AllReduce, Broadcast). It tells you what the rank was trying to do when it hung.
- GRAPH: Dumps the topology search logic. It shows the rings and trees NCCL built from the detected topology; the per-call choice between Ring and Tree is logged under the separate TUNING subsystem.
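Because the INFO firehose runs to gigabytes, it helps to stream the logs through a filter instead of reading them. The sketch below is hypothetical tooling. It assumes NCCL's usual line prefix (host:pid:tid [device] NCCL LEVEL message) and matches the "Got completion with error N" wording as well as a "Got completion from peer ... with error N" variant seen in some releases; check both regexes against your NCCL version before trusting the counts.

```python
import re
from collections import Counter
from typing import Iterable

# Assumed NCCL log prefix: "<host>:<pid>:<tid> [<cuda dev>] NCCL <LEVEL> <message>".
LINE = re.compile(r"^(?P<host>[^:\s]+):\d+:\d+ \[\d+\] NCCL (?:INFO|WARN) (?P<msg>.*)$")
# "Got completion with error 12" or "Got completion from peer <addr> with error 12".
COMPLETION_ERROR = re.compile(r"Got completion (?:from peer \S+ )?with error (?P<code>\d+)")


def count_completion_errors(lines: Iterable[str]) -> Counter:
    """Stream log lines and count completion errors per (host, error code)."""
    counts: Counter = Counter()
    for line in lines:
        m = LINE.match(line)
        if m is None:
            continue  # not an NCCL debug line
        err = COMPLETION_ERROR.search(m.group("msg"))
        if err is not None:
            counts[(m.group("host"), int(err.group("code")))] += 1
    return counts
```

Passing an open file object (for example `count_completion_errors(open("job.log"))`) keeps memory flat, since lines are read one at a time. A host that dominates the counts is a good first candidate for the pairwise tests described later.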

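We saw the dreaded NET/IB : Got completion with error 12 message repeated throughout the logs. Error 12 is IBV_WC_RETRY_EXC_ERR (transport retry counter exceeded): the InfiniBand/RoCE NIC sent data, received no acknowledgment from the remote side within its timeout, and gave up after exhausting its retries.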
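Error 12 only fires after the NIC has waited out its ACK timeout on every retry, which is why congestion shows up first as a long stall. NCCL exposes the two knobs involved as NCCL_IB_TIMEOUT (the exponent of the InfiniBand local ACK timeout, 4.096 µs × 2^value) and NCCL_IB_RETRY_CNT. The helper below is a rough sketch: it assumes each retry waits one full timeout and ignores finer transport details, and since defaults differ across NCCL releases, plug in the values from your own environment.

```python
def ib_ack_timeout_s(timeout_exp: int) -> float:
    """InfiniBand local ACK timeout in seconds: 4.096 us * 2**timeout_exp.

    In the IB spec an exponent of 0 means "wait forever", so only 1..31 is accepted here.
    """
    if not 1 <= timeout_exp <= 31:
        raise ValueError("timeout exponent must be in 1..31")
    return 4.096e-6 * 2 ** timeout_exp


def approx_time_to_error_12_s(timeout_exp: int, retry_cnt: int) -> float:
    """Rough time before IBV_WC_RETRY_EXC_ERR: the first attempt plus retry_cnt retries,
    each assumed to wait one full ACK timeout."""
    return ib_ack_timeout_s(timeout_exp) * (retry_cnt + 1)


for exp in (14, 18, 20):
    print(f"NCCL_IB_TIMEOUT={exp}: ack timeout {ib_ack_timeout_s(exp):.3f} s, "
          f"~{approx_time_to_error_12_s(exp, retry_cnt=7):.1f} s to error 12 with 7 retries")
```

At an exponent of 18, for example, each attempt waits about 1.07 s and seven retries add up to roughly 8.6 s. Raising the timeout can turn errors into longer stalls; it does not remove the congestion behind them.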

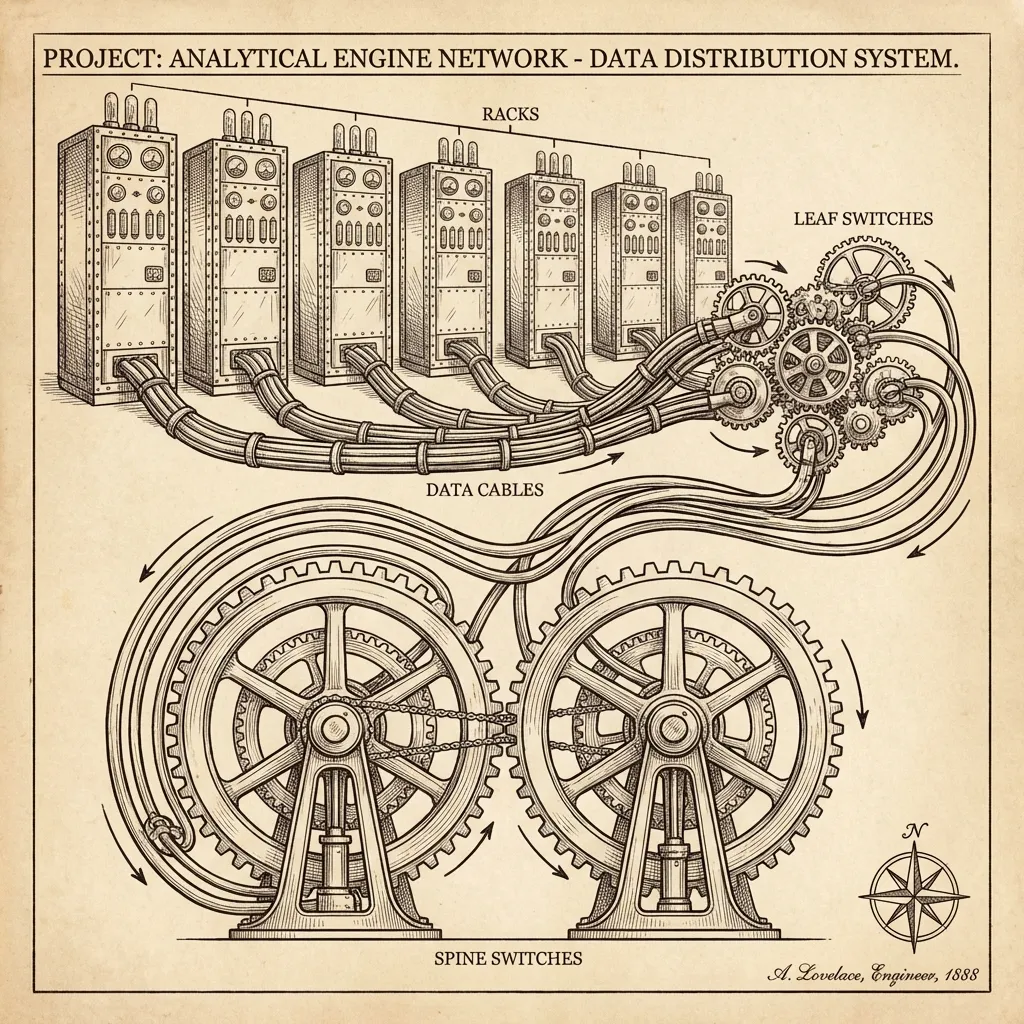

2. Isolate the Topology

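We ran nvidia-smi topo -m to verify the physical layout. That command only shows the topology inside a node (GPU, NIC, PCIe, and NVLink paths), so the real discovery came from mapping the job's nodes to racks: for this specific job, the scheduler had allocated nodes in non-contiguous racks.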

The Concept:

- Rack: A physical cabinet holding ~32 GPUs. Communication inside a rack is ultra-fast via a Top-of-Rack (Leaf) switch.

- Leaf Switch: The “local road.” Connects the servers within the same rack. Low latency (~2µs).

- Spine Switch: The “highway.” Connects multiple racks together. Higher latency (~10µs) and often oversubscribed: a leaf's uplinks to the spine carry less bandwidth than the servers below it can generate, so cross-rack traffic competes for them.
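Oversubscription is just a ratio, and it tells you how much bandwidth a cross-rack flow can count on when every server talks at once. The port counts and speeds below are hypothetical, not our fabric's; substitute your own switch configuration.

```python
def oversubscription_ratio(down_ports: int, down_gbps: float, up_ports: int, up_gbps: float) -> float:
    """Leaf oversubscription: bandwidth toward the servers divided by bandwidth toward the spine."""
    return (down_ports * down_gbps) / (up_ports * up_gbps)


def worst_case_uplink_share_gbps(nic_gbps: float, ratio: float) -> float:
    """Per-NIC bandwidth toward the spine if every server port sends cross-rack at once
    and the uplinks are shared evenly (a simplification: real traffic rarely spreads this evenly)."""
    return nic_gbps / max(ratio, 1.0)


# Hypothetical leaf: 32 x 400 Gb/s server ports, 16 x 400 Gb/s spine uplinks.
ratio = oversubscription_ratio(32, 400, 16, 400)
print(f"{ratio:.1f}:1 oversubscribed, ~{worst_case_uplink_share_gbps(400, ratio):.0f} Gb/s per NIC across the spine")
```

With this hypothetical leaf, a 2:1 ratio halves what each NIC can push across the spine under full load, which is exactly the situation a rack-interleaved ring creates.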

Because we were using Ring and the scheduler had interleaved the racks, the ring stretched across the spine switch again and again. Data went Rank 0 (Rack A) -> Rank 1 (Rack B) -> Rank 2 (Rack A), so hop after hop crossed the oversubscribed spine. These “spine crossings” introduced massive jitter and congestion.
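A quick way to see the damage is to count how many ring hops change racks. The sketch below takes the rack of each position in ring order (wrapping from the last rank back to rank 0); the rack names are placeholders.

```python
from typing import Sequence


def spine_crossings(ring_racks: Sequence[str]) -> int:
    """Number of ring hops (including the wrap-around hop) whose endpoints sit in different racks."""
    n = len(ring_racks)
    return sum(ring_racks[i] != ring_racks[(i + 1) % n] for i in range(n))


interleaved = ["A", "B"] * 4          # rank 0 in rack A, rank 1 in rack B, ...
contiguous = ["A"] * 4 + ["B"] * 4    # ranks 0-3 in rack A, ranks 4-7 in rack B
print(spine_crossings(interleaved), spine_crossings(contiguous))
```

With eight ranks over two racks, the interleaved placement crosses the spine on all 8 hops, while the contiguous one crosses it only twice (once into rack B and once on the wrap-around back to rack A).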

3. Forcing the Algorithm

We confirmed the hypothesis by forcing NCCL to switch algorithms.

```bash
export NCCL_ALGO=TREE
```

The job started running! It was slower (lower bandwidth), but it didn’t stall. This confirmed the issue was congestion on the cross-rack links. Ring is especially sensitive to that: every step waits on the slowest hop, so one congested spine link paces all 128 GPUs.
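To make this A/B comparison repeatable, and to turn a silent hang into an explicit result, one option is to wrap the reproduction command in a timeout. The sketch below is hypothetical tooling, not something NCCL ships: it runs the same command once per NCCL_ALGO value and reports "stalled" when the timeout expires. It only sets the variable in the launcher's environment; with Open MPI you would also forward it to remote ranks (for example with mpirun -x NCCL_ALGO).

```python
import os
import subprocess
import sys
import time
from typing import Dict, List, Optional


def run_with_algo(cmd: List[str], algo: Optional[str], timeout_s: float) -> Dict[str, object]:
    """Run cmd with NCCL_ALGO set to algo (or removed when algo is None) and classify the outcome."""
    env = dict(os.environ)
    if algo is None:
        env.pop("NCCL_ALGO", None)  # let NCCL pick per collective
    else:
        env["NCCL_ALGO"] = algo
    start = time.monotonic()
    try:
        proc = subprocess.run(cmd, env=env, timeout=timeout_s, capture_output=True, text=True)
        status = "ok" if proc.returncode == 0 else f"exit {proc.returncode}"
    except subprocess.TimeoutExpired:
        status = "stalled"  # subprocess.run kills the direct child on timeout
    return {"algo": algo or "default", "status": status, "seconds": round(time.monotonic() - start, 1)}


if __name__ == "__main__":
    # Placeholder command: substitute your real launcher line (e.g. mpirun/srun running all_reduce_perf).
    demo = [sys.executable, "-c", "import os; print(os.environ.get('NCCL_ALGO'))"]
    for algo in (None, "Ring", "Tree"):
        print(run_with_algo(demo, algo, timeout_s=60))
```

Killing the launcher on timeout may leave ranks running on remote nodes, so pair this with your scheduler's job cleanup.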

The Fix: Topology-Aware Scheduling

Software patches (forcing Tree) are band-aids. The real fix was physics: we configured Slurm (or Kubernetes) to enforce rack affinity.

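```yaml
# Kubernetes affinity example (pod spec fragment)
affinity:
  # Hard requirement: stay in one zone (a zone spans many racks, so this alone is not rack affinity).
  nodeAffinity:
    requiredDuringSchedulingIgnoredDuringExecution:
      nodeSelectorTerms:
        - matchExpressions:
            - key: topology.kubernetes.io/zone
              operator: In
              values:
                - us-central1-a
  # Soft preference: pack this job's pods onto nodes that share a rack label.
  podAffinity:
    preferredDuringSchedulingIgnoredDuringExecution:
      - weight: 100
        podAffinityTerm:
          labelSelector:
            matchLabels:
              job-name: llm-train          # hypothetical: label shared by this job's pods
          topologyKey: example.com/rack    # hypothetical: node label holding the rack ID
```

By keeping the ring inside leaf-switch domains as much as possible, we could switch back to Ring (NCCL_ALGO=Ring) and reclaim our bandwidth.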
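A complementary, scheduler-independent step (not part of the fix described above) is to order ranks so that consecutive ranks share a rack. Our working assumption is that NCCL's inter-node ring largely follows node order by rank, so a rack-sorted hostfile keeps most ring hops under one leaf switch; verify with NCCL_DEBUG_SUBSYS=GRAPH that the resulting rings look the way you expect. The host-to-rack map below is illustrative, and the output uses Open MPI's "host slots=N" hostfile syntax.

```python
from typing import Dict, List


def rack_contiguous_hosts(host_to_rack: Dict[str, str]) -> List[str]:
    """Sort hosts by (rack, hostname) so every rack's hosts are adjacent in rank order."""
    return sorted(host_to_rack, key=lambda host: (host_to_rack[host], host))


def hostfile_lines(hosts: List[str], gpus_per_node: int) -> List[str]:
    """Open MPI hostfile entries, one per host, in the given (rank) order."""
    return [f"{host} slots={gpus_per_node}" for host in hosts]


# Hypothetical allocation where the scheduler interleaved two racks.
allocation = {"node01": "rackA", "node17": "rackB", "node02": "rackA", "node18": "rackB"}
print("\n".join(hostfile_lines(rack_contiguous_hosts(allocation), gpus_per_node=8)))
```

Sorting by rack label first and hostname second is deliberately simple; if your racks map onto leaf switches one-to-one, that is enough to make rank order follow the physical layout.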

Testing Priorities: A Field Guide

When you face a stalled training job, don’t guess. Follow this priority list:

- Pairwise Bandwidth Test (all_reduce_perf -b 8 -e 128M -f 2 -g 1):
  - Scenario: Run this first between every pair of nodes.
  - Goal: Identify the one bad cable or transceiver. If Node A <-> Node B is slow but Node A <-> Node C is fast, Node B’s NIC is the suspect.

- Topology Check (nvidia-smi topo -m):
  - Scenario: Start of every job.
  - Goal: Make sure each GPU reaches its NIC over a short PCIe path (PIX or PXB) rather than through the CPU host bridge (PHB) or across CPU sockets (SYS).

- NCCL Replay:
  - Scenario: When you can’t reproduce the hang in a synthetic test.
  - Goal: Capture the exact sequence of NCCL calls from your PyTorch training loop and replay it in isolation.
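Testing every pair by hand does not scale, but the pairs can be scheduled in rounds so that no node is in two tests at once (the classic round-robin "circle" method), and the results can be scored automatically. The sketch below is hypothetical tooling: it plans the rounds, converts all_reduce_perf's algorithm bandwidth to bus bandwidth with the 2(N-1)/N all-reduce factor documented by nccl-tests, and ranks nodes by how many slow pairs they appear in. The 0.8 × median threshold is an arbitrary starting point, not a standard.

```python
from collections import Counter
from statistics import median
from typing import Dict, List, Optional, Sequence, Tuple

Pair = Tuple[str, str]


def round_robin_rounds(nodes: Sequence[str]) -> List[List[Pair]]:
    """Circle method: every pair appears exactly once and no node is in two pairs of the same round.
    Takes N-1 rounds for even N and N rounds for odd N (one node sits out each round)."""
    ring: List[Optional[str]] = list(nodes)
    if len(ring) % 2:
        ring.append(None)  # placeholder partner meaning "sits out this round"
    n = len(ring)
    rounds = []
    for _ in range(n - 1):
        pairs = [(ring[i], ring[n - 1 - i]) for i in range(n // 2)]
        rounds.append([(a, b) for a, b in pairs if a is not None and b is not None])
        ring = [ring[0], ring[-1]] + ring[1:-1]  # keep the first node fixed, rotate the rest
    return rounds


def allreduce_busbw(algbw: float, nranks: int) -> float:
    """nccl-tests all-reduce bus bandwidth: algbw * 2(N-1)/N (equal to algbw for 2 ranks)."""
    return algbw * 2 * (nranks - 1) / nranks


def rank_suspects(pair_busbw: Dict[Pair, float], threshold: float = 0.8) -> List[str]:
    """Nodes ordered by how many pairs fall below threshold * median bus bandwidth."""
    cutoff = threshold * median(pair_busbw.values())
    slow: Counter = Counter()
    for (a, b), bw in pair_busbw.items():
        if bw < cutoff:
            slow[a] += 1
            slow[b] += 1
    return [node for node, _ in slow.most_common()]


for i, rnd in enumerate(round_robin_rounds(["n1", "n2", "n3", "n4"]), start=1):
    print(f"round {i}: {rnd}")
```

A node that shows up in many slow pairs while its other pairs look normal matches the "Node B’s NIC is the suspect" pattern above; a node whose pairs are all slow is more likely the culprit than the partners it was tested with.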

Lesson Learned: Trust Physics, Not Software

Takeaway: “Ready” just means the OS booted. If your Ring topology crosses oversubscribed spine switches, you will see stalls. Always run a Pairwise Bandwidth Test (all_reduce_perf) that matches your job’s topology before starting the main run.