AI at Scale

The Case for SparseCore

Large-scale recommendation models involve a two-part process. First, a "sparse lookup" phase retrieves data from memory, a task that is challenging for standard GPUs. Second, a "dense computation" phase handles intense calculations, where GPUs perform well. This disparity creates a performance bottleneck. Google's TPUs address this with a specialized SparseCore processor, specifically designed for the lookup phase. By optimizing for both memory-intensive lookups and heavy computations, this integrated architecture provides superior performance for large-scale models.

In the domain of artificial intelligence, not all workloads are created equal. While generative models like LLMs capture the public’s imagination, a significant portion of industrial AI—powering everything from search ranking to product recommendations—relies on a different class of model with unique architectural demands. Understanding the hardware’s role in executing these models is critical to achieving performance at scale.

Embedding Models vs. Large Language Models

An embedding model is a type of neural network designed to transform high-dimensional, sparse categorical features into low-dimensional, dense vector representations, or “embeddings.” Its primary function is to map discrete identifiers, such as user IDs or product SKUs, into a continuous vector space where semantic relationships can be captured mathematically. For example, similar products will have vectors that are closer together in this space.
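To make the idea of "closer together" concrete, here is a minimal sketch in plain Python. The SKU names and vector values are invented for illustration; a real model learns its vectors during training and uses far more dimensions.

```python
import math

# Hypothetical toy embedding table: each product ID maps to a 3-dimensional vector.
# The values are made up; real embeddings are learned and much wider.
embedding_table = {
    "sku_running_shoe": [0.9, 0.1, 0.0],
    "sku_trail_shoe": [0.8, 0.2, 0.1],
    "sku_coffee_maker": [0.0, 0.1, 0.9],
}


def cosine_similarity(a, b):
    """Return the cosine of the angle between vectors a and b."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


shoe_vs_shoe = cosine_similarity(
    embedding_table["sku_running_shoe"], embedding_table["sku_trail_shoe"]
)
shoe_vs_coffee = cosine_similarity(
    embedding_table["sku_running_shoe"], embedding_table["sku_coffee_maker"]
)

print(f"running shoe vs trail shoe:   {shoe_vs_shoe:.3f}")
print(f"running shoe vs coffee maker: {shoe_vs_coffee:.3f}")
```

With these made-up values, the two shoe vectors score a much higher similarity than the shoe and the coffee maker, which is the property downstream ranking and retrieval systems rely on.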

This contrasts with Large Language Models (LLMs), which are typically autoregressive transformers designed for sequence generation. While LLMs internally use embeddings for tokens, their primary task is generative. Embedding models, conversely, are often a foundational component in larger retrieval, classification, or ranking systems, where the generated embedding vectors are the key output for downstream processing.

The Biphasic Execution Pipeline

The computational pipeline for a typical large-scale embedding model is biphasic, characterized by two distinct bottlenecks:

- Sparse Lookup/Gather Operations: This initial phase is memory-bound. It takes a batch of sparse input IDs and performs a lookup to retrieve the corresponding vectors from an extremely large embedding table. This process is dominated by irregular, data-dependent memory access patterns. The core operation is a gather, which is notoriously inefficient on parallel processors not designed for it.

- Dense Computation: This second phase is compute-bound. The dense embedding vectors retrieved in the first phase are processed through subsequent neural network layers, such as Multi-Layer Perceptrons (MLPs). This stage consists primarily of dense matrix multiplications (matmul) and activations, which are highly parallelizable.
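The two phases can be sketched in a few lines of plain Python. This is a toy illustration, not how any production framework implements them: the table size, dimensions, and the function names `sparse_lookup` and `dense_layer` are all assumptions chosen for readability.

```python
import random

random.seed(0)

# Toy sizes (hypothetical); production tables can have billions of rows.
NUM_ROWS, EMBED_DIM, HIDDEN_DIM = 1000, 4, 3

embedding_table = [
    [random.uniform(-1, 1) for _ in range(EMBED_DIM)] for _ in range(NUM_ROWS)
]
weights = [
    [random.uniform(-1, 1) for _ in range(HIDDEN_DIM)] for _ in range(EMBED_DIM)
]


def sparse_lookup(table, ids):
    """Phase 1 (gather): fetch one row per ID.

    Which rows are read depends entirely on the input data, so the
    memory access pattern is irregular.
    """
    return [table[i] for i in ids]


def dense_layer(batch, w):
    """Phase 2: matrix multiply followed by a ReLU activation."""
    out_dim = len(w[0])
    return [
        [max(0.0, sum(x * w[k][j] for k, x in enumerate(row))) for j in range(out_dim)]
        for row in batch
    ]


ids = [random.randrange(NUM_ROWS) for _ in range(8)]  # a batch of sparse IDs
gathered = sparse_lookup(embedding_table, ids)        # memory-bound step
activations = dense_layer(gathered, weights)          # compute-bound step

print(len(gathered), "vectors gathered;", len(activations[0]), "outputs per sample")
```

Note how little arithmetic the first function performs: it only moves data. All of the multiply-add work sits in the second function, which is why the two phases stress different parts of the hardware.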

When executed on general-purpose parallel architectures like NVIDIA GPUs, this pipeline presents a significant challenge. GPUs excel at the compute-bound dense operations in Phase 2 due to their SIMT (Single Instruction, Multiple Thread) architecture. However, they are far less efficient at the irregular gather operations in Phase 1. Data-dependent addressing produces uncoalesced memory accesses and poor cache locality, so the lookup becomes limited by memory bandwidth and latency, leaving the powerful compute units severely underutilized.
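A rough way to see the gap is to compare arithmetic intensity, the number of floating-point operations per byte moved, for each phase. The sketch below is a back-of-the-envelope estimate under stated assumptions: float32 values, a sum-pooled lookup costing roughly one add per element fetched, and a dense layer where every operand is moved exactly once. The function names and the example sizes are hypothetical.

```python
BYTES_PER_FLOAT = 4  # assumes float32 storage


def pooled_lookup_intensity(batch, lookups_per_sample, embed_dim):
    """FLOPs per byte for a sum-pooled embedding lookup (approximate)."""
    elements = batch * lookups_per_sample * embed_dim
    flops = elements                          # roughly one add per fetched element
    bytes_moved = elements * BYTES_PER_FLOAT  # every fetched element is read once
    return flops / bytes_moved


def dense_layer_intensity(batch, in_dim, out_dim):
    """FLOPs per byte for a (batch x in_dim) @ (in_dim x out_dim) matmul."""
    flops = 2 * batch * in_dim * out_dim      # one multiply and one add per term
    bytes_moved = BYTES_PER_FLOAT * (
        batch * in_dim + in_dim * out_dim + batch * out_dim
    )  # inputs, weights, outputs, each moved once (idealized reuse)
    return flops / bytes_moved


lookup = pooled_lookup_intensity(batch=4096, lookups_per_sample=32, embed_dim=128)
dense = dense_layer_intensity(batch=4096, in_dim=1024, out_dim=1024)

print(f"lookup: {lookup:.2f} FLOPs/byte")
print(f"dense:  {dense:.2f} FLOPs/byte")
```

Under these assumptions the lookup performs a fraction of one operation per byte regardless of batch size, while the dense layer performs hundreds. A processor tuned for high arithmetic intensity therefore spends the lookup phase waiting on memory.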

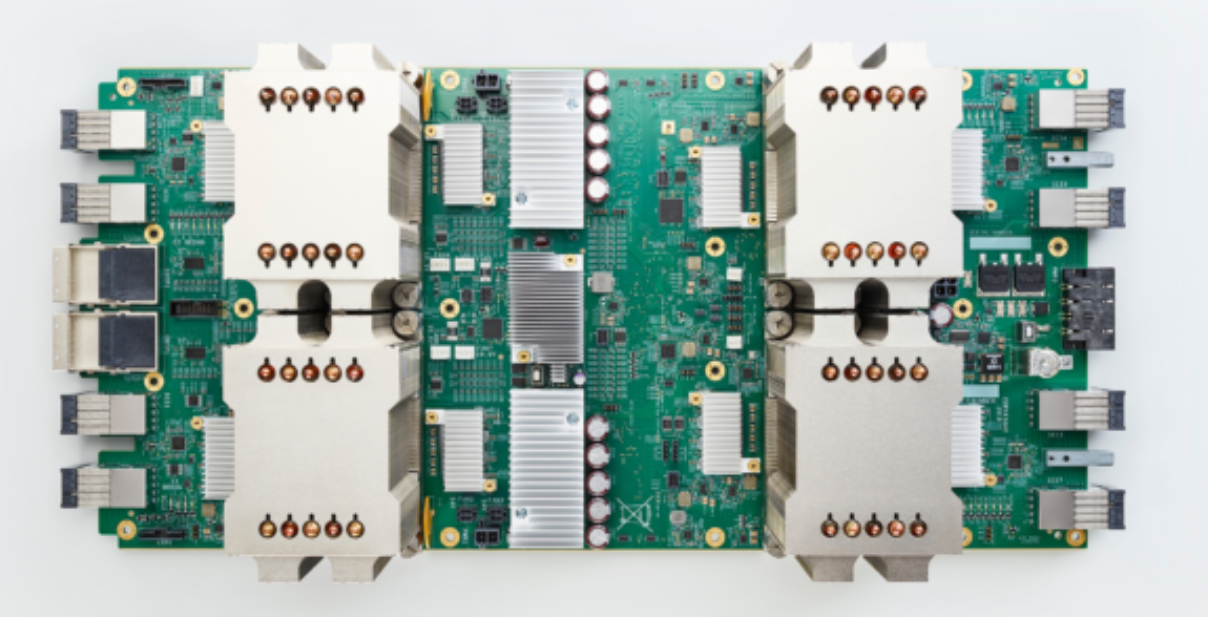

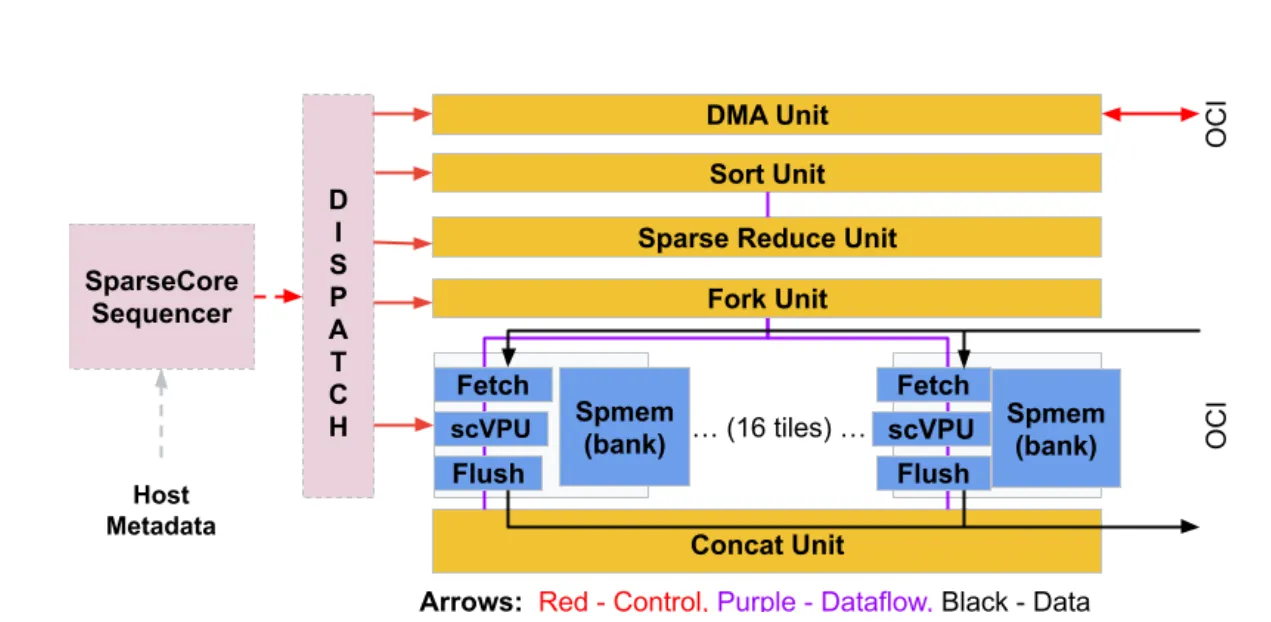

TPU SparseCore

Google’s Tensor Processing Unit (TPU) architecture addresses this challenge directly through hardware specialization. Newer generations, like TPU v5p/v6, integrate SparseCores, which are specialized dataflow processors designed explicitly to accelerate the memory-bound lookup phase of embedding-heavy workloads.

The SparseCore is not a general-purpose core; it is purpose-built to handle the irregular memory access patterns inherent in processing massive, sparse tensors. It is designed to execute gather operations efficiently, targeting the primary bottleneck found on general-purpose architectures.

Co-Design for End-to-End Performance

The key differentiator for the TPU is its architectural co-design. The chip integrates both the SparseCore for Phase 1 and the Matrix-Multiply Unit (MXU), a systolic array, for Phase 2.

- The SparseCore efficiently handles the memory-bound sparse lookups.

- The MXU provides extreme performance for the compute-bound dense matrix multiplications.

This combination of specialized hardware for both phases of the pipeline allows the TPU to maintain high utilization and throughput across the end-to-end execution of the model. Designing hardware that conforms to the computational pattern of the workload, rather than forcing the workload onto a general-purpose architecture, yields significant gains in performance and efficiency. This specialization is why TPUs with SparseCore are so well suited to large-scale ranking and recommendation systems.
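The value of accelerating both phases, rather than only one, follows from Amdahl's law. The sketch below uses invented numbers: it assumes the lookup phase takes half of the baseline runtime and that each phase can be sped up independently. These are not measurements of any real chip.

```python
def overall_speedup(lookup_fraction, lookup_speedup, dense_speedup):
    """Amdahl's law for a two-phase pipeline.

    lookup_fraction: share of baseline runtime spent in the sparse lookup phase.
    lookup_speedup, dense_speedup: per-phase acceleration factors.
    """
    dense_fraction = 1.0 - lookup_fraction
    new_time = lookup_fraction / lookup_speedup + dense_fraction / dense_speedup
    return 1.0 / new_time


# Hypothetical workload: half the baseline time is spent in lookups.
dense_only = overall_speedup(lookup_fraction=0.5, lookup_speedup=1.0, dense_speedup=10.0)
both_phases = overall_speedup(lookup_fraction=0.5, lookup_speedup=10.0, dense_speedup=10.0)

print(f"accelerate dense only:  {dense_only:.2f}x")
print(f"accelerate both phases: {both_phases:.2f}x")
```

In this hypothetical case, a tenfold faster dense phase alone cannot even double end-to-end throughput, because the untouched lookup phase dominates the remaining runtime. Speeding up both phases is what recovers the full gain.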