The Compute-to-Cashflow Gap

The AI industry is shifting from celebrating large compute budgets to hunting for efficiency. Your competitive advantage is no longer your GPU count, but your cost-per-inference.

This post demystifies hardware acceleration and the competing sparsity philosophies of Google's TPUs and NVIDIA's GPUs. It connects novel architectures, such as Mixture-of-Experts, to hardware design strategy and the resulting trade-offs in performance, cost, and developer ecosystem.

This post contrasts the switching technologies behind NVIDIA's GPUs and Google's TPUs. Understanding the two approaches is key to matching modern AI workloads, which demand heavy data movement, to the right hardware.

It's not just about specs. This post breaks down the core trade-off between the GPU's versatile power and the TPU's hyper-efficient, specialized design for AI workloads.

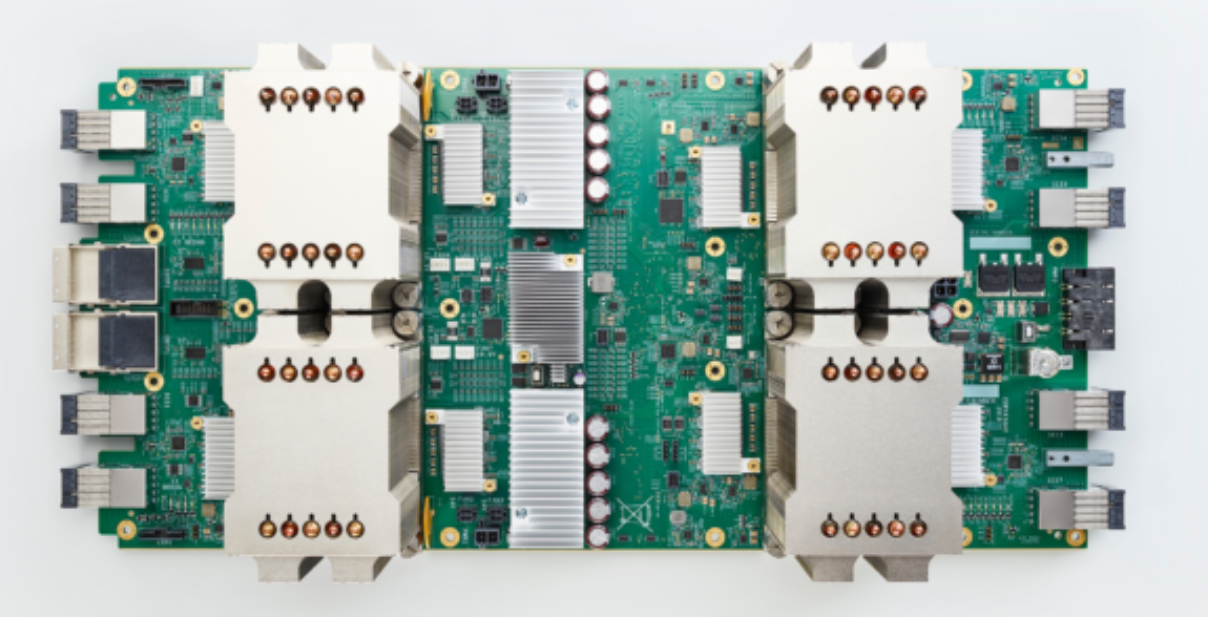

Large-scale recommendation models run in two phases. First, a "sparse lookup" phase gathers embedding rows from memory. The access pattern is irregular and performs little arithmetic per byte fetched, which makes it a poor fit for standard GPUs. Second, a "dense computation" phase performs heavy matrix calculations, where GPUs do well. Because the two phases stress different parts of the hardware, the lookup phase tends to become the bottleneck. Google's TPUs address this with SparseCore, a specialized processor designed for the lookup phase. By pairing hardware for memory-intensive lookups with hardware for dense computation, this integrated architecture targets both phases of large-scale models rather than only the dense one.
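To make the two phases concrete, here is a minimal pure-Python sketch. It is an illustration under simplifying assumptions, not how a TPU or GPU actually executes these steps: the function names (`sparse_lookup`, `dense_layer`, `phase_costs`), the sum-pooling choice, and the single ReLU layer are all hypothetical and chosen for brevity. The cost model simply counts values read and arithmetic operations, ignoring caches and everything else about real hardware.

```python
def sparse_lookup(table, ids):
    """Phase 1 (sparse): gather embedding rows by ID and sum-pool them.

    The work is dominated by fetching scattered rows from memory;
    only one addition is done per value fetched.
    """
    dim = len(table[0])
    pooled = [0.0] * dim
    for i in ids:
        row = table[i]  # irregular, data-dependent memory access
        for d in range(dim):
            pooled[d] += row[d]
    return pooled


def dense_layer(x, weights, bias):
    """Phase 2 (dense): one fully connected layer followed by ReLU."""
    return [
        max(0.0, sum(w * v for w, v in zip(w_row, x)) + b)
        for w_row, b in zip(weights, bias)
    ]


def phase_costs(batch, ids_per_example, dim, hidden):
    """Simplified (assumed) cost model: values read vs. operations done.

    Lookup: every example fetches its own rows, so reads grow with batch.
    Dense: the weight matrix is read once and reused across the batch.
    """
    return {
        "lookup_reads": batch * ids_per_example * dim,
        "lookup_adds": batch * ids_per_example * dim,
        "dense_weight_reads": dim * hidden,
        "dense_macs": batch * dim * hidden,
    }


if __name__ == "__main__":
    table = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]  # toy embedding table
    pooled = sparse_lookup(table, [0, 2])
    hidden = dense_layer(pooled, [[1.0, 0.0], [-1.0, -1.0]], [0.5, 0.0])
    print(pooled, hidden)
    print(phase_costs(batch=4, ids_per_example=3, dim=2, hidden=5))
```

In this toy cost model the lookup phase does about one operation per value it reads, while the dense phase does `batch` multiply-accumulates per weight it reads. That gap in arithmetic per byte is one way to see why the two phases benefit from different hardware.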