· AI at Scale · 7 min read

Generality vs. Specialization - The Real Difference Between GPUs and TPUs

It's not just about specs. This post breaks down the core trade-off between the GPU's versatile power and the TPU's hyper-efficient, specialized design for AI workloads.

This post refers to the TPU v6 and previous generations.

What’s the difference between TPUs and NVIDIA’s Blackwell GPUs?

When we speak with customers who are comparing spec sheets, the number one question is almost always why the key metrics differ so starkly.

For context: on TPUs, the on-chip memory units (CMEM, VMEM, SMEM) are much larger than the L1 and L2 caches on GPUs, while the HBM is much smaller. GPUs are the mirror image: smaller L1 and L2 caches (256KB per SM and 50MB, respectively, for the H100), larger HBM (80GB for the H100), and more than ten thousand cores.

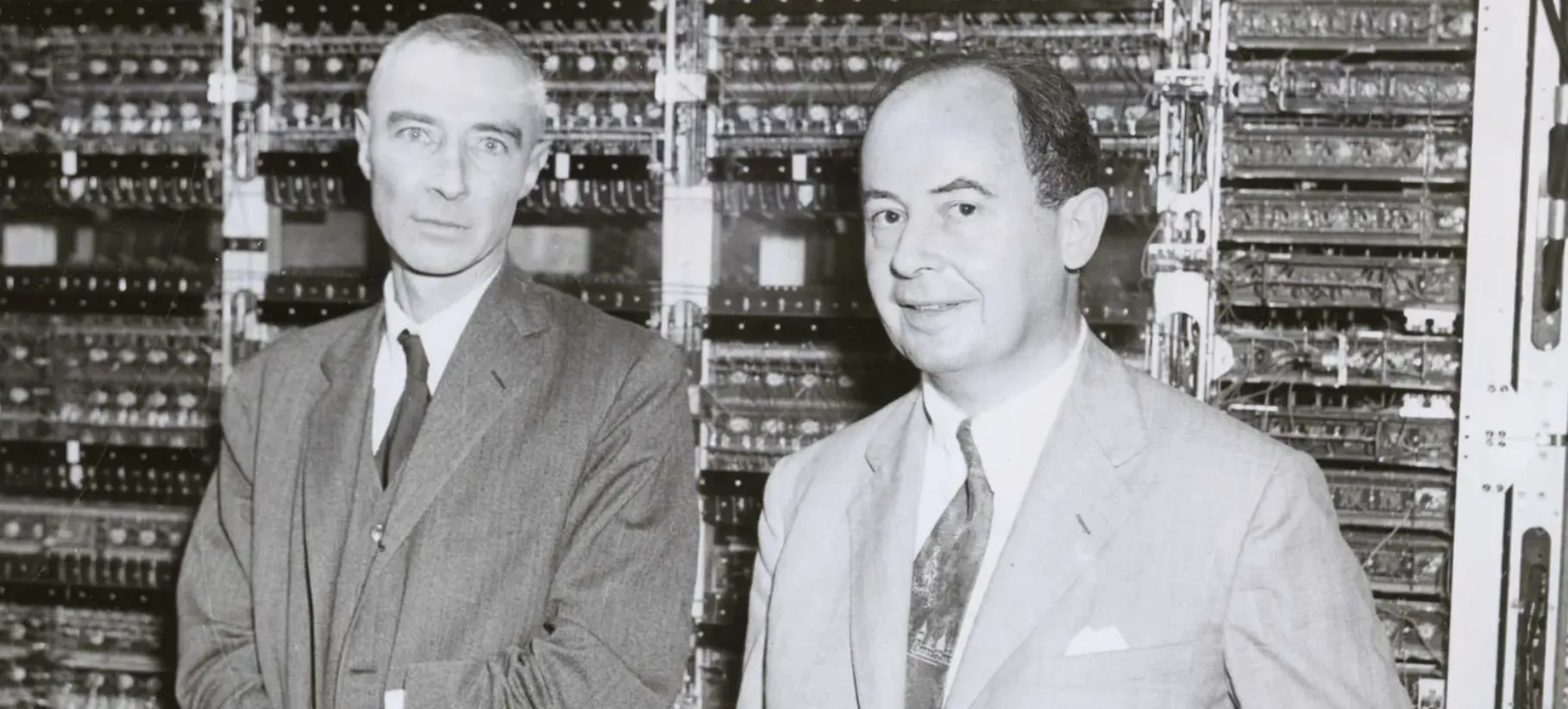

The idea behind this post is to help clarify the two fundamentally divergent philosophies for solving a 75-year-old problem in computing: the Von Neumann bottleneck.

To understand why these AI workhorses are so different, we have to go back to the start of modern computing and the architectural decisions made at the time.

The Von Neumann Bottleneck

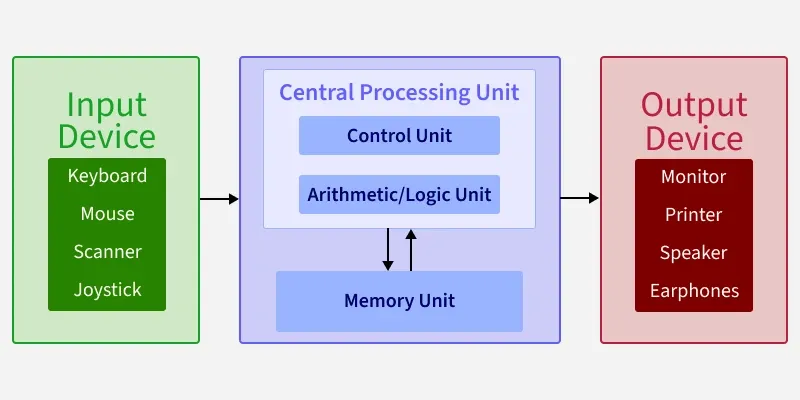

In 1945, John von Neumann laid out a blueprint for a “stored-program computer” where program instructions and the data they operate on reside in the same, unified memory.

This was a revolutionary concept that gave computers their incredible flexibility. However, it also created an inherent traffic jam. Because instructions and data have to travel across the same shared pathway (the bus) to get to the processor, the system can’t fetch a new instruction and the data for the current one at the same time.  For decades, this “von Neumann bottleneck” was a manageable issue.

With the rise of AI, it has become an acute crisis. Training a large language model involves moving trillions of parameters between memory and the processor. This constant data shuffling is not just slow; it’s incredibly energy-intensive. In fact, the physical act of moving data can consume orders of magnitude more energy than the computation itself.

This is the core problem that both GPUs and TPUs are designed to solve, but they do so in dramatically different ways.

The GPU Approach: Brute-Forcing the Bottleneck with Parallelism

A modern GPU, like those in the Blackwell series, is the ultimate evolution of the von Neumann architecture. It doesn’t fundamentally change the model of separated memory and compute; instead, it engineers a system so massively parallel that it can tolerate the bottleneck’s effects.

A GPU contains thousands of cores. These are divided into two main types:

- CUDA Cores: These are the general-purpose workhorses, capable of handling a wide range of parallel tasks, from graphics rendering to data pre-processing in an AI pipeline.

- Tensor Cores: These are specialized circuits designed for one job: performing the matrix multiply-accumulate operations that are the heart of neural networks, doing so with incredible speed.

It’s a brute-force solution that accepts the bottleneck and builds a system powerful enough to work around it.

The GPU’s strategy is latency hiding. It keeps tens of thousands of threads ready to execute. When one group of threads stalls while waiting for data from memory, the GPU’s hardware scheduler instantly switches to another group that is ready to compute. This keeps the thousands of CUDA and Tensor cores constantly busy with useful work, effectively masking the time spent waiting on the memory bus.
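
To make the latency-hiding idea concrete, here is a minimal Python sketch of one execution unit shared by several groups of threads. Everything in it is an assumption for illustration: the function name `simulate_utilization`, the 4 compute cycles per burst, the 40 cycles of memory wait, and the fixed-priority pick. Real GPU schedulers are far more sophisticated.

```python
def simulate_utilization(num_groups, compute_cycles=4, memory_cycles=40,
                         total_cycles=10_000):
    """Fraction of cycles in which the single execution unit does useful work.

    Toy model (all numbers are illustrative assumptions): each thread group
    computes for `compute_cycles`, then stalls for `memory_cycles` on a load.
    """
    ready_at = [0] * num_groups                 # cycle at which each group's data arrives
    remaining = [compute_cycles] * num_groups   # compute cycles left before the next load
    busy = 0
    for cycle in range(total_cycles):
        # The "scheduler": run the first group whose data has arrived.
        for g in range(num_groups):
            if ready_at[g] <= cycle:
                busy += 1
                remaining[g] -= 1
                if remaining[g] == 0:
                    # The group issues a load and stalls until it returns.
                    ready_at[g] = cycle + 1 + memory_cycles
                    remaining[g] = compute_cycles
                break
        # If no group was ready, the unit idles for this cycle.
    return busy / total_cycles


if __name__ == "__main__":
    for groups in (1, 4, 11):
        print(groups, round(simulate_utilization(groups), 2))
```

With these made-up numbers, a single group keeps the unit busy only about 9% of the time, and it takes roughly (4 + 40) / 4 = 11 groups before the memory wait is fully hidden. The bottleneck is still there; there is simply always someone else ready to run.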

https://developer.nvidia.com/blog/nvidia-hopper-architecture-in-depth/

The TPU Approach: Eliminating the Bottleneck with Specialization

Google’s Tensor Processing Unit (TPU) takes a more radical approach. As an Application-Specific Integrated Circuit (ASIC), it sacrifices the GPU’s generality for extreme efficiency on AI workloads. The TPU’s design philosophy is built on two key pillars that work in concert to attack the von Neumann bottleneck at its source.

1. The Hardware: Systolic Arrays

At the heart of a TPU is a systolic array, a large two-dimensional grid of simple processing elements, each performing a multiply-accumulate operation. Instead of a processor repeatedly fetching data from memory, the TPU establishes a dataflow paradigm. Model weights are pre-loaded into the array, and input data is streamed through it in a rhythmic, wave-like pattern.  Each processing element performs its calculation and passes the result directly to its neighbor.

The crucial breakthrough is that once the data is flowing, the core matrix multiplication needs no further memory accesses: intermediate results move from neighbor to neighbor instead of going back to memory. This spatial computing model effectively eliminates the von Neumann bottleneck for the most intensive part of the workload, drastically reducing data movement and the associated energy cost, a saving that can be passed on to end customers.
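
The dataflow described above can be sketched in a few lines of plain Python. This is a cycle-by-cycle toy of a weight-stationary array, not a description of any real TPU: the function name `systolic_matmul`, the skewed input feed, and the register layout are all assumptions made for illustration. Each processing element holds one weight, takes an activation from its left neighbor and a partial sum from the neighbor above, and passes both on.

```python
def systolic_matmul(X, W):
    """Compute Y = X @ W on a simulated K x N weight-stationary systolic array.

    X is M x K (activations streamed in), W is K x N (weights pre-loaded).
    Returns (Y, cycles). Illustrative toy, not real hardware behavior.
    """
    M, K, N = len(X), len(W), len(W[0])
    a_reg = [[0] * N for _ in range(K)]   # activation latched by each PE last cycle
    p_reg = [[0] * N for _ in range(K)]   # partial sum latched by each PE last cycle
    Y = [[0] * N for _ in range(M)]
    cycles = M + K + N - 2                # time for the last wavefront to drain
    for t in range(cycles):
        new_a = [[0] * N for _ in range(K)]
        new_p = [[0] * N for _ in range(K)]
        for k in range(K):
            for n in range(N):
                if n == 0:
                    # Left edge: row k is fed X[:, k], delayed (skewed) by k cycles.
                    m = t - k
                    a_in = X[m][k] if 0 <= m < M else 0
                else:
                    a_in = a_reg[k][n - 1]            # from the left neighbor
                p_in = p_reg[k - 1][n] if k > 0 else 0  # from the neighbor above
                new_a[k][n] = a_in                      # pass activation to the right
                new_p[k][n] = p_in + a_in * W[k][n]     # multiply-accumulate
        a_reg, p_reg = new_a, new_p
        # Finished sums fall out of the bottom row, one wavefront per cycle.
        for n in range(N):
            m = t - (K - 1) - n
            if 0 <= m < M:
                Y[m][n] = p_reg[K - 1][n]
    return Y, cycles


if __name__ == "__main__":
    X = [[1, 2], [3, 4], [5, 6]]
    W = [[7, 8, 9], [10, 11, 12]]
    Y, cycles = systolic_matmul(X, W)
    print(Y, cycles)
```

Note that inside the loop the only reads of `X` happen at the left edge of the array and `W` is never reloaded; everything else is neighbor-to-neighbor traffic, which is the point of the design.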

2. Hardware-Software Co-Design and Compilation

The TPU’s rigid hardware is made powerful by its tight integration with software, particularly the XLA (Accelerated Linear Algebra) compiler and frameworks like JAX.

Because the computational graph of a neural network is predictable, the XLA compiler can analyze the entire program Ahead-of-Time (AoT). This allows it to orchestrate the perfect sequence of data movements from main memory to on-chip buffers, feeding the systolic array just in time. This predictability means TPUs don’t need complex, power-hungry caches, further boosting efficiency.

Frameworks like JAX leverage this with Just-in-Time (JIT) compilation. When a JAX function is marked with @jax.jit, it is traced on its first run to create a static computation graph. This graph is then handed to XLA, which performs AoT-style optimizations like fusing multiple operations into a single efficient kernel before compiling it into a static binary for the TPU.

This hybrid JIT/AoT model unlocks significant performance but also introduces the TPU’s main trade-off: a lack of flexibility for workloads with dynamic shapes or control flow.
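
A toy version of trace-then-compile shows where that trade-off comes from. The sketch below is plain Python and is not how JAX or XLA are implemented; `toy_jit`, `Tracer`, and `Program` are hypothetical names invented for this example. The function is run once with symbolic placeholders to record a static list of operations, the recording is cached per input shape, and any attempt to branch on a traced value fails.

```python
import operator


class Program:
    """A recorded, static list of elementwise operations (the 'compiled' graph)."""

    def __init__(self, num_inputs):
        self.ops = []                 # (op, lhs_slot, (kind, rhs)) tuples
        self.num_slots = num_inputs   # inputs occupy the first slots
        self.output_slot = None

    def run(self, *vectors):
        slots = list(vectors)
        for op, lhs, (kind, rhs) in self.ops:
            left = slots[lhs]
            right = slots[rhs] if kind == "slot" else [rhs] * len(left)
            slots.append([op(a, b) for a, b in zip(left, right)])
        return slots[self.output_slot]


class Tracer:
    """Symbolic placeholder that records operations instead of computing them."""

    def __init__(self, program, slot):
        self.program, self.slot = program, slot

    def _emit(self, op, other):
        rhs = ("slot", other.slot) if isinstance(other, Tracer) else ("const", other)
        self.program.ops.append((op, self.slot, rhs))
        self.program.num_slots += 1
        return Tracer(self.program, self.program.num_slots - 1)

    def __add__(self, other):
        return self._emit(operator.add, other)

    def __mul__(self, other):
        return self._emit(operator.mul, other)

    __radd__, __rmul__ = __add__, __mul__   # both ops are commutative

    def __bool__(self):
        # A static graph cannot contain a branch that depends on runtime values.
        raise TypeError("value-dependent Python control flow cannot be traced")


def toy_jit(fn):
    """Trace `fn` once per distinct input shape and reuse the recording."""
    cache = {}

    def wrapper(*vectors):
        shape_key = tuple(len(v) for v in vectors)
        if shape_key not in cache:   # new shape: trace and "compile" again
            program = Program(len(vectors))
            out = fn(*(Tracer(program, i) for i in range(len(vectors))))
            program.output_slot = out.slot
            cache[shape_key] = program
            wrapper.compile_count += 1
        return cache[shape_key].run(*vectors)

    wrapper.compile_count = 0
    return wrapper


@toy_jit
def scale_and_shift(x, y):
    return x * 2 + y


if __name__ == "__main__":
    print(scale_and_shift([1, 2, 3], [10, 20, 30]))
    print(scale_and_shift([4, 5, 6], [0, 0, 0]))   # same shape: cached
    print(scale_and_shift([1, 2], [3, 4]))         # new shape: traced again
    print("compiles:", scale_and_shift.compile_count)
```

In this toy, every new input length costs another trace, and a function that writes `if x:` on a traced value cannot be recorded at all. Those are the same two pressure points, dynamic shapes and data-dependent control flow, that the paragraph above calls out.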

So Where Does Each Architecture Win?

The difference between the GPU and the TPU approach to the bottleneck leads to clear advantages in different scenarios. The GPU is a versatile generalist, while the TPU is a hyper-efficient specialist.

Here’s how they stack up against various workload types:

| Workload Type | GPU (e.g., Blackwell) Suitability | TPU Suitability |
|---|---|---|
| Large-Scale Model Training (Dense) | High: Excellent performance and a mature, flexible ecosystem with CUDA. Power consumption is a key consideration at scale. | Very High: Architecturally optimized for this task. The systolic array and compiler co-design offer superior performance-per-watt and TCO. |
| Real-Time Inference (Low Batch Size) | Very High: High clock speeds and optimized software stacks (e.g., TensorRT) provide industry-leading low latency for single inputs. | Medium: Can be effective, but performance is suboptimal if batches cannot be formed to keep the systolic array fully utilized. |
| High-Throughput Inference (Large Batch) | High: Can batch inputs effectively to achieve high throughput, leveraging its massive parallelism. | Very High: The ideal use case for the TPU, maximizing the utilization and efficiency of the systolic array. |
| Research & Prototyping (Novel Architectures) | Very High: The CUDA ecosystem offers unparalleled flexibility and fine-grained control for developing new algorithms and custom operations. | Low: The reliance on the XLA compiler to recognize specific patterns makes it difficult to implement operations that are not already optimized. This is changing as the software evolves, e.g. the recent TPU support in vLLM. |
| Sparse Model Training/Inference | Medium: The more flexible memory system and execution model handle sparsity better than TPUs, but it’s still less efficient than dense computation. | Low: The rigid structure of the systolic array is highly inefficient for sparse matrices, performing many wasted computations on zero-valued elements. |
| General HPC & Graphics | High: Designed as a general-purpose parallel processor, it excels at a wide range of scientific computing and graphics rendering tasks. | Not Applicable: The TPU is an ASIC and lacks the hardware and instruction set to perform general-purpose or graphics tasks. |
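
Two of the TPU entries in the table, low batch size and sparsity, come down to simple arithmetic, which the back-of-the-envelope Python sketch below tries to capture. It is a crude model under stated assumptions, not a performance predictor: the 128 x 128 array size is an assumed example value, the function names are hypothetical, and the model ignores weight loading and any overlap between successive matrix multiplications.

```python
def pipeline_utilization(batch_rows, array_rows=128, array_cols=128):
    """Share of multiply-accumulate slots doing useful work for one matmul.

    Crude model: streaming `batch_rows` inputs through an array takes
    batch_rows + array_rows + array_cols - 2 cycles (fill plus drain),
    but only batch_rows of those cycles' worth of work is useful.
    The 128 x 128 default is an assumption, not a spec-sheet value.
    """
    cycles = batch_rows + array_rows + array_cols - 2
    return batch_rows / cycles


def wasted_mac_fraction(weights):
    """Fraction of multiply-accumulates spent on zero-valued weights
    when a dense array processes a sparse weight matrix as-is."""
    total = sum(len(row) for row in weights)
    zeros = sum(1 for row in weights for w in row if w == 0)
    return zeros / total


if __name__ == "__main__":
    for batch in (1, 32, 1024):
        print(batch, round(pipeline_utilization(batch), 3))
    print(wasted_mac_fraction([[0, 1], [0, 0]]))
```

In this simplified picture, a single input spends almost all of its time filling and draining the array, while a large batch amortizes that cost; likewise, a dense array multiplies every zero in a sparse matrix just as diligently as every non-zero.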

Ultimately, the differences in specs between a Blackwell GPU and a TPU are not just about numbers; they reflect a deep, philosophical split in architectural design.

The future of AI will not be about one winning, but about leveraging the unique strengths of both.

The GPU is the pinnacle of flexible, parallel computing within the von Neumann world, while the TPU is a bold step outside of it for a specific, critical domain.