AI at Scale · 6 min read

Why More GPUs Is No Longer a Viable Strategy in 2026

As hardware lead times and power constraints hit a ceiling, the competitive advantage in AI has shifted from chip volume to architectural efficiency. This article explores how JAX, Pallas, and Megakernels are redefining Model FLOPs Utilization (MFU) and providing the hardware-agnostic Universal Adapter needed to escape vendor lock-in.

Idea in Brief

- The Problem: The “compute arms race” has reached a point of diminishing returns. Organizations are finding that simply adding more hardware - GPUs, TPUs, or custom ASICs - no longer yields a linear increase in model performance. Power constraints, hardware wait times, and software bottlenecks are creating a “Scarcity Trap.”

- The Hidden Metric: The true measure of AI leadership in 2025 is not the size of your cluster, but your Model FLOPs Utilization (MFU). Most organizations operate at 30-45% MFU; the winners are hitting 70%+.

- The Strategic Shift: To escape the trap, firms must move from an imperative programming model (PyTorch/CUDA) to a functional, compiler-driven architecture. JAX and the emergence of Megakernels via Pallas represent the new frontier of hardware-agnostic efficiency.

For the past five years, the playbook for AI leadership was simple: Raise more capital, buy more H100s, and scale the parameters. In 2023 and 2024, this brute-force approach worked. But as we move through 2025, the industry has hit a wall - not of intelligence, but of infrastructure.

We call this the Scarcity Trap. It is a state where the physical limits of the data center (power delivery and thermal ceilings) and the economic limits of silicon availability have made “throwing more hardware at the problem” a losing strategy. The competitive advantage has shifted from those who own the most chips to those who can extract the most “math” from the chips they already have.

To navigate this, technology executives must look past the hardware layer and into the soul of the machine: the compiler and the kernel. Specifically, the transition to JAX and the implementation of Megakernels are becoming the primary levers for ROI in the generative era.

MFU Narrative: The New “P/E Ratio” of AI

In the financial world, we look at the P/E ratio to understand value. In AI infrastructure, the equivalent metric is Model FLOPs Utilization (MFU).

MFU measures the percentage of a chip’s theoretical peak performance that is actually spent on the “useful work” of training a model. If an NVIDIA B200 or a Google TPU v6 offers a peak of hundreds to thousands of teraflops, but your model realizes only 35% of that, you are effectively paying a 65% “inefficiency tax” on every watt of power and every dollar of capital.
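As a rough sketch of how MFU is computed in practice, the snippet below uses the common approximation that training a dense transformer costs about 6 FLOPs per parameter per token (forward plus backward pass, ignoring attention FLOPs). The function name, model size, throughput, chip count, and per-chip peak are all hypothetical placeholders, not measurements of any specific hardware.

```python
def model_flops_utilization(n_params: float, tokens_per_sec: float,
                            n_chips: int, peak_flops_per_chip: float) -> float:
    """Fraction of aggregate peak FLOP/s spent on useful model math.

    Assumes ~6 FLOPs per parameter per training token (2 forward + 4 backward),
    a standard approximation for dense transformers that ignores attention
    FLOPs and does not count activation recomputation as useful work.
    """
    achieved = 6.0 * n_params * tokens_per_sec      # useful FLOP/s
    available = n_chips * peak_flops_per_chip       # theoretical FLOP/s
    return achieved / available


# Hypothetical run: 7B-parameter model on 8 chips at an assumed 1 PFLOP/s peak each.
mfu = model_flops_utilization(n_params=7e9, tokens_per_sec=8e4,
                              n_chips=8, peak_flops_per_chip=1e15)
inefficiency_tax = 1.0 - mfu
print(f"MFU = {mfu:.0%}, inefficiency tax = {inefficiency_tax:.0%}")
# MFU = 42%, inefficiency tax = 58%
```

Measuring tokens per second from the training loop and dividing by the published peak of the chip is usually enough to place a run on the 30-70% spectrum discussed here.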

Today, the industry average MFU for large-scale training sits between 30% and 45%. The “leaks” in this bucket are caused by data waiting to move between memory tiers and by the GPU sitting idle while the CPU decides what to do next. For a Fortune 500 company spending … $50M in “free” hardware. This is why JAX - designed from the ground up around the XLA compiler to maximize MFU - has become a standard choice at frontier labs like Anthropic, xAI, and DeepMind.
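The “free hardware” framing follows from a simple proportionality: for a fixed amount of useful model math, the chip-hours you pay for scale roughly with 1/MFU. A minimal sketch, assuming a hypothetical $10M budget and the 35% and 70% MFU figures used in this article; `freed_budget` is an illustrative helper, not a standard formula from any library.

```python
def freed_budget(budget: float, old_mfu: float, new_mfu: float) -> float:
    """Spend no longer needed for the same useful work after raising MFU.

    Assumes cost is proportional to chip-hours and that useful work per
    chip-hour scales linearly with MFU (a simplification that ignores
    changes in power draw, networking, and storage costs).
    """
    required = budget * old_mfu / new_mfu   # cost of the same work at the new MFU
    return budget - required


budget = 10_000_000  # hypothetical annual compute spend in dollars
print(f"capacity multiplier: {0.70 / 0.35:.1f}x")
print(f"freed budget: ${freed_budget(budget, 0.35, 0.70):,.0f}")
# capacity multiplier: 2.0x
# freed budget: $5,000,000
```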

“Squeezing the Juice”: The Shift to Megakernels

To understand why JAX is winning, we must look at how it handles the most expensive resource in the data center: Memory Bandwidth.

The Problem: The “Stop-and-Go” Architecture

Traditional AI frameworks operate on a “kernel-by-kernel” basis. Think of a model layer as a recipe with ten steps (Add, Multiply, Normalize, etc.). In a standard environment, the GPU executes Step 1 as its own kernel and writes the result back to main memory (HBM); Step 2 is then launched by the CPU as a separate kernel, reads that data back from memory, and proceeds.

This constant round-trip to the “slow” main memory is the primary killer of MFU. The GPU’s “engines” (ALUs) are incredibly fast, but they spend most of their time waiting for the “trucks” (memory buses) to deliver the data.

The Solution: Megakernels (Persistent Kernels)

A Megakernel changes the logic entirely. Instead of ten separate steps with ten round-trips to memory, a Megakernel fuses the entire operation into a single, massive program that stays on the chip.
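XLA’s automatic operator fusion is a milder, compiler-driven version of the same idea. In the sketch below, the same chain of elementwise steps runs op-by-op (each primitive dispatched as its own computation, roughly the stop-and-go pattern) and then under `jax.jit`, where XLA is free to fuse the chain so intermediates need not round-trip through HBM. The function `layer_steps` is a hypothetical stand-in for one layer’s elementwise work, and how many fused kernels result depends on the backend and XLA version.

```python
import jax
import jax.numpy as jnp


def layer_steps(x, scale, bias):
    # A hypothetical chain of elementwise "recipe steps".
    h = x * scale                  # Multiply
    h = h + bias                   # Add
    h = jax.nn.gelu(h)             # Activation
    h = h / (1.0 + jnp.abs(h))     # Normalize-like squashing
    return h


x = jnp.linspace(-3.0, 3.0, 1024).reshape(32, 32)
scale, bias = 1.5, 0.25

eager_out = layer_steps(x, scale, bias)      # op-by-op dispatch
fused_fn = jax.jit(layer_steps)              # traced once, compiled by XLA
fused_out = fused_fn(x, scale, bias)

# Optimized HLO after XLA's passes; elementwise chains typically show up
# inside "fusion" computations, though the exact grouping is backend-specific.
hlo = fused_fn.lower(x, scale, bias).compile().as_text()
print(bool(jnp.allclose(eager_out, fused_out, atol=1e-5)))
```

Automatic fusion like this covers simple elementwise chains; hand-written kernels become necessary when the compiler cannot see a profitable fusion on its own, which is where Pallas comes in.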

Using JAX’s Pallas - a specialized kernel language - engineers can now “hand-craft” these fused operations. Pallas allows the developer to manage the SRAM (the super-fast, on-chip memory) directly.

- Direct Control: Pallas lets you tell the chip: “Keep this specific block of data in on-chip memory for the next five steps. Do not send it back to main memory.”

- Overlapping: While the chip is calculating Step 1, it is already pre-fetching the data for Step 2 in the background.
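A minimal Pallas sketch of both ideas, assuming a recent JAX release that ships `jax.experimental.pallas`. Each grid step brings one row-block of `x` and `y` on chip (as described by the `BlockSpec`s), runs the whole multiply-add-activation chain there, and writes a single result block back. On TPU, Pallas uses the grid to pipeline these block copies, so the next block can be fetched while the current one is computed. `interpret=True` runs the kernel in interpreter mode so it can be checked on CPU; drop it on real accelerators. The kernel, `fused_op`, and the block size are illustrative, not a production megakernel.

```python
import jax
import jax.numpy as jnp
from jax.experimental import pallas as pl


def fused_kernel(x_ref, y_ref, o_ref):
    # Refs point at the current block (held in VMEM on TPU). Every
    # intermediate below stays on chip; only the final store goes back out.
    h = x_ref[...] * y_ref[...]          # Multiply
    h = h + 1.0                          # Add
    o_ref[...] = jnp.maximum(h, 0.0)     # Activation, then one write-back


def fused_op(x, y, block_rows=8):
    n, d = x.shape
    assert n % block_rows == 0, "sketch assumes rows divide evenly into blocks"
    # Grid step i works on rows [i * block_rows, (i + 1) * block_rows).
    spec = pl.BlockSpec(block_shape=(block_rows, d), index_map=lambda i: (i, 0))
    return pl.pallas_call(
        fused_kernel,
        out_shape=jax.ShapeDtypeStruct(x.shape, x.dtype),
        grid=(n // block_rows,),
        in_specs=[spec, spec],
        out_specs=spec,
        interpret=True,  # interpreter mode for CPU testing
    )(x, y)


x = jnp.arange(16 * 128, dtype=jnp.float32).reshape(16, 128) / 1000.0 - 1.0
y = jnp.full((16, 128), 3.0, dtype=jnp.float32)
out = fused_op(x, y)
reference = jnp.maximum(x * y + 1.0, 0.0)
print(bool(jnp.allclose(out, reference)))
```

The design choice is the block size: larger blocks amortize copy overhead but must still fit in on-chip memory alongside the double-buffered next block.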

This “Model Fusion” is what separates modern production stacks from research prototypes. It “squeezes the juice” out of the hardware by ensuring the ALUs are never idling. Libraries like Tokamax have further democratized this by providing pre-optimized Megakernels for common bottlenecks like FlashAttention, allowing teams to gain “Expert-Level” MFU without needing a PhD in CUDA programming.

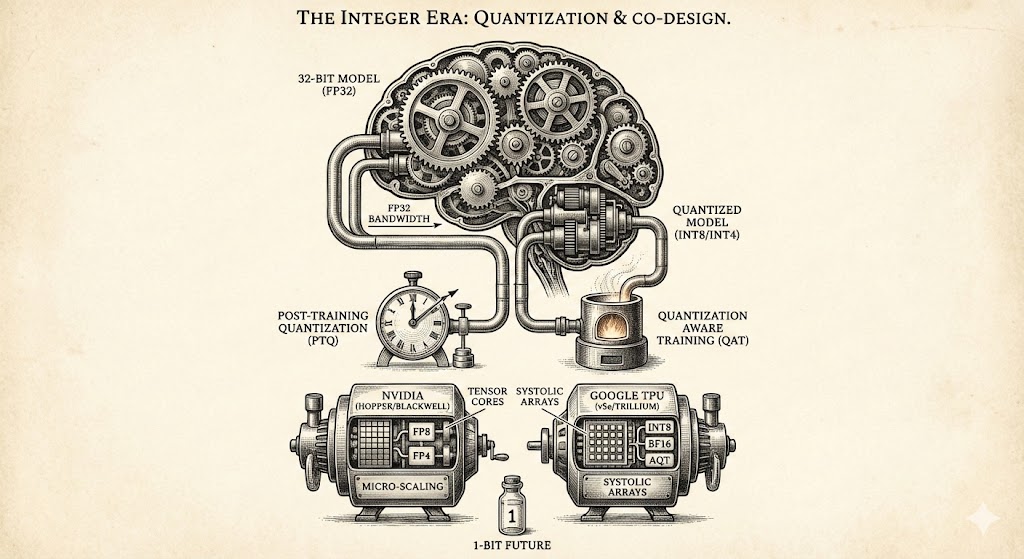

Accelerator Diversification: Beyond the General-Purpose GPU

The second major driver for JAX is the “Cambrian Explosion” of silicon. In 2025, the general-purpose GPU’s near-monopoly is eroding. We are seeing the rise of ASICs (Application-Specific Integrated Circuits) designed specifically for tensor math - Google’s TPUs, Amazon’s Trainium, and startups like Groq with its Language Processing Units (LPUs).

JAX acts as the Universal Adapter. Because it is built on the OpenXLA compiler, the code is “decoupled” from the silicon. JAX describes the math, and XLA translates that math into the specific instructions for whichever chip you have available today - be it an NVIDIA B200, an AMD MI325X, or a TPU v7.
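A minimal sketch of what “decoupled from the silicon” looks like in code: the function contains no device-specific calls, `jax.devices()` reports whichever backend is installed, and `.lower(...)` exposes the intermediate representation JAX hands to XLA before hardware-specific compilation (StableHLO text by default in recent JAX versions). The function `mlp_block` and its shapes are hypothetical. Note that hand-written Pallas kernels are more hardware-specific than plain JAX code like this.

```python
import jax
import jax.numpy as jnp


@jax.jit
def mlp_block(w1, w2, x):
    # Pure math: no device, stream, or kernel-launch logic anywhere.
    return jax.nn.relu(x @ w1) @ w2


print("backend:", jax.default_backend(), "devices:", jax.devices())

k1, k2, k3 = jax.random.split(jax.random.key(42), 3)
w1 = jax.random.normal(k1, (64, 256))
w2 = jax.random.normal(k2, (256, 64))
x = jax.random.normal(k3, (8, 64))

y = mlp_block(w1, w2, x)                        # compiled for the current backend
ir_text = mlp_block.lower(w1, w2, x).as_text()  # IR handed to XLA
print(y.shape)
print(ir_text.splitlines()[0])
```

Running the same file on a CPU laptop, a GPU node, or a TPU VM changes only what `jax.devices()` prints and which XLA backend compiles the IR.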

This Hardware Optionality is a critical risk-management tool. It allows a CIO to move a workload from an expensive on-prem GPU cluster to a spot-priced TPU pod in the cloud with minimal friction.

The Organizational Challenge: Bridging the Skill Gap

The primary hurdle to JAX adoption isn’t technical; it’s cultural. Most of the talent pool is trained in PyTorch, which favors an “Imperative” style (execute Step A, then Step B). JAX is “Functional” and “Declarative” - it requires a different mental model.
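The difference in mental model is easiest to see in a training step. In PyTorch, parameters are objects mutated in place (`loss.backward()`, then `optimizer.step()`). In JAX, a training step is a pure function: it takes parameters and data and returns new parameters, and `jax.grad` transforms the loss function into its gradient function. The linear-regression setup below is a hypothetical toy, and plain SGD stands in for a real optimizer library.

```python
import jax
import jax.numpy as jnp


def loss_fn(params, x, y):
    pred = x @ params["w"] + params["b"]
    return jnp.mean((pred - y) ** 2)


@jax.jit
def train_step(params, x, y, lr=0.1):
    # No in-place mutation: gradients and updated params are new values.
    grads = jax.grad(loss_fn)(params, x, y)
    return jax.tree_util.tree_map(lambda p, g: p - lr * g, params, grads)


x = jax.random.normal(jax.random.key(0), (32, 2))
y = x @ jnp.array([2.0, -3.0]) + 0.5          # hypothetical target
params = {"w": jnp.zeros(2), "b": jnp.array(0.0)}

initial_loss = loss_fn(params, x, y)
for _ in range(200):
    params = train_step(params, x, y)          # rebind the name, never mutate
final_loss = loss_fn(params, x, y)
print(float(initial_loss), float(final_loss))
```

Because `train_step` has no hidden state, the compiler can trace it once and optimize it as a whole, which is the property XLA relies on for fusion.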

However, the emergence of Keras 3 has fundamentally changed this ROI calculation. Keras 3 allows developers to write code in a high-level, “human-readable” format that can run on any backend - PyTorch, JAX, or TensorFlow. This allows organizations to hire for PyTorch familiarity while deploying on a JAX/XLA engine.

The New Efficiency Frontier

We are entering a phase of AI maturity where “bigger” is no longer the only way to be “better.” The companies that will define the next three years are those that recognize compute as a finite, precious resource.

By investing in JAX and Megakernel expertise, you are not just choosing a software library. You are building an organization that is:

- Resilient: Capable of running on any silicon, shielding you from supply chain shocks.

- Efficient: Realizing 2x the output from the same power and hardware footprint via maximized MFU.

- Scalable: Utilizing deterministic, compiler-driven workflows that reduce the “ghost bugs” that plague large-scale distributed training.
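One concrete source of that determinism is JAX’s explicit random-number handling: randomness comes from keys passed in as arguments rather than from hidden global state, so the same key yields the same values on every run and every host. A minimal sketch; `init_weights`, the seed, and the shapes are arbitrary illustrations.

```python
import jax
import jax.numpy as jnp


def init_weights(key, shape=(4, 4)):
    # Output depends only on the key passed in: no global RNG state.
    return jax.random.normal(key, shape)


key = jax.random.key(2026)
w_a = init_weights(key)
w_b = init_weights(key)                          # same key -> identical weights
w_c = init_weights(jax.random.fold_in(key, 1))   # derived key -> different stream

print(bool(jnp.array_equal(w_a, w_b)), bool(jnp.array_equal(w_a, w_c)))
```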

The “Scarcity Trap” is real, but for the strategically minded, it is also a massive opportunity to out-compete those who are still trying to solve 2025 problems with 2020 tactics.