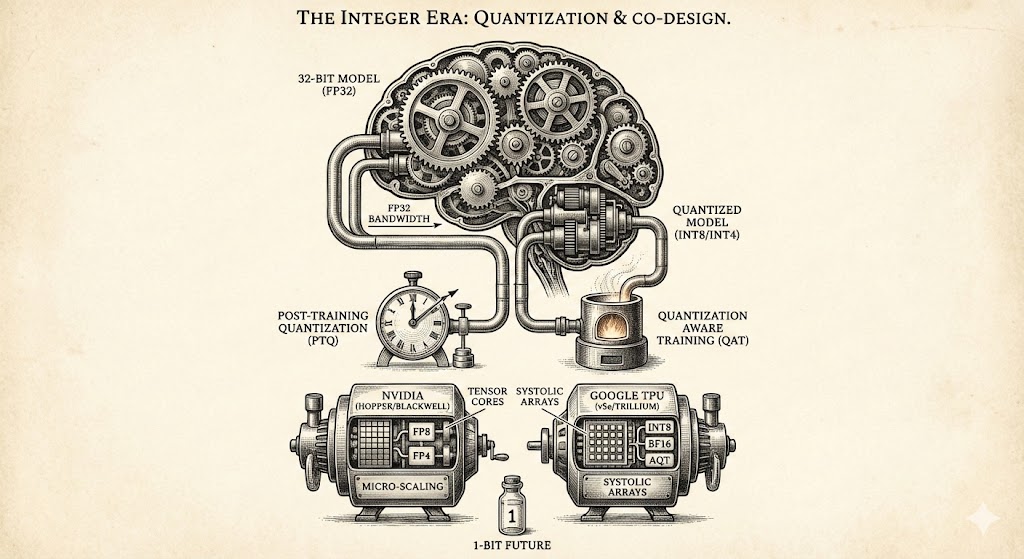

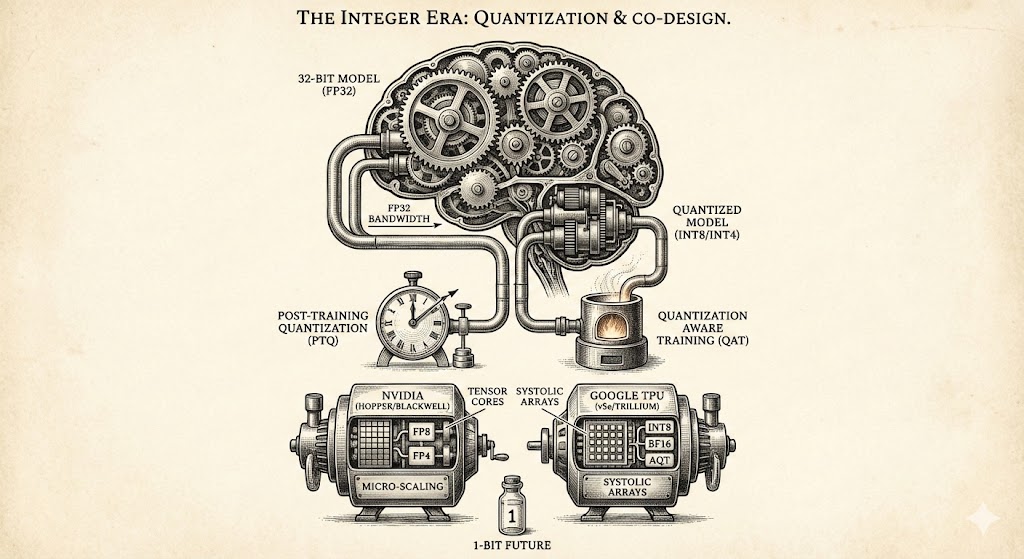

AI Quantization and Hardware Co-Design

Explore how quantization and hardware co-design overcome memory bottlenecks, comparing NVIDIA and Google architectures while looking toward the 1-bit future of efficient AI model development.

FP4 isn't just 'lower precision': it requires a fundamental rethink of how activation outliers are handled. We dive into the bit-level implementation of NVFP4, Micro-Tensor Scaling, and the new Tensor Memory hierarchy.
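To make the outlier problem concrete, here is a minimal pure-Python sketch of block-scaled 4-bit quantization. It assumes E2M1 elements (representable magnitudes 0, 0.5, 1, 1.5, 2, 3, 4, 6) and one shared scale per block of 16 values, which is our understanding of the NVFP4 layout. It keeps the scale in full precision, whereas the real format stores it in FP8 and adds a per-tensor scale, so treat it as an illustration rather than a reference implementation. The names `quantize_block` and `quantize` are hypothetical.

```python
# E2M1: 1 sign bit, 2 exponent bits, 1 mantissa bit -> 8 magnitudes per sign.
E2M1_GRID = [0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0]
BLOCK = 16  # assumed number of elements sharing one scale factor


def quantize_block(block):
    """Fake-quantize one block: scale, snap to the E2M1 grid, rescale."""
    amax = max(abs(x) for x in block)
    if amax == 0.0:
        return list(block)
    # Map the largest magnitude in the block onto the largest grid value (6.0).
    # Simplification: the scale stays a Python float instead of being rounded to FP8.
    scale = amax / E2M1_GRID[-1]
    out = []
    for x in block:
        # Nearest representable magnitude for the scaled value.
        mag = min(E2M1_GRID, key=lambda g: abs(abs(x) / scale - g))
        out.append((mag if x >= 0 else -mag) * scale)
    return out


def quantize(values, block=BLOCK):
    """Apply quantize_block to consecutive blocks of the input list."""
    out = []
    for i in range(0, len(values), block):
        out.extend(quantize_block(values[i:i + block]))
    return out


# A block of small activations, and the same block with one outlier.
small = [0.01 * (i + 1) for i in range(16)]
with_outlier = small[:15] + [8.0]

print(quantize(small))
print(quantize(with_outlier))
```

In the second call the single outlier sets the block scale, so every other value in the block falls below the smallest non-zero step and rounds to zero. That is why outlier handling, not just bit width, dominates FP4 accuracy, and why small blocks with their own scales help contain the damage.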