· AI at Scale · 6 min read

Network Design for AI Workloads

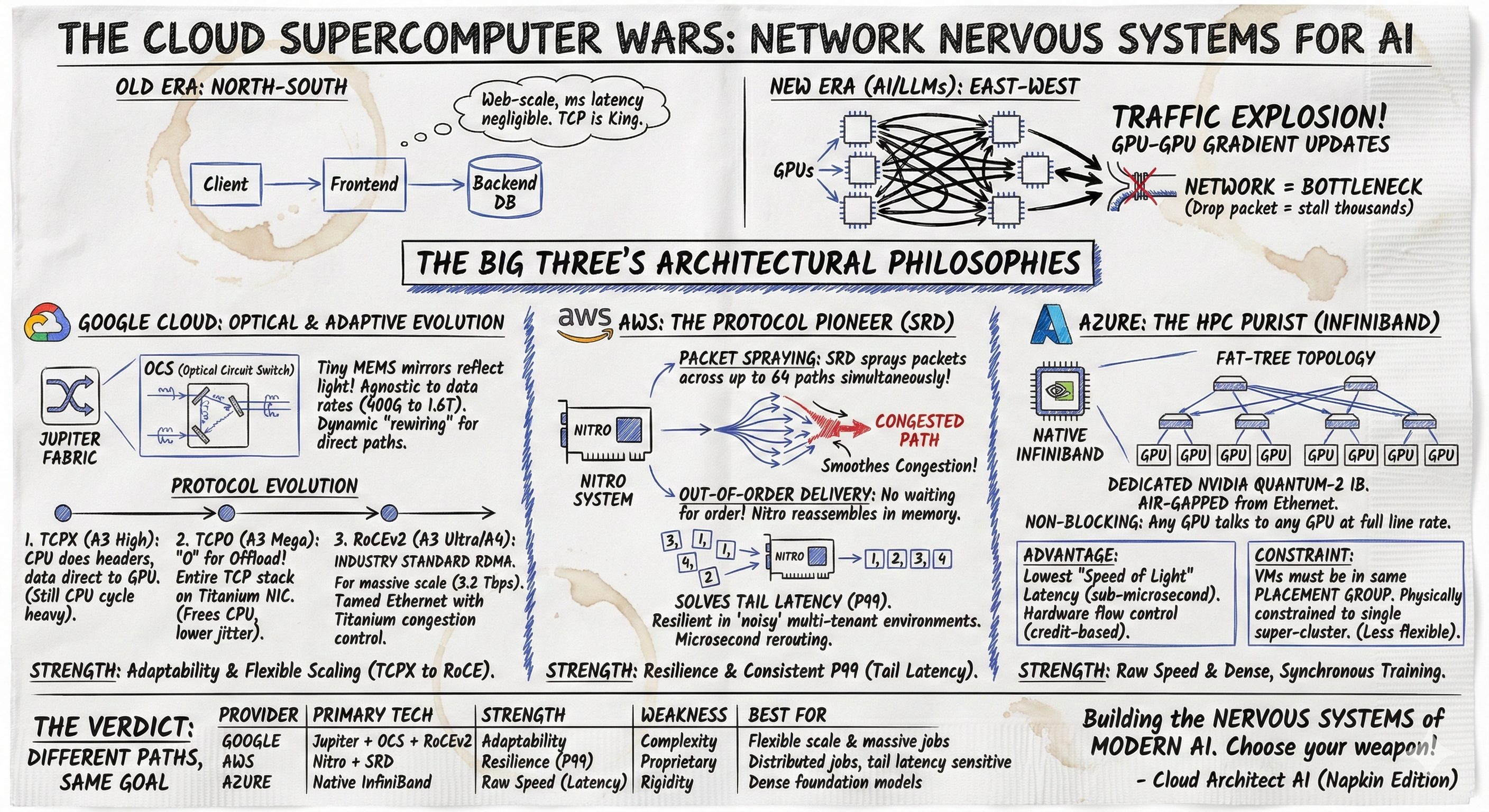

Generative AI has shifted data center traffic patterns, making network performance the new bottleneck for model training. This post contrasts how the "Big Three" cloud providers use distinct architectures to solve this challenge. We examine Google Cloud’s evolution from its custom GPUDirect-TCPX to standard RoCEv2 on its optical Jupiter fabric, AWS’s Scalable Reliable Datagram (SRD) protocol, designed to mitigate Ethernet congestion, and Azure’s adoption of native InfiniBand.

In the traditional era of web-scale computing, the “North-South” traffic pattern reigned supreme. Client requests hit a frontend, queried a backend database, and returned a response. In this world, a millisecond of latency here or there was negligible, and standard TCP/IP was the undisputed king of transport protocols.

The rise of Generative AI and Large Language Models (LLMs) has inverted this paradigm. Training a frontier model such as GPT-4 or Gemini requires thousands of GPUs to function as a single, synchronous supercomputer. The dominant traffic is now “East-West” - GPUs exchanging massive gradient updates with the other GPUs in the cluster. In this environment, the network is no longer just plumbing; it is the bottleneck. Because every training step waits on the slowest exchange, a single dropped or delayed packet can stall thousands of GPUs, burning expensive accelerator time while the silicon sits idle.
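To get a feel for the volume of East-West traffic, here is a back-of-the-envelope sketch. It assumes a ring all-reduce (the post does not say which collective is used), in which each GPU sends roughly 2(N-1)/N times the gradient size per step. The function names and the example numbers are hypothetical, and the estimate ignores latency, compute overlap, and hierarchical collectives.

```python
def ring_allreduce_bytes_per_gpu(gradient_bytes: float, num_gpus: int) -> float:
    """Bytes each GPU sends in one ring all-reduce (assumed algorithm)."""
    if num_gpus < 2:
        return 0.0  # a single GPU has nothing to exchange
    return 2 * (num_gpus - 1) / num_gpus * gradient_bytes


def allreduce_seconds(gradient_bytes: float, num_gpus: int, link_gbps: float) -> float:
    """Bandwidth-only lower bound on the time for one all-reduce."""
    bytes_per_second = link_gbps * 1e9 / 8  # Gbps -> bytes/s
    return ring_allreduce_bytes_per_gpu(gradient_bytes, num_gpus) / bytes_per_second


# Hypothetical example: 1 billion fp16 gradients (2 bytes each), 1024 GPUs, 400 Gbps links.
example_seconds = allreduce_seconds(2e9, 1024, 400)
print(f"bandwidth-only all-reduce time: {example_seconds:.4f} s")
```

Even in this idealized model the per-GPU traffic barely shrinks as the cluster grows, which is why per-link bandwidth and tail latency dominate the discussion that follows.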

To solve this, the “Big Three” cloud providers - Google Cloud, AWS, and Azure - have adopted radically different architectural philosophies. This post dives into the engineering behind Google’s Jupiter fabric, AWS’s SRD protocol, and Azure’s InfiniBand integration to understand how they are building the nervous systems of modern AI.

Google Cloud: From TCPX to RoCE

Google’s approach has always been defined by a refusal to buy off-the-shelf “black box” hardware. Instead, they rely on Jupiter, a data center fabric that combines optical physics with deep software-defined networking (SDN) capabilities.

Optical Circuit Switching (OCS)

At the core of Jupiter is the Optical Circuit Switch (OCS). Unlike traditional switches that convert optical signals to electricity to route them (O-E-O conversion), OCS uses tiny MEMS mirrors to physically reflect beams of light from one fiber to another. This makes the core fabric agnostic to data rates; whether the signal is 400Gbps or 1.6Tbps, the mirrors simply reflect the light.1

This architecture allows Google to dynamically “rewire” the topology of the data center in real-time, creating direct optical paths between racks of GPUs involved in a massive training job, effectively reducing hop counts and latency.2
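One way to picture the OCS is as a reconfigurable port-to-port mapping that never inspects what passes through it. The toy model below is only an illustration under that assumption; the class and method names are hypothetical and do not correspond to any Google API.

```python
class OpticalCircuitSwitch:
    """Toy model: each input port is mirrored to at most one output port."""

    def __init__(self, num_ports: int):
        self.num_ports = num_ports
        self.circuits = {}  # input port -> output port

    def connect(self, in_port: int, out_port: int) -> None:
        """Point the 'mirror' for in_port at out_port (re-pointing is allowed)."""
        for port in (in_port, out_port):
            if not 0 <= port < self.num_ports:
                raise ValueError(f"port {port} out of range")
        for other_in, other_out in self.circuits.items():
            if other_out == out_port and other_in != in_port:
                raise ValueError(f"output port {out_port} already in use")
        self.circuits[in_port] = out_port

    def forward(self, in_port: int, signal):
        """Pass the signal through untouched; the switch is rate-agnostic."""
        out_port = self.circuits.get(in_port)
        return None if out_port is None else (out_port, signal)


ocs = OpticalCircuitSwitch(num_ports=4)
ocs.connect(0, 2)   # direct path between two racks
ocs.connect(0, 3)   # "rewire" the same input to a different rack
```

Note that `forward` never parses the signal, which mirrors the point above: the same mapping works whether the light carries 400Gbps or 1.6Tbps.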

Protocol Evolution: TCPX, TCPO, and RoCE

Until recently, Google avoided specialized HPC networks like InfiniBand. Instead, they engineered custom solutions to make Ethernet perform like a supercomputer network. This strategy has evolved through three distinct phases:

GPUDirect-TCPX (A3 High)

Google engineered GPUDirect-TCPX to cut the overhead of standard TCP. Standard TCP copies data several times between the NIC and CPU-managed host memory before it reaches the GPU, which kills performance.

- How it works: TCPX uses a Header-Data Split mechanism. When a packet arrives, the NIC separates the header from the payload. The CPU handles the complex TCP headers (control path), while the massive payload is DMA’d directly into the GPU memory (data path).3

- The remaining bottleneck: While this bypassed the kernel for data, the CPU still had to process headers, consuming cycles that could be used for AI computation.
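The header-data split described above can be sketched in a few lines. This is a software-only illustration, not the NIC's actual logic: the fixed 40-byte header length, the `bytearray` standing in for GPU memory, and the function name are all assumptions made for the example.

```python
from typing import Tuple

HEADER_LEN = 40  # assumed: 20-byte IPv4 header + 20-byte TCP header, no options


def header_data_split(packet: bytes, gpu_buffer: bytearray, offset: int) -> Tuple[bytes, int]:
    """Return the header for the CPU and copy the payload into the 'GPU' buffer."""
    header = packet[:HEADER_LEN]    # control path: handled by the CPU
    payload = packet[HEADER_LEN:]   # data path: placed directly in GPU memory
    end = offset + len(payload)
    if end > len(gpu_buffer):
        raise ValueError("payload does not fit in the GPU buffer")
    gpu_buffer[offset:end] = payload
    return header, end


gpu_memory = bytearray(8)  # stand-in for a registered GPU buffer
hdr, next_offset = header_data_split(b"H" * HEADER_LEN + b"abcd", gpu_memory, 0)
hdr, next_offset = header_data_split(b"H" * HEADER_LEN + b"efgh", gpu_memory, next_offset)
```

The CPU still receives `hdr` for every packet, which is exactly the remaining cost noted in the list above.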

GPUDirect-TCPO (A3 Mega)

For the A3 Mega instances, Google introduced GPUDirect-TCPO (“O” stands for Offload).

- The difference: Unlike TCPX, where the CPU processes headers, TCPO offloads the entire TCP protocol stack to the NIC hardware (specifically the Titanium adapter). This removes TCP processing from the host CPU, reducing jitter and allowing for higher sustained bandwidth (up to 400 Gbps on A3 Mega) while keeping the universality of the TCP protocol.

The Pivot to RoCEv2 (A3 Ultra & A4)

With the arrival of the NVIDIA H200 (A3 Ultra) and Blackwell (A4) generations, bandwidth requirements jumped to 3.2 Tbps. At this scale, even optimized TCP becomes a bottleneck.

- Google has officially pivoted to RoCEv2 (RDMA over Converged Ethernet) for its flagship AI instances. This is the industry-standard way of doing RDMA over Ethernet.

- Why the change: RoCEv2 provides the low latency and high throughput (3.2 Tbps) that massive clusters require, with performance comparable to InfiniBand. Google enables this on the Jupiter fabric by using Titanium offload adapters to manage the congestion control that RoCE requires, effectively “taming” the Ethernet fabric to support lossless AI traffic.4

AWS: Protocol Innovation

While Google hacked the stack and Azure bought the hardware (more on that later), AWS decided the problem was the transport protocol itself. They concluded that TCP, designed in the 1970s, was fundamentally broken for high-performance datacenter workloads.

SRD: The “Anti-TCP”

AWS introduced the Scalable Reliable Datagram (SRD), a custom protocol running on their Nitro offload cards. SRD challenges two core tenets of TCP:

- Packet Spraying: In standard networks (ECMP), a flow takes a single path. If that path is congested, the flow suffers. SRD sprays packets across up to 64 different paths simultaneously, regardless of order.

- Out-of-Order Delivery: TCP wastes time reordering packets at the receiver. SRD delivers packets as they arrive and uses the Nitro hardware to reassemble them directly in the memory buffer.
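The two ideas above fit together: if every packet carries its byte offset, the receiver can write it straight into place no matter which path it took or when it arrived. The sketch below is a toy simulation under that assumption. Random path selection and the function names are hypothetical, and real SRD runs on Nitro hardware with its own path-selection logic.

```python
import random

NUM_PATHS = 64  # the "up to 64" paths mentioned above


def spray(message: bytes, mtu: int, rng: random.Random):
    """Split a message into packets and assign each one to a path."""
    packets = []
    for offset in range(0, len(message), mtu):
        path = rng.randrange(NUM_PATHS)  # assumed: random choice per packet
        packets.append((path, offset, message[offset:offset + mtu]))
    return packets


def deliver_out_of_order(packets, total_len: int, rng: random.Random) -> bytes:
    """Place packets into the destination buffer in whatever order they arrive."""
    arrived = list(packets)
    rng.shuffle(arrived)  # simulate different delays on different paths
    buffer = bytearray(total_len)
    for _path, offset, chunk in arrived:
        buffer[offset:offset + len(chunk)] = chunk  # no reordering queue needed
    return bytes(buffer)


message = bytes(range(256)) * 10
packets = spray(message, mtu=100, rng=random.Random(1))
received = deliver_out_of_order(packets, len(message), random.Random(2))
```

Because placement depends only on the offset, no packet ever waits behind a slower one, which is the property the next section builds on.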

Solving the “Tail Latency” Problem

This approach specifically targets tail latency (P99). In a cluster of 10,000 GPUs, training speed is dictated by the slowest link. By spraying packets across all available paths, SRD smooths out congestion. If one of 64 paths fails or lags, only about 1/64th of the data is affected, and the Nitro card’s microsecond-scale congestion control quickly steers traffic away from it. This makes AWS a resilient platform in “noisy” multi-tenant environments.
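A quick calculation shows why the tail matters so much at this scale. The sketch below assumes each worker's flow independently hits a slow path with some small probability; the probability value and function names are hypothetical, chosen only to illustrate the arithmetic.

```python
def prob_any_straggler(p_slow: float, num_workers: int) -> float:
    """Chance that at least one worker is slowed, assuming independence."""
    return 1.0 - (1.0 - p_slow) ** num_workers


def affected_fraction(num_paths: int, slow_paths: int = 1) -> float:
    """Share of a sprayed flow's data that lands on the slow paths."""
    return slow_paths / num_paths


# Hypothetical: a 0.1% chance per worker of hitting a congested path.
single_path_risk = prob_any_straggler(0.001, 10_000)
sprayed_share = affected_fraction(64)
print(f"P(at least one straggler among 10,000 workers): {single_path_risk:.5f}")
print(f"Share of data on one bad path out of 64: {sprayed_share:.4f}")
```

With single-path flows, a rare event per worker becomes a near-certainty per training step; with spraying, the same bad path touches only a small slice of each flow.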

Azure: The HPC Purist

Azure took the most pragmatic path: if you want to build a supercomputer, use supercomputer networking. They integrated InfiniBand, the gold standard of High-Performance Computing (HPC), directly into their cloud.

Native InfiniBand and Fat-Trees

Azure’s H100 instances come with dedicated NVIDIA Quantum-2 InfiniBand networking, completely air-gapped from the standard Ethernet tenant network.7 They use a Fat-Tree topology, which is non-blocking: any GPU can talk to any other GPU at full line rate without contention.

- This offers the absolute lowest “speed of light” latency (sub-microsecond) because the hardware handles flow control (credit-based) without any software overhead.8

- The constraint: VMs must be in the same “Placement Group.” You cannot stretch an InfiniBand fabric across the world; it is physically constrained to a single super-cluster. This makes Azure’s performance unbeatable for dense, synchronous jobs, but less flexible than the Ethernet-based approaches of Google and AWS.9
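The credit-based flow control mentioned above is easy to model: the sender may transmit only while it holds credits, and each credit represents a free buffer at the receiver. The sketch below is a simplified software illustration with hypothetical names; in InfiniBand this bookkeeping happens in link hardware.

```python
from collections import deque


class CreditLink:
    """Toy link: the sender spends a credit per packet, the receiver returns it."""

    def __init__(self, receiver_buffers: int):
        self.credits = receiver_buffers  # one credit per free receive buffer
        self.rx_queue = deque()

    def try_send(self, packet) -> bool:
        """Send only if a credit is available; otherwise the sender waits."""
        if self.credits == 0:
            return False  # back-pressure instead of a dropped packet
        self.credits -= 1
        self.rx_queue.append(packet)
        return True

    def receiver_drain(self):
        """Consume one packet and hand its credit back to the sender."""
        if not self.rx_queue:
            return None
        packet = self.rx_queue.popleft()
        self.credits += 1
        return packet


link = CreditLink(receiver_buffers=2)
sent = [link.try_send(p) for p in ("a", "b", "c")]  # third send must wait
```

The key property is that the receive queue can never overflow, so the fabric stays lossless without retransmissions.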

The Verdict: Comparing the Clouds

Each provider has carved a unique niche. Azure is the choice for purists who want raw, “bare-metal” supercomputing performance. AWS is the choice for resilience and scale, leveraging their Nitro architecture to make Ethernet reliable enough for AI. Google is the choice for flexibility, utilizing optical switching and now converging on standard RoCEv2 for its highest-end workloads.

Technical Comparison Table

| Feature | Google Cloud (GCP) | AWS | Microsoft Azure |
|---|---|---|---|
| Primary Network Tech | Jupiter Fabric + OCS | Nitro System + EFA | NVIDIA InfiniBand |
| Transport Protocol | GPUDirect-TCPX (A3 High); GPUDirect-TCPO (A3 Mega); RoCEv2 (A3 Ultra/A4) | SRD (Scalable Reliable Datagram) | Native InfiniBand |
| Congestion Control | Titanium Adapter (Edge-based) & Centralized SDN (Firepath) | Multipath “Packet Spraying” (ECMP agnostic) | Hardware-based Credit Flow Control |
| Latency Profile | Low (TCPX/TCPO) to Ultra-Low (RoCEv2) | Consistent P99 (Tail Latency resilience) | Ultra-Low (Sub-microsecond) |
| Topology | Dynamic Mesh (Optical Circuit Switching) | Clos / Folded Clos | Non-blocking Fat-Tree |
| Key Strength | Adaptability: Shifts from TCPX to RoCE depending on tier; OCS allows dynamic topology rewiring.4 | Resilience: SRD handles “noisy neighbors” and link failures better than any other.5 | Raw Speed: Native InfiniBand offers the highest throughput and lowest latency.8 |
| Key Weakness | Complexity: Multiple protocols (TCPX, TCPO, RoCE) across different VM generations can be confusing. | Proprietary: SRD is unique to AWS; moving to/from it requires re-tuning NCCL. | Rigidity: VMs must be in specific “Placement Groups”; harder to fragment clusters.9 |
| Best For | Flexible scaling & cost-efficiency (TCPX) or massive scale training (RoCE). | Massive distributed jobs (MoE) where tail latency kills performance. | Dense, synchronous foundation model training (e.g., GPT-4 style). |