Generality vs. Specialization - The Real Difference Between GPUs and TPUs

It's not just about specs. This post breaks down the core trade-off between the GPU's versatile power and the TPU's hyper-efficient, specialized design for AI workloads.

A guide for technology executives on how to move beyond proofs-of-concept and realize sustainable, transformative value from agentic AI by focusing on business-first strategies.

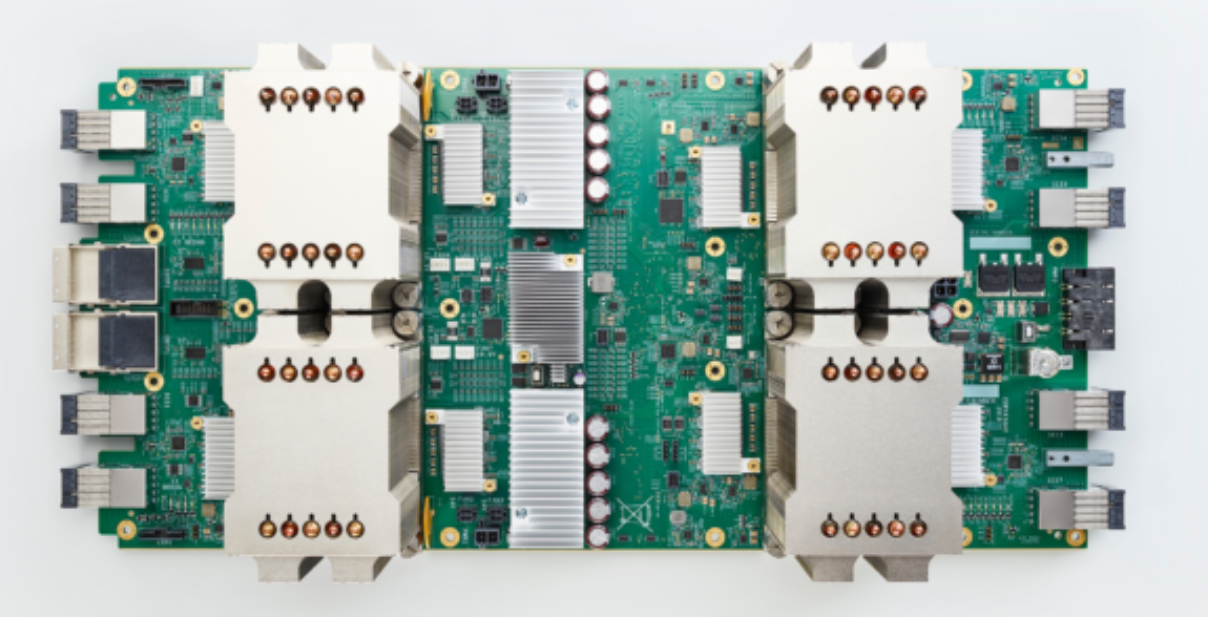

Large-scale recommendation models involve a two-part process. First, a "sparse lookup" phase retrieves data from memory, a task that is challenging for standard GPUs. Second, a "dense computation" phase handles intense calculations, where GPUs perform well. This disparity creates a performance bottleneck. Google's TPUs address this with a specialized SparseCore processor, specifically designed for the lookup phase. By optimizing for both memory-intensive lookups and heavy computations, this integrated architecture provides superior performance for large-scale models.
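The two phases described above can be sketched in a few lines of plain Python. This is only an illustrative toy, not how a GPU or a TPU SparseCore implements it: the table sizes, the sum-pooling step, the single ReLU layer, and all function names (`sparse_lookup`, `dense_compute`, `recommend_score`) are assumptions made for the example.

```python
import random

# Hypothetical sizes, chosen only for illustration.
VOCAB_SIZE = 1000   # rows in the embedding table
EMBED_DIM = 4       # width of each embedding vector
HIDDEN_DIM = 3      # width of the dense layer output

random.seed(0)
embedding_table = [[random.random() for _ in range(EMBED_DIM)]
                   for _ in range(VOCAB_SIZE)]
dense_weights = [[random.random() for _ in range(EMBED_DIM)]
                 for _ in range(HIDDEN_DIM)]


def sparse_lookup(table, ids):
    """Phase 1: gather rows at scattered indices and sum-pool them.

    The work here is dominated by irregular memory reads, not arithmetic.
    """
    pooled = [0.0] * len(table[0])
    for i in ids:
        row = table[i]  # jump to an arbitrary row of the table
        for d, value in enumerate(row):
            pooled[d] += value
    return pooled


def dense_compute(weights, vec):
    """Phase 2: matrix-vector product followed by ReLU.

    The work here is dominated by regular multiply-accumulate operations.
    """
    return [max(0.0, sum(w * x for w, x in zip(row, vec)))
            for row in weights]


def recommend_score(ids):
    """Run both phases back to back for one list of feature IDs."""
    return dense_compute(dense_weights, sparse_lookup(embedding_table, ids))
```

Calling `recommend_score([3, 977, 42])` returns a list of `HIDDEN_DIM` values. The point of the sketch is the contrast: the first function touches a handful of scattered rows in a large table, while the second does dense arithmetic on a small, contiguous vector.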

Technical debt is not new. This weekend I went down the trail of reading up on how it is affected by the increased throughput of code generation thanks to AI. It turns out AI code generation is a double-edged sword. Lightning-fast code creation can mask underlying architectural flaws, poor naming, and inadequate testing, often producing more uncoordinated code. The right approach? Thoughtful AI integration that learns from existing codebases rather than blindly generating new code.

Some ideas on what enterprises should watch out for when starting new projects with emerging technology. This post covers scoping, ownership, and technology changes. Most of it is obvious but less commonly

Understanding an organisation's ability to adapt to cross-functional changes.